How We Created an In-Browser Kubernetes Experience

Building an in-browser Kubernetes experience is a fairly unusual product decision. Here's how and why we did it.

Before we dive into the technical weeds of how our cloud shell came to be, it’s worth explaining why we built a cloud shell (our in-browser Kubernetes experience) in the first place. After all, it’s a fairly unusual product decision.

For those of you unfamiliar with our product, Plural is an open-source DevOps platform that simplifies deploying open-source software on Kubernetes. Even if you aren’t a Kubernetes power user, you have probably heard, perhaps from a developer you know, how steep its learning curve is. For starters, deploying and managing a Kubernetes cluster properly requires a long list of tools.

In our case, we relied on the following tools:

- Helm

- Terraform

- kubectl

- git

- a cloud provider CLI (e.g., AWS, GCP, Azure)

- and the Plural CLI

In addition to installing all of the above, you need to properly configure their credentials, make sure each one is running the correct version, and iron out all the other details of running the software locally.

As you can see, this is a time-consuming task, and it requires at least a basic knowledge of Helm and Terraform to deploy and manage applications properly.

What We Learned from Our Early Adopters

When we originally built Plural, we assumed that most of our users would already have some familiarity with deploying infrastructure, and that the development environment would be familiar to them, if not already installed on their systems.

We quickly learned that this assumption was wrong: a good chunk of our power users actually had little knowledge of deploying infrastructure on Kubernetes. Instead, they came from data science and engineering backgrounds and used Plural to deploy and manage some of the most prominent open-source technologies, such as Airbyte and Airflow.

In most cases, users did not have a standard DevOps toolchain installed and were hitting roadblocks with most of these dependencies, which limited how much value they could get from our software.

The underlying technology of our platform was not the issue, and the feedback from our early adopters was enlightening, but we knew we had to solve this common roadblock quickly. When our engineering team sat down to tackle it, they came up with two ways to close our users’ DevOps knowledge gap.

The first solution was to simply give users a Docker image with everything pre-installed and ready to go. However, getting a Docker container to work with a local git repository and your local cloud credentials is a nontrivial challenge in itself, so we were back to square one.

The next natural path was to run and configure that Docker image on our own infrastructure, removing that step from the user experience entirely. Initially, converting our Docker image into a cloud-shell-style experience didn’t seem that challenging: Kubernetes has a remote exec API, and we figured we could use it to make this work.

Seems simple enough, right? Well, sort of, but not really.

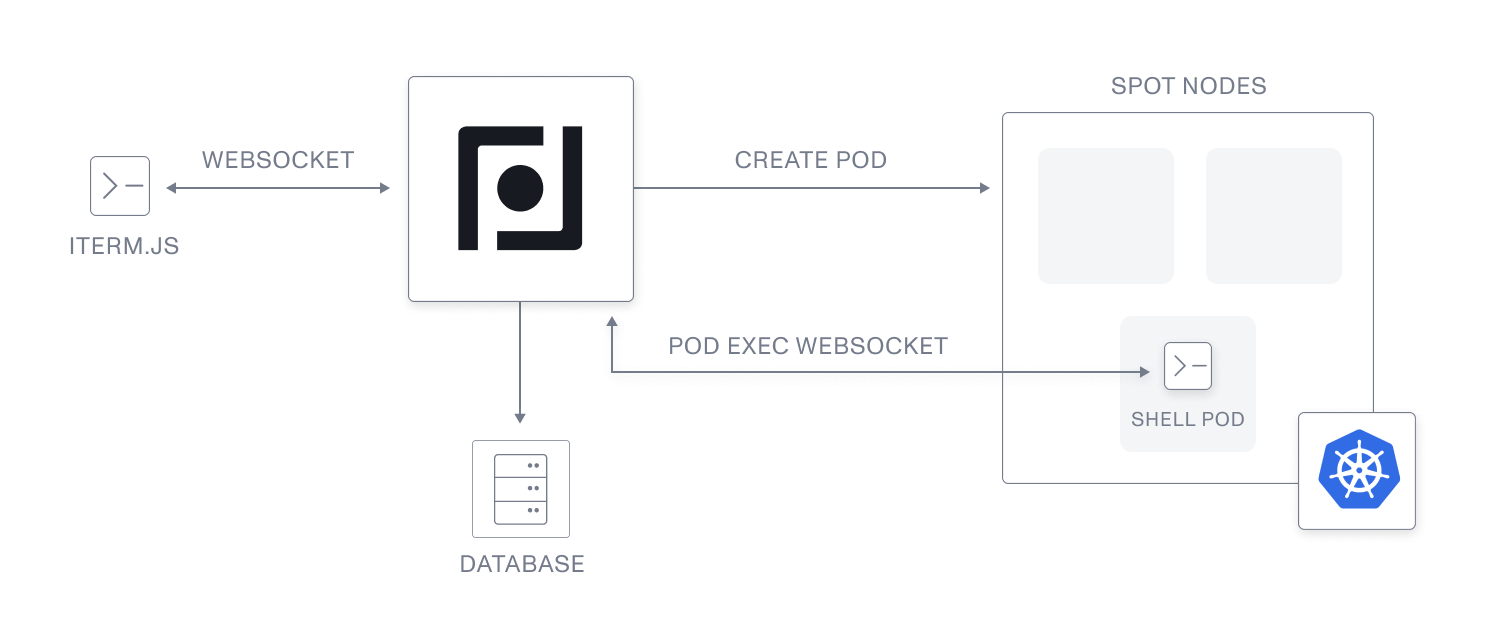

Basic Architecture of Plural’s Cloud Shell

Using the API turned out to be a little more challenging than we expected. We overcame this with the following general system design:

- We have a set of GraphQL APIs to manage the creation, polling, and restart of a cloud shell instance. The API both records a reference to the shell pod in our database and creates the pod in a k8s cluster.

- We place all shell pods in a dedicated, network-isolated k8s node group that runs on spot instances to keep costs as low as possible.

- An operator tracks a pod label that declares an expiry and removes shell instances after six hours. A graceful shutdown mechanism first ensures uncommitted git changes are pushed upstream, so we never destroy state recklessly.

- Our frontend talks to a WebSocket API that lets us push and receive the browser equivalent of stdin and stdout, all base64-encoded and rendered with xterm.js in our React app.
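The expiry-label idea from the list above is simple enough to sketch. What follows is a minimal, hypothetical illustration, not the operator Plural runs: the label key `shell-expiry` and the use of an absolute Unix timestamp as the label value are assumptions, and a real operator would also watch pods through the Kubernetes API and run the graceful shutdown before deleting anything.

```elixir
defmodule ShellExpiry do
  # Hypothetical label key; the real operator's label name is not given in this post.
  @label "shell-expiry"
  # Shell instances are removed six hours after creation.
  @ttl_seconds 6 * 60 * 60

  # Labels to stamp on a new shell pod, given the current Unix time in seconds.
  def labels(now_unix) when is_integer(now_unix) do
    %{@label => Integer.to_string(now_unix + @ttl_seconds)}
  end

  # True once a pod's declared expiry is at or before `now_unix`.
  def expired?(labels, now_unix) when is_map(labels) and is_integer(now_unix) do
    with {:ok, value} <- Map.fetch(labels, @label),
         {expiry, ""} <- Integer.parse(value) do
      expiry <= now_unix
    else
      # Missing or malformed label: leave the pod alone rather than guess.
      _ -> false
    end
  end
end

labels = ShellExpiry.labels(1_700_000_000)
ShellExpiry.expired?(labels, 1_700_000_000)  # => false
ShellExpiry.expired?(labels, 1_700_021_600)  # => true
```

Storing an absolute deadline rather than a creation time keeps the check stateless: the operator only needs the label and the current clock to decide whether a pod is due for shutdown.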

The overall design isn’t especially complex, but the devil is always in the details.

Handling the Kubernetes pods/exec API

The first problem we had to solve was figuring out how the Kubernetes pod exec API works. Our server was going to sit between the browser client and a Kubernetes pod, handling authentication and authorization as well as pod recreation logic. That meant we needed to proxy the format the exec API exposes and convert it into Elixir Phoenix channel WebSocket messages.

As it turns out, the API is poorly documented but actually pretty simple to use. Since the Kubernetes documentation has no details on it, we reverse engineered its usage by digging into the code for the official Kubernetes dashboard.

You simply connect over wss to a path like:

/api/v1/namespaces/{namespace}/pods/{name}/exec

and provide any arguments as query parameters. The API then exposes five channels, all carried as binary messages prefixed with the integers 0 to 4:

- 0 is the channel for stdin.

- 1 to 3 are output channels: stdout, stderr, and an error channel that reports the command’s final status.

- 4 carries a JSON message used to resize the terminal screen.
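To make the URL shape concrete, here is a minimal sketch of building an exec URL. It assumes the query parameters the exec endpoint takes (one `command` entry per argv element, plus `stdin`, `stdout`, `stderr`, and `tty` flags); the `ExecUrl` module and the example host are hypothetical, not the `exec_url/1` helper used in our code, and authentication is left out entirely.

```elixir
defmodule ExecUrl do
  # Builds a pod exec WebSocket URL. `base` is the API server's WebSocket origin
  # (hypothetical here). Each element of `argv` becomes its own `command` query
  # parameter, so the command is passed as an argv list rather than a shell string.
  def build(base, namespace, pod, argv) when is_list(argv) do
    query =
      Enum.map(argv, &{"command", &1}) ++
        [{"stdin", "true"}, {"stdout", "true"}, {"stderr", "true"}, {"tty", "true"}]

    "#{base}/api/v1/namespaces/#{namespace}/pods/#{pod}/exec?" <> URI.encode_query(query)
  end
end

ExecUrl.build("wss://k8s.example.com", "shells", "shell-abc", ["sh", "-c", "ls"])
# => "wss://k8s.example.com/api/v1/namespaces/shells/pods/shell-abc/exec?command=sh&command=-c&command=ls&stdin=true&stdout=true&stderr=true&tty=true"
```

In practice the client also has to authenticate and request one of the `channel.k8s.io` subprotocols (for example `v4.channel.k8s.io`) in the `Sec-WebSocket-Protocol` header; the single-byte channel prefixes come from that subprotocol.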

We handled the channel prefixes elegantly with Elixir binary pattern matching:

```elixir
# Channel 0: forward input to the shell's stdin.
def command(client, message) do
  WebSockex.send_frame(client, {:binary, <<0>> <> message})
end

# Channel 4: send a JSON resize message (Kubernetes expects capitalized Width/Height keys).
def resize(client, cols, rows) do
  resize = Jason.encode!(%{Width: cols, Height: rows})
  WebSockex.send_frame(client, {:binary, <<4>> <> resize})
end

# Channels 1-3 (stdout, stderr, error): strip the prefix byte and forward the payload.
# send_frame/2 (not shown) relays the data to the owning channel process.
defp deliver_frame(<<channel, frame::binary>>, pid) when channel in 1..3,
  do: send_frame(pid, frame)

# Anything else is forwarded as-is.
defp deliver_frame(frame, pid), do: send_frame(pid, frame)
```

The Elixir ecosystem also has WebSockex, a fairly simple GenServer-style WebSocket client, which we used to establish a resilient connection to the k8s API. We linked the WebSocket process to our channel process so that everything is properly restarted if we lose the connection to k8s.

All the code that manages the pod exec WebSocket can be found here, and encapsulating it in that process let us keep the channel that shuttles stdin and stdout to and from the browser pretty thin:

```elixir
defmodule RtcWeb.ShellChannel do
  use RtcWeb, :channel

  alias Core.Services.{Shell.Pods, Shell}
  alias Core.Shell.Client
  alias Core.Schema.CloudShell

  require Logger

  # Defer the connection so the join reply isn't blocked on Kubernetes.
  def join("shells:me", _, socket) do
    send(self(), :connect)
    {:ok, socket}
  end

  # Look up the user's shell, set it up, and open the pod exec WebSocket.
  def handle_info(:connect, socket) do
    with %CloudShell{pod_name: name} = shell <- Shell.get_shell(socket.assigns.user.id),
         {:ok, _} <- Client.setup(shell),
         url <- Pods.PodExec.exec_url(name),
         {:ok, pid} <- Pods.PodExec.start_link(url, self()) do
      {:noreply, assign(socket, :wss_pid, pid)}
    else
      err ->
        Logger.info("failed to exec pod with #{inspect(err)}")
        {:stop, {:shutdown, :failed_exec}, socket}
    end
  end

  # Output from the pod: base64-encode it and push it to the browser.
  def handle_info({:stdo, data}, socket) do
    push(socket, "stdo", %{message: Base.encode64(data)})
    {:noreply, socket}
  end

  # Input from the browser: forward it to the pod's stdin.
  def handle_in("command", %{"cmd" => cmd}, socket) do
    Pods.PodExec.command(socket.assigns.wss_pid, fmt_cmd(cmd))
    {:reply, :ok, socket}
  end

  # The browser terminal was resized: forward the new dimensions.
  def handle_in("resize", %{"width" => w, "height" => h}, socket) do
    Pods.PodExec.resize(socket.assigns.wss_pid, w, h)
    {:reply, :ok, socket}
  end

  defp fmt_cmd(cmd) when is_binary(cmd), do: cmd
  defp fmt_cmd(cmd) when is_list(cmd), do: Enum.join(cmd, " ")
end
```

Elixir turned out to be a great fit for this sort of product for two reasons. First, it made building a purpose-built WebSocket API a joy. Second, its binary handling let us deal with a goofy protocol easily and present it to our browser clients in a JavaScript-digestible format.
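A likely benefit of base64-encoding the output: the pod emits raw bytes, and a frame boundary can split a multibyte UTF-8 character, which can’t be placed in a JSON string as-is. A minimal sketch, using a hypothetical `StdoutFrames` module and only the standard library, that wraps chunks in the same `%{message: ...}` shape the channel pushes and reassembles them losslessly:

```elixir
defmodule StdoutFrames do
  # Same payload shape the channel pushes to the browser.
  def payload(chunk) when is_binary(chunk), do: %{message: Base.encode64(chunk)}

  # Decode each payload and concatenate the raw bytes in order.
  def reassemble(payloads) do
    payloads
    |> Enum.map(fn %{message: encoded} -> Base.decode64!(encoded) end)
    |> IO.iodata_to_binary()
  end
end

# "€" is three bytes in UTF-8; split it across two frames.
<<first::binary-size(2), rest::binary>> = "€"
String.valid?(first)  # => false
StdoutFrames.reassemble([StdoutFrames.payload(first), StdoutFrames.payload(rest)])  # => "€"
```

On the browser side, the decoded bytes are best handed to the terminal as bytes (xterm.js’s `write` accepts a `Uint8Array`) rather than decoded into strings chunk by chunk.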

Shell Resilience

One downside of Kubernetes pod exec is that if the WebSocket is terminated for any reason, the shell process you exec’d into the pod is terminated too. This meant that any time someone refreshed the page or navigated away in the browser, their session was destroyed entirely.

In common k8s dashboard use cases this is totally fine, because you’re likely running very ephemeral tasks like inspecting files or running a small Python script as a one-off.

In our case, however, the shell runs long-running Terraform and Helm commands, which, if terminated prematurely, can leave corrupted state and dangling resources. This was a huge showstopper and almost killed the project.

This is a common problem in other terminal workflows too, such as long-running SSH sessions, and the old-school solution is to use a tool like screen or tmux.

Thankfully, we found a solution: terminal multiplexing.

If you’re unfamiliar with terminal multiplexers, a multiplexer essentially runs your terminal session inside a small local server that keeps running even when the shell process attached to it terminates. Because of this, you can reconnect and continue your work without interruption.

To manage a single tmux session in our shell pods, we wrote a simple shell script:

```sh
#!/bin/sh
# Attach to one long-lived tmux session, creating it on first use.
session="workspace"

tmux start-server

# Create the session in the background if it doesn't exist yet.
if ! tmux has-session -t "$session" 2>/dev/null; then
  tmux new-session -d -c ~/workspace -s "$session" zsh
fi

# Attach to the session, detaching any other client (-d), such as a stale browser tab.
exec tmux attach-session -d -t "$session"
```

This ensures there’s a durable tmux session that the browser always attaches to when the WebSocket connects. In many ways it also makes our shell preferable to running locally, since a laptop’s network connection is far less reliable than the AWS datacenter our shell pods run in. It also enables a run-and-forget workflow for longer-running Terraform setups, especially the k8s cluster creation process.

I didn’t come into this project expecting to dust off my tmux skills, but it was fun to work tmux into our final solution.

React Implementation

The final piece of the puzzle we had to figure out was how to render a terminal in the browser.

There are plenty of tools in the wild that do this: GCP and Azure both have cloud shells, and the open-source Kubernetes dashboard embeds one in its pod viewer, so there was clearly prior art.

After digging a bit, our team came across the xterm.js project, a full terminal emulator written in browser-compatible JavaScript. There is also a React wrapper, xterm-for-react, which basically just mounts xterm into a parent HTML node and manages some setup state in a React-compatible way.

It took a bit of work to get a Phoenix channel reading from and writing to xterm.js, but ultimately it was fairly straightforward. If you’re curious about how it all fits together on the front end, you can view the source code for our terminal component here.

Wrapping Up

Overall, we had a ton of fun building this feature. It let us dig into an often-overlooked area of the Kubernetes API, which I’m honestly happy we got to explore. The project also took an unexpected turn with tmux and introduced us to a genuinely mind-blowing project in xterm.js (I’m shocked the community had the patience to write a full terminal emulator in JavaScript!).

We’re also happy to see how much it’s solving a real pain point for our users. It lets developers see the value of Plural quickly, without first going through the hassle of setting up a development environment.

Hopefully you learned a bit from this, especially if a browser-based shell would be useful in a project you’re building.

To learn more about how Plural works and how we are helping engineering teams across the world deploy open-source applications in a cloud production environment, reach out to me and the rest of the team.

Ready to effortlessly deploy and operate open-source applications in minutes? Get started with Plural today.

Join us on our Discord channel for questions, discussions, and to meet the rest of the community.
