Plural’s AI agents query, diagnose, and remediate across Kubernetes and Terraform — generating manifests, PRs, and workflows that keep your platform running smoothly.

AI has the potential to transform infrastructure operations, but applying it directly to Kubernetes fleets and cloud resources isn’t trivial. The risks are high, the data is fragmented, and the guardrails most enterprises need just aren’t there. Without the right foundations, AI can create more problems than it solves.

Infrastructure data is sensitive. You can’t risk exposing credentials, configs, or tenant information to the wrong systems. AI infrastructure needs a unified permission layer that enforces governance by default — or you’ll be stuck building security controls from scratch.

A misstep in infrastructure isn’t just noise — it can bring production down. Autonomous AI without oversight is a recipe for outages. That’s why AI for infrastructure has to work within GitOps and PR flows — every recommendation reviewable, every change auditable.
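As a rough illustration of what "every change reviewable" can mean in practice, the Python sketch below models an AI-proposed change that cannot be applied until a human approves it. It is not Plural’s implementation. The `ProposedChange` class, its fields, and the single-approval default are assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ProposedChange:
    """Hypothetical stand-in for an AI-generated pull request."""
    title: str
    diff: str
    approvals: set[str] = field(default_factory=set)
    applied: bool = False

    def approve(self, reviewer: str) -> None:
        # Record a human reviewer's sign-off.
        self.approvals.add(reviewer)

    def apply(self, required_approvals: int = 1) -> None:
        # Refuse to act until enough humans have approved.
        if len(self.approvals) < required_approvals:
            raise PermissionError(
                f"'{self.title}' needs {required_approvals} approval(s), "
                f"has {len(self.approvals)}"
            )
        # A real system would merge the PR here and let GitOps sync it.
        self.applied = True


change = ProposedChange(
    title="Raise memory limit for orders-api",
    diff="-  memory: 256Mi\n+  memory: 512Mi",
)

blocked = False
try:
    change.apply()          # no approvals yet, so this is rejected
except PermissionError:
    blocked = True

change.approve("alice")     # a human reviews the diff and signs off
change.apply()              # now the change is allowed through
```

The point of the design is that the AI only ever produces a proposal. The approval check sits between the recommendation and production.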

AI is only as good as the context it has. With infrastructure, that context lives across Terraform state, Kubernetes APIs, and constantly changing cloud metadata. Without a retrieval-augmented generation (RAG) layer purpose-built for infra, your AI won’t know where to act — or worse, it’ll act on bad data.
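To make the retrieval idea concrete, here is a minimal Python sketch, not Plural’s implementation. It assumes infrastructure facts have already been flattened into text records, and it uses plain keyword overlap as a stand-in for the embedding search a real RAG layer would likely use. The `ContextRecord` type, the `retrieve` function, the five-minute freshness cutoff, and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ContextRecord:
    source: str        # illustrative label, e.g. "terraform" or "kubernetes"
    text: str          # flattened description of the resource
    age_seconds: int   # how long ago the record was collected


def retrieve(query: str, records: list[ContextRecord],
             max_age_seconds: int = 300, top_k: int = 2) -> list[ContextRecord]:
    """Return fresh records that share the most words with the query."""
    query_words = set(query.lower().split())
    # Drop stale snapshots first so the model never sees outdated state.
    fresh = [r for r in records if r.age_seconds <= max_age_seconds]
    scored = [(len(query_words & set(r.text.lower().split())), r) for r in fresh]
    # Keep only records with at least one shared word, best match first.
    ranked = sorted((s for s in scored if s[0] > 0),
                    key=lambda s: s[0], reverse=True)
    return [r for _, r in ranked[:top_k]]


records = [
    ContextRecord("terraform",
                  "aws_db_instance orders-db instance class db.t3.medium", 60),
    ContextRecord("kubernetes",
                  "deployment orders-api crashloopbackoff cannot reach orders-db", 30),
    ContextRecord("kubernetes",
                  "deployment orders-api healthy", 86400),  # stale snapshot
]

hits = retrieve("why is orders-api failing to reach orders-db", records)
```

Here the day-old "healthy" record is filtered out before ranking, which is the "bad data" case the paragraph above warns about.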

Platform teams need more than suggestions — they need to trust the AI’s context, recommendations, and limits. Without transparency into how recommendations are made, every change risks introducing errors, slowing adoption, and eroding trust.

Plural is the AI-native control plane built for enterprise platform teams. We combine governance, human-in-the-loop workflows, and real-time infrastructure context into one system — so you can safely apply AI to the hardest problems in Kubernetes and cloud management.

Detect anomalies across Terraform state, the Kubernetes API, and cluster metadata with AI-powered diagnostics.

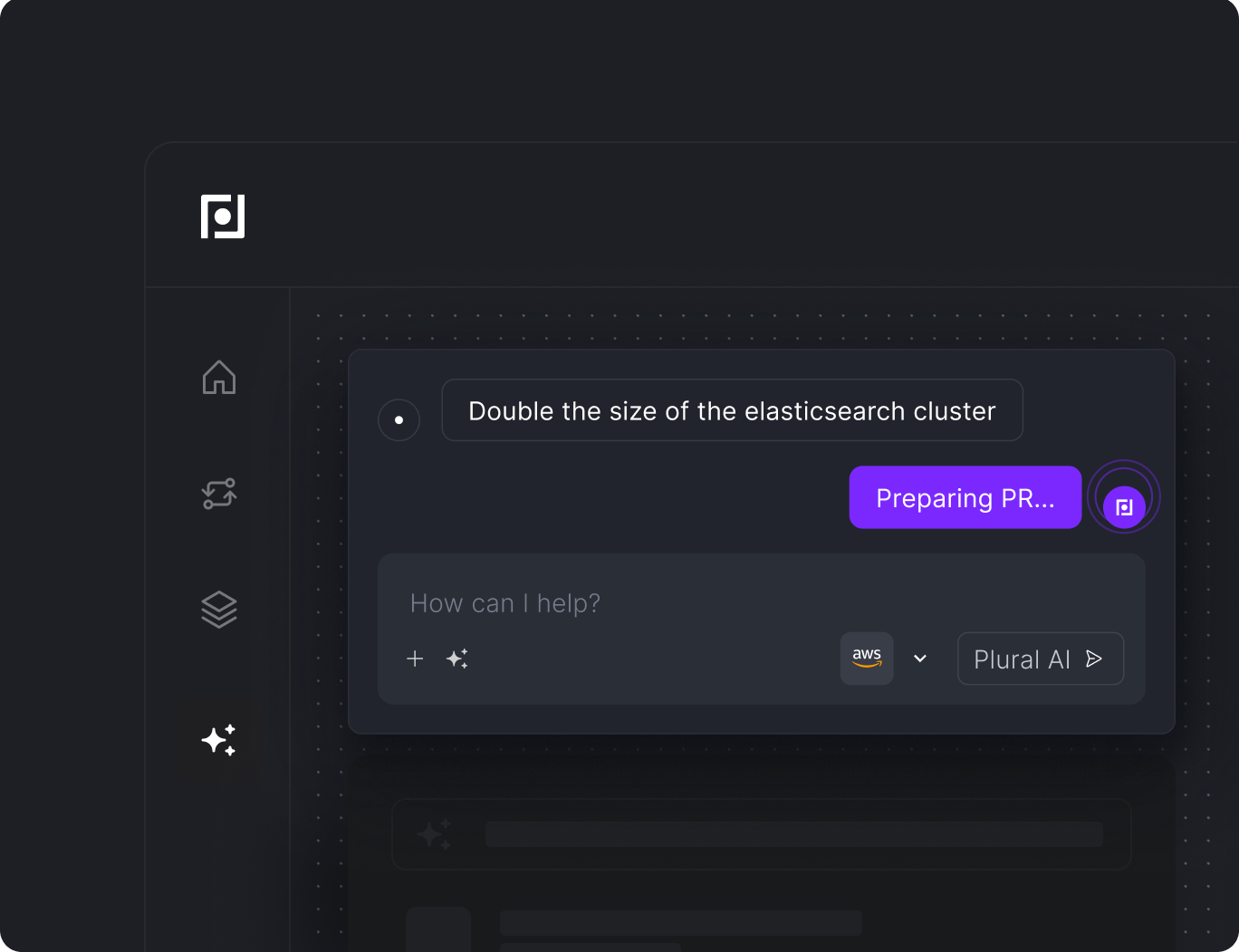

Automatically generate pull requests to remediate issues, keeping humans in the loop for safe, auditable changes.

Connect context across your fleet with Plural’s semantic layer, powering richer queries and autonomous workflows.

Reduce manual toil with workflows that investigate, explain, and resolve problems in parallel.

Intelligent AI investigation of failing resources when alerts fire.

Get detailed root cause analysis and impact assessment to reduce manual triage.

Supporting evidence showing exactly how issues were diagnosed.

Proactive detection of potential issues before they impact production.

One-click AI-generated fixes delivered through pull requests.

Ask questions about your infrastructure in plain English instead of digging through Terraform, YAML or consoles.

Context-aware responses based on your environment.

Ability to explain complex Kubernetes concepts.

Deep integration with your cluster data.

Instant answers to troubleshooting questions.

Continuously monitor resource usage across nodes, workloads, and clusters.

Identify over-provisioned deployments and underutilized resources.

Get AI recommendations to right-size memory and CPU requests.

AI-generated pull requests to apply cost-saving changes.
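As a worked example of right-sizing, the Python sketch below derives a CPU request from observed usage. It is an illustration under stated assumptions, not Plural’s recommendation algorithm: it takes the nearest-rank 95th percentile of usage samples and adds 20% headroom. The function name, the headroom factor, the "more than twice the recommendation" threshold, and the sample values are all hypothetical.

```python
import math


def recommend_request(usage_samples: list[float], headroom: float = 1.2) -> float:
    """Nearest-rank 95th percentile of observed usage, scaled by headroom."""
    if not usage_samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_samples)
    rank = math.ceil(0.95 * len(ordered))  # nearest-rank method, 1-indexed
    return ordered[rank - 1] * headroom


# Observed CPU usage for one deployment, in millicores (made-up numbers).
cpu_millicores = [110, 120, 125, 130, 140, 150, 155, 160, 170, 180]
current_request = 1000

recommended = recommend_request(cpu_millicores)
# Flag the workload when its request is more than double the recommendation.
over_provisioned = current_request > 2 * recommended
```

With these samples the peak observed usage is 180 millicores against a 1000 millicore request, so the workload is flagged. The change itself would still be delivered as a pull request for review.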

Support for major AI providers, including OpenAI, Anthropic, Amazon Bedrock, and Google Vertex AI, in a bring-your-own-LLM model.

Full integration with existing RBAC and permissions.

Complete audit trail of all AI interactions.

Data remains within your environment.

Agent-based architecture with egress-only communication.
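The RBAC and audit-trail points above can be sketched together: filter what the model is allowed to see by the caller’s permissions, and record every interaction. The Python example below is a simplified illustration, not Plural’s implementation. The `AuditedContext` class, the namespace-based policy shape, and the log fields are assumptions for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditedContext:
    # user -> namespaces they may read (hypothetical policy shape)
    allowed_namespaces: dict[str, set[str]]
    audit_log: list[dict] = field(default_factory=list)

    def context_for(self, user: str, question: str,
                    resources: list[dict]) -> list[dict]:
        """Return only the resources this user may see, and log the request."""
        allowed = self.allowed_namespaces.get(user, set())
        visible = [r for r in resources if r["namespace"] in allowed]
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "question": question,
            "resources_shared": [r["name"] for r in visible],
            "resources_withheld": len(resources) - len(visible),
        })
        return visible


ctx = AuditedContext(allowed_namespaces={"alice": {"payments"}})
resources = [
    {"name": "orders-api", "namespace": "orders"},
    {"name": "billing-worker", "namespace": "payments"},
]

# Only resources alice can already read are passed on as model context.
visible = ctx.context_for("alice", "Why is billing-worker restarting?", resources)
```

Filtering before the prompt is built means the model never receives data the user could not already read, and the log entry records what was shared and how much was withheld.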