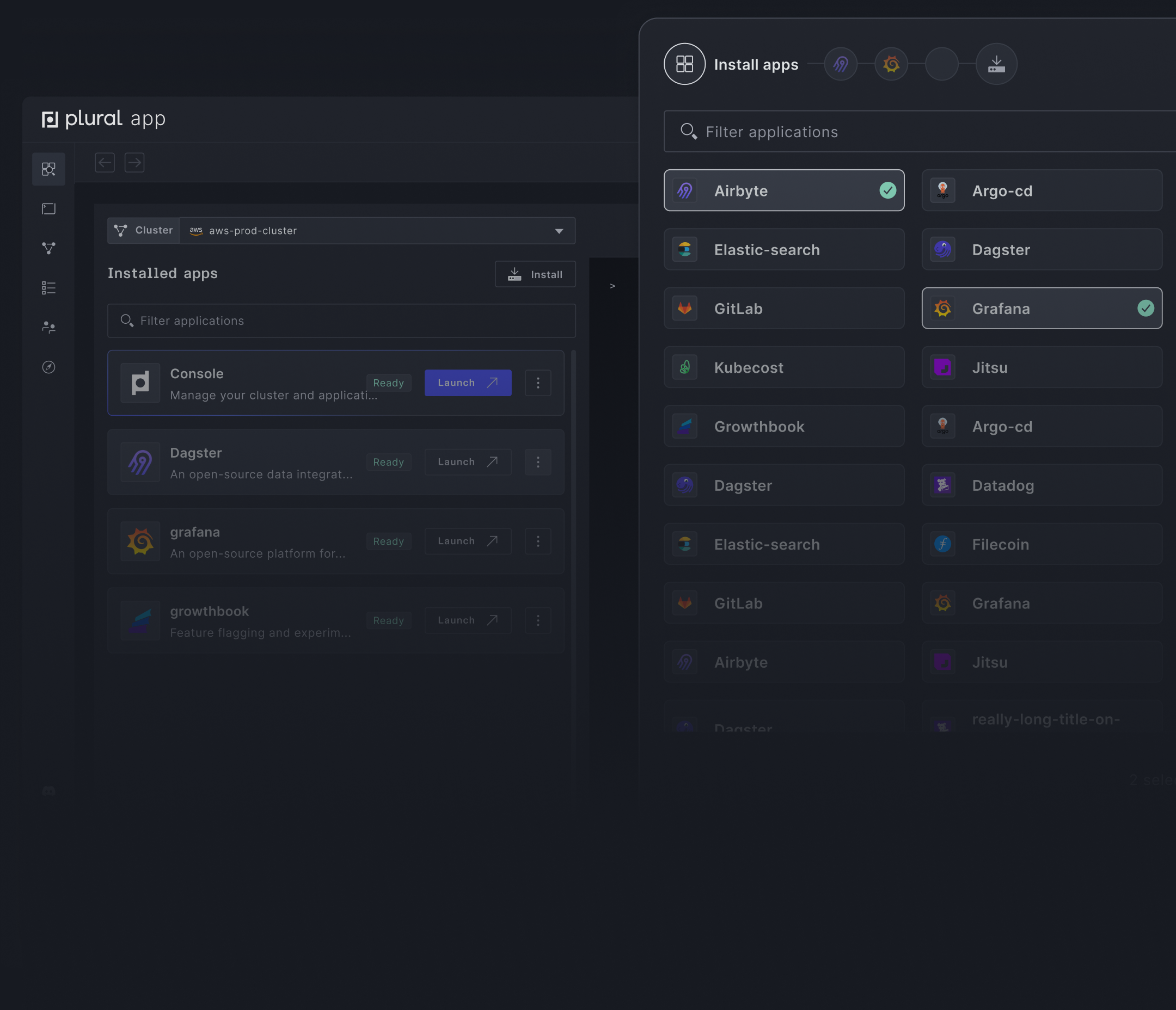

Select from 90+ open-source applications

Get any stack you want running in minutes, and never think about upgrades again.

Kubecost is a cost monitoring and optimization solution for teams running Kubernetes, helping them reduce Kubernetes spend in the cloud.

While cost optimization with Kubecost is quick and easy, deploying and setting up the application itself is complex and requires specific knowledge of cloud infrastructure, networking, and Kubernetes.

Plural helps you deploy and manage the lifecycle of open-source applications on Kubernetes. Our platform combines the scalability and observability of managed SaaS offerings with the data security, governance, and compliance benefits of self-hosting Kubecost.

If you need more than just Kubecost, take a look at Plural’s DevOps stack, a pre-integrated deployment of Kubecost, Grafana, and Argo CD, or look for other open-source DevOps tools in our marketplace of curated applications to leapfrog complex deployments and get started quickly.

plural bundle install kubecost kubecost-aws

plural build

plural deploy --commit "deploying kubecost"
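
The three commands above can be chained in a small wrapper script so that a failure in one step stops the rest. This is a minimal sketch, not an official Plural script: the `run` helper, the `deploy_kubecost` function, and the `DRY_RUN` and `BUNDLE` variables are hypothetical names introduced here for illustration. It defaults to a dry run that only prints each command. The only bundle name used is the `kubecost-aws` bundle shown above; bundle names for other providers are not confirmed here.

```shell
#!/usr/bin/env bash
# Sketch only: runs the three plural commands in order and stops on the first failure.
set -euo pipefail

# Bundle name taken from the commands above; names for other providers are not verified.
BUNDLE="${BUNDLE:-kubecost-aws}"
# DRY_RUN is a switch local to this script (not a plural flag). 1 = print only.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

deploy_kubecost() {
  run plural bundle install kubecost "$BUNDLE"
  run plural build
  run plural deploy --commit "deploying kubecost"
}

deploy_kubecost
```

Run it as-is to review the commands, then set `DRY_RUN=0` once the Plural CLI is installed and configured for your cloud account.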

We make it easy to securely deploy and manage open-source applications in your cloud.

You control everything. No need to share your cloud account, keys, or data.

Built on Kubernetes and using standard infrastructure as code with Terraform and Helm.

Interactive runbooks, dashboards, and Kubernetes API visualizers give you an easy-to-use toolset to manage application operations.

Build your custom stack with more than 90 apps in the Plural Marketplace.

The team at Cayena needed a way to quickly and consistently deploy open-source applications like Airbyte into production environments. For Cayena, the priority is to deliver fast, powerful applications on Kubernetes without having to be Kubernetes specialists.

Before using Plural, Cayena came close to hiring a Kubernetes specialist to handle the deployment and monitoring of applications on Kubernetes. Although that hire didn’t work out, Cayena’s data applications are running well thanks to Plural, which Oriel compared to having a DevOps specialist on board.