A Practical Guide to Kubernetes Encryption at Rest

Get clear, actionable steps for configuring encryption at rest in Kubernetes to protect secrets, meet compliance requirements, and secure your cluster’s sensitive data.

A common but risky misconception is that Kubernetes Secrets are secure by default. In reality, they’re only Base64 encoded. That means there's no cryptographic protection. Anyone with access to etcd can easily decode and read your sensitive data.

To safeguard your cluster, you need encryption at rest in Kubernetes. This feature encrypts Secrets and other resources before writing them to etcd, converting plaintext into unreadable ciphertext. It’s a foundational security control that mitigates the risk of credential leaks or data exfiltration.

This guide covers the complete workflow: defining an EncryptionConfiguration, integrating with a Key Management Service (KMS), and implementing key rotation strategies to maintain robust, long-term data security in your cluster.

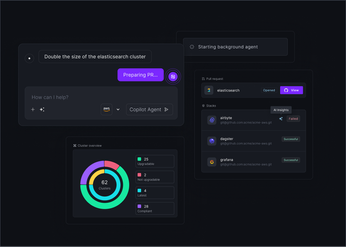

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key takeaways:

- Protect data where it lives: Kubernetes Secrets are not encrypted by default—they are only Base64 encoded. You must explicitly enable at-rest encryption to secure sensitive data in etcd against direct storage access and meet compliance mandates.

- Use a KMS for robust key management: Configuration is done via an EncryptionConfiguration object passed to the kube-apiserver. For production environments, using the kms provider to integrate with a service like AWS KMS or Google Cloud KMS is the recommended approach, as it separates your keys from the cluster.

- Manage encryption at scale with GitOps: Manually managing encryption configurations, key rotation, and RBAC policies across multiple clusters is error-prone. Adopting a GitOps workflow allows you to treat security configurations as code, ensuring consistent, auditable, and automated enforcement across your entire fleet.

What Is Encryption at Rest in Kubernetes

In Kubernetes, encryption at rest protects sensitive API objects (such as Secrets) by encrypting them before they are written to etcd. When a client requests one of these objects, the kube-apiserver transparently decrypts the data in memory before returning it. This ensures that even if an attacker gains access to etcd or its backups, they cannot read the underlying sensitive information.

By default, Secrets in Kubernetes are written to etcd without any encryption. The Base64 encoding you see in Secret manifests is merely an encoding format, not encryption, and offers no real security. Enabling at-rest encryption turns the stored data into unreadable ciphertext, significantly strengthening your cluster’s protection against credential exposure and data theft.

The encryption and decryption processes are invisible to end users interacting with the Kubernetes API but provide a crucial safeguard against unauthorized access to cluster data.

The Layers of Data Protection

Encryption at rest is part of a defense-in-depth strategy, not a complete solution on its own. It complements lower-level protections, such as full-disk encryption for etcd storage volumes or host filesystems.

System-level encryption (e.g., encrypting the disk or volume) prevents data leaks from physical storage compromise, such as a stolen or improperly decommissioned drive. However, it doesn’t protect against logical access: while the host is running, the volume is unlocked, so anyone who can read etcd’s data files, query etcd directly, or obtain an etcd snapshot sees plaintext. Kubernetes’ application-level encryption addresses this gap by ensuring the data written to etcd remains encrypted, even if the storage layer is compromised.

For a resilient setup, you should combine both:

- Disk or volume encryption at the system level.

- Application-layer encryption using Kubernetes’ built-in encryption-at-rest feature.

Key Components and Architecture

The foundation of Kubernetes encryption at rest is the EncryptionConfiguration object—a YAML configuration file supplied to the kube-apiserver via the --encryption-provider-config flag at startup. This file specifies:

- Which resources to encrypt (e.g., secrets, configmaps).

- Which encryption providers to use.

Kubernetes supports multiple providers:

- aescbc for locally managed encryption keys.

- kms for integration with external Key Management Services like AWS KMS, Google Cloud KMS, or Azure Key Vault.

When configured, all writes for specified resources are automatically encrypted before being persisted to etcd. Reads are decrypted on the fly, with the kube-apiserver acting as the central encryption and decryption point—ensuring all cryptographic operations occur in a controlled and auditable manner.

Clearing Up Common Misconceptions

Many developers assume Kubernetes Secrets are encrypted by default—they aren’t. Secrets are only Base64 encoded, which means anyone with read access to etcd can trivially decode them. Encryption at rest must be explicitly enabled to provide real cryptographic protection.

It’s also important to understand what encryption at rest does not protect against:

- It does not secure data in transit—use TLS for that.

- It does not protect data in use or against users with legitimate API access. If a user can read a Secret via the API, the kube-apiserver decrypts it before returning it.

Therefore, encryption at rest should be paired with strong RBAC policies, network security controls, and restricted access to etcd. Together, these measures create a layered defense that minimizes both accidental exposure and malicious compromise of sensitive data.

Why Encrypt Data at Rest

Encrypting data at rest is a non-negotiable security requirement for production-grade Kubernetes environments. While network policies, authentication, and RBAC manage access to live resources, they do not protect data stored on disk or in backups. If an attacker gains access to your etcd datastore, persistent volumes, or backup files, they can extract sensitive information unless it’s encrypted.

At-rest encryption ensures that even if your storage layer is compromised, the underlying data—such as Kubernetes Secrets, ConfigMaps, or application credentials—remains unreadable. It acts as the final line of defense against data theft, privilege escalation, and unauthorized disclosure.

Mitigate Security Risks

The core purpose of encryption at rest is to protect sensitive information from unauthorized access, even in breach scenarios. etcd is the single source of truth for your cluster’s state—it contains configuration data, workload definitions, and all Kubernetes Secrets.

If an attacker gains access to the disk or backups storing etcd data, they effectively gain visibility into your cluster’s entire configuration and credentials. Encryption ensures that this data is cryptographically protected, rendering it useless without the decryption keys.

This means that in the event of a stolen backup, a compromised volume, or physical drive loss, critical assets like:

- API tokens

- Database passwords

- TLS certificates

remain secure and inaccessible.

Meet Compliance Requirements

Encryption at rest is also a compliance imperative. Frameworks and regulations such as PCI DSS, HIPAA, SOC 2, and FedRAMP require or strongly expect cryptographic protection of stored sensitive data. Implementing etcd encryption helps you align with these frameworks and demonstrate a strong security posture during audits.

More importantly, it ensures that if other layers of defense—such as access controls or network isolation—are breached, the data remains unintelligible to unauthorized users.

At scale, maintaining compliance across multiple clusters can become operationally complex. Automating encryption configuration and enforcement through policy management platforms like Plural helps teams maintain consistency and compliance without manual overhead.

Protect Data in Production Scenarios

Encryption at rest doesn’t just defend against attackers—it also protects data through the entire operational lifecycle of your infrastructure.

When a persistent volume is detached, replaced, or a physical disk is decommissioned, residual data can remain on the storage medium. Without encryption, this data can be recovered and exploited. Similarly, cluster snapshots and etcd backups stored in cloud object storage (like Amazon S3) can become attack vectors if a misconfiguration exposes them publicly.

With encryption at rest enabled, all stored cluster state and secrets remain encrypted—even in backup files or deprovisioned volumes. This ensures your Kubernetes environment maintains confidentiality and integrity throughout provisioning, operation, backup, and decommissioning phases.

Where Kubernetes Stores Data

To implement encryption at rest effectively, it’s essential to understand where Kubernetes stores data and how each storage layer contributes to the cluster’s overall security posture. Kubernetes data is distributed across multiple components—each with distinct risks and protection mechanisms. The main areas are:

- The etcd datastore, which maintains the cluster’s entire state.

- Persistent Volumes (PVs) used by workloads to store runtime data.

- Kubernetes Secrets, which contain credentials and other sensitive configuration values.

Each of these must be secured individually to achieve complete data protection.

Managing encryption, RBAC, and storage policies across multiple clusters quickly becomes operationally complex. Plural simplifies this by letting you define and enforce these configurations centrally. With its GitOps-based automation, you can apply consistent encryption settings and policies fleet-wide—making security a built-in, auditable part of your infrastructure management instead of a manual afterthought.

etcd: The Cluster’s State Database

etcd functions as the source of truth for Kubernetes. It stores everything about your cluster: node states, deployments, configurations, and especially Secrets. Every change made through the Kubernetes API (for example, with kubectl apply or kubectl delete) is persisted to etcd, so etcd always reflects the current cluster state.

Because etcd contains the full configuration of your Kubernetes environment, it is a high-value target. Anyone with unauthorized access to etcd can read Secrets, impersonate users, or even take control of the entire cluster.

Encrypting etcd data at rest ensures that even if the datastore or its backups are compromised, the attacker cannot extract usable information. This makes etcd encryption one of the most critical steps in securing your cluster’s control plane.

Persistent Volumes and Storage Classes

While etcd holds cluster metadata, application data—such as database contents, logs, and user-generated files—resides in Persistent Volumes (PVs). These PVs are provisioned from a storage class, which defines how and where storage is allocated (e.g., AWS EBS, Google Persistent Disk, or an on-prem block device).

Since PVs store data outside the Kubernetes control plane, encryption for them must occur at the storage backend level. Most cloud providers offer transparent disk encryption, although some enable it by default while others require you to turn it on per volume, storage class, or account. Make sure it is enabled for production workloads.
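As an illustration, on AWS with the EBS CSI driver, encryption can be requested per StorageClass. The sketch below assumes the aws-ebs-csi-driver is installed; the class name and the KMS key ARN are hypothetical placeholders, and other storage backends use different parameters.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: encrypted-gp3            # hypothetical name
provisioner: ebs.csi.aws.com     # assumes the AWS EBS CSI driver
parameters:
  type: gp3
  # Ask the driver to create encrypted EBS volumes
  encrypted: "true"
  # Optional: use a customer-managed KMS key instead of the default EBS key
  # (hypothetical ARN placeholder)
  kmsKeyId: arn:aws:kms:<region>:<account-id>:key/<key-id>
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
```

PersistentVolumeClaims that set storageClassName: encrypted-gp3 then receive encrypted volumes. This is configured entirely at the storage layer and is independent of the kube-apiserver's EncryptionConfiguration.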

For on-prem environments, disk-level encryption solutions like LUKS or Ceph encryption should be used. The key takeaway is that protecting application data requires securing both the etcd layer and the persistent storage layer—each serving different parts of the data lifecycle.

Kubernetes Secrets Management

Kubernetes uses Secrets to manage credentials, tokens, and certificates securely within the cluster. These objects decouple sensitive data from manifests and container images, allowing for safer configuration management.

However, by default, Secrets are stored in etcd without encryption. Their values are only Base64 encoded in manifests and API responses, which provides no cryptographic protection, and anyone who gains read access to etcd can trivially read them.
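To see why Base64 offers no protection, consider a minimal Secret manifest like the one below. The name and value are illustrative and match the test Secret used later in this guide. The encoded string decodes back to the original password with a standard tool such as base64 -d, with no key required.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: test-secret
  namespace: default
type: Opaque
data:
  # Base64 of "MySecurePass": an encoding, not encryption.
  # `echo TXlTZWN1cmVQYXNz | base64 -d` prints MySecurePass
  password: TXlTZWN1cmVQYXNz
```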

Enabling encryption at rest ensures that Secrets are encrypted before being written to etcd. This means that even if the datastore or backups are compromised, their contents remain unreadable without the appropriate keys, making at-rest encryption indispensable for protecting credentials and other sensitive data.

Available Storage Encryption Methods

Kubernetes provides several encryption mechanisms via the EncryptionConfiguration file passed to the kube-apiserver. This configuration specifies which resources (e.g., Secrets, ConfigMaps) should be encrypted and which provider should handle the encryption.

Supported providers include:

- aescbc – Uses AES-CBC with PKCS#7 padding.

- aesgcm – Uses AES-GCM with a random nonce for each encryption operation.

- kms – Integrates Kubernetes with an external Key Management Service (e.g., AWS KMS, Google Cloud KMS, Azure Key Vault).

For production environments, KMS integration is strongly recommended. It offloads key storage, rotation, and access control to a managed service designed for secure cryptographic operations, ensuring both reliability and compliance with security standards.

By combining encryption for etcd, secure persistent storage, and strong secret management practices, you create a defense-in-depth architecture that protects your Kubernetes data across every layer of the stack.

How to Configure Encryption at Rest

Configuring encryption at rest in Kubernetes is a deliberate, high-impact security process that ensures sensitive cluster data stored in etcd remains protected. The process involves defining a dedicated configuration file, choosing an encryption provider, and updating your API server configuration to apply it. When properly set up, even if an attacker gains read access to etcd or its backups, the stored Secrets and configuration data remain unreadable.

Since the configuration affects the kube-apiserver, it must be done carefully and consistently. For platform teams managing multiple clusters, maintaining uniform encryption settings across environments can be complex. This is where Plural’s GitOps-based approach provides significant value—it lets you define encryption settings as code and roll them out automatically across your entire fleet. The result is consistent enforcement, reduced human error, and an auditable record of all changes.

Create the EncryptionConfiguration Object

The foundation of Kubernetes at-rest encryption is the EncryptionConfiguration object. This YAML file tells the kube-apiserver which resources to encrypt (typically secrets) and which cryptographic providers to use.

A basic configuration might look like this:

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: <base64-encoded-key>
      - identity: {}
```

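This configuration encrypts Secrets with the aescbc provider as they are written. The trailing identity provider lets the kube-apiserver keep reading Secrets that were stored in plaintext before encryption was enabled. Store the file securely on each control plane node, for example at /etc/kubernetes/pki/encryption-config.yaml, with restricted permissions so only the kube-apiserver can read it.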

Once created, this file becomes the blueprint for how etcd stores your cluster’s sensitive data.

Select an Encryption Provider

Within your EncryptionConfiguration, you define one or more encryption providers. Kubernetes supports multiple built-in options:

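- aescbc – AES-CBC with PKCS#7 padding. The upstream Kubernetes documentation no longer recommends it because CBC mode is vulnerable to padding oracle attacks.

- aesgcm – AES-GCM with a random nonce for each encryption operation. Because nonces are random, keys must be rotated frequently (the upstream documentation advises every 200,000 writes).

- secretbox – Uses XSalsa20 and Poly1305.

- kms – Integrates with an external Key Management Service (KMS).

- identity – Performs no encryption; used to read data stored before encryption was enabled.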

The first provider in the list handles encryption for new data, while any additional providers are used for decryption. This ordering allows for seamless key rotation—you can introduce a new key by placing its provider first, without disrupting existing data access.
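For example, the sketch below shows a cluster migrating Secrets and ConfigMaps from aescbc to secretbox. The key names and placeholder secrets are hypothetical. New writes use the first provider (secretbox), while the aescbc and identity entries remain only so that data written earlier can still be decrypted.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
      - configmaps
    providers:
      # First provider: encrypts every new write of the resources above
      - secretbox:
          keys:
            - name: key2
              # 32 random bytes, base64 encoded, generated for example with:
              #   head -c 32 /dev/urandom | base64
              secret: <base64-encoded-32-byte-key>
      # Older provider: kept so data written with aescbc can still be decrypted
      - aescbc:
          keys:
            - name: key1
              secret: <base64-encoded-key>
      # identity: lets the API server read objects stored before encryption
      - identity: {}
```

Once this configuration is live on every API server, rewriting existing objects moves them onto the first provider, after which the older entries can be removed.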

While local key providers are suitable for development or smaller clusters, KMS integration is the best choice for production, as it centralizes key management and improves compliance posture.

Integrate with a Key Management Service

Using a KMS provider offloads encryption key handling to a secure, external service such as AWS KMS, Google Cloud KMS, or Azure Key Vault. The master key (the key encryption key) never leaves that service, so it is never stored alongside your cluster data.

Your EncryptionConfiguration might define a KMS provider like this:

```yaml
- kms:
    name: awskms
    endpoint: unix:///var/run/kmsplugin/socket.sock
    cachesize: 1000
    timeout: 3s
```

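Here, the kube-apiserver communicates with a KMS plugin over a local UNIX socket, and the plugin talks to the external KMS. The provider uses envelope encryption: the kube-apiserver encrypts each object locally with a data encryption key (DEK) and sends only the DEK to the plugin, which encrypts (wraps) it with a key encryption key held in the KMS. The wrapped DEK is stored with the ciphertext in etcd and is unwrapped through the KMS when the object is read. This entry uses the KMS v1 format (no apiVersion field; cachesize applies only to v1), which is deprecated in recent Kubernetes releases in favor of KMS v2.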
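On clusters where KMS v2 is available (it became generally available in Kubernetes 1.29), a full configuration might look like the following sketch. The plugin name and socket path are carried over from the snippet above and are assumptions about how your plugin is deployed; cachesize is omitted because it does not apply to v2.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      # KMS v2: DEKs are wrapped by the plugin listening on this socket
      - kms:
          apiVersion: v2
          name: awskms                                     # must be unique per provider
          endpoint: unix:///var/run/kmsplugin/socket.sock  # assumed plugin socket path
          timeout: 3s
      # Allows reading Secrets stored before encryption was enabled
      - identity: {}
```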

With Plural, KMS integrations can be automated as part of your cluster provisioning workflow. Plural manages provider configurations and secret handling across environments, allowing teams to maintain secure, consistent setups at scale.

Step-by-Step Implementation and Validation

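Once your EncryptionConfiguration file is ready:

Place it on each control plane node, typically under /etc/kubernetes/pki/ (on kubeadm clusters this directory is already mounted into the kube-apiserver Pod, so no extra volume is needed).

Update the kube-apiserver manifest (found in /etc/kubernetes/manifests/kube-apiserver.yaml) to include:

```
--encryption-provider-config=/etc/kubernetes/pki/encryption-config.yaml
```

Save the manifest. The kubelet detects the change and restarts the kube-apiserver static Pod, which then loads the new encryption settings. Only data written from this point on is encrypted; existing Secrets remain unencrypted until they are rewritten, for example with kubectl get secrets --all-namespaces -o json | kubectl replace -f -.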
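For orientation, the sketch below shows where the flag sits in a kubeadm-style static Pod manifest. It is an excerpt, not a complete manifest: the image, the existing flags, probes, and other volumes are omitted, and the k8s-certs volume shown is the one kubeadm normally creates for /etc/kubernetes/pki.

```yaml
# Excerpt of /etc/kubernetes/manifests/kube-apiserver.yaml (kubeadm layout assumed)
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        # ...existing flags are left unchanged...
        - --encryption-provider-config=/etc/kubernetes/pki/encryption-config.yaml
      volumeMounts:
        # kubeadm already mounts the host's /etc/kubernetes/pki here,
        # so the encryption config is visible inside the container
        - name: k8s-certs
          mountPath: /etc/kubernetes/pki
          readOnly: true
  volumes:
    - name: k8s-certs
      hostPath:
        path: /etc/kubernetes/pki
        type: DirectoryOrCreate
```

If you keep the file in a different directory (the upstream documentation uses /etc/kubernetes/enc/, for example), add a matching hostPath volume and volumeMount so the kube-apiserver container can read it.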

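To confirm encryption is active:

Create a new Secret:

```shell
kubectl create secret generic test-secret --from-literal=password=MySecurePass
```

Retrieve its value directly from etcd using etcdctl (the certificate paths assume a kubeadm-managed etcd):

```shell
ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt \
  --key=/etc/kubernetes/pki/etcd/server.key get /registry/secrets/default/test-secret | hexdump -C
```

The stored value should begin with a prefix such as k8s:enc:aescbc:v1:key1: for the aescbc configuration above, or k8s:enc:kms:v1:awskms: for the KMS provider shown earlier (k8s:enc:kms:v2: with KMS v2), followed by ciphertext. If MySecurePass appears in plaintext, encryption is not in effect.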

Analyze the Performance Impact

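At-rest encryption adds some computational overhead to the kube-apiserver, since each read and write of encrypted data involves cryptographic operations. With local AES-based providers, this cost is small on modern CPUs with AES acceleration. With a KMS provider, calls to the external service also add latency; KMS v2 reduces this by reusing data encryption keys instead of calling the KMS on every write.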

The greater challenge is operational, not computational—maintaining secure key rotation, consistent configurations, and strict RBAC controls. Misconfigurations or over-permissive roles can expose your encryption keys or configuration files, undermining encryption entirely.

Using Plural’s management console, you can:

- Enforce centralized RBAC policies.

- Automate key rotation through GitOps workflows.

- Track all encryption configuration changes for auditability and compliance.

This ensures your encryption policies remain consistent, secure, and verifiable across all clusters—without increasing operational burden.

How to Manage Encryption Keys

Enabling encryption at rest is only the beginning—the real challenge lies in managing encryption keys securely and continuously. Effective key management ensures the long-term integrity and resilience of your encryption strategy by defining clear processes for key rotation, access control, backup, and recovery.

In Kubernetes, keys shouldn’t be treated as static configuration files but as dynamic, lifecycle-managed assets. Proper automation and governance are essential, especially when managing encryption across multiple clusters. With Plural, teams can centralize key operations through a GitOps-driven model—enabling secure, repeatable, and auditable key management at scale.

Implement a Key Rotation Strategy

Static keys create risk. If a key is ever compromised, every Secret it encrypts becomes vulnerable. Regular key rotation minimizes this exposure window and strengthens your overall security posture.

Kubernetes supports non-disruptive key rotation through the EncryptionConfiguration object. The process involves:

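- Adding the new key to the configuration as a secondary (non-first) entry and deploying it to all API servers, so every server can decrypt data written with it.

- Moving the new key to the first position and redeploying, so all new writes use it.

- Re-encrypting existing Secrets using the new key.

- Removing the old key after re-encryption completes.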
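A sketch of the configuration in the middle of a rotation, based on the aescbc example from earlier: key2 (a hypothetical new key) has been promoted to the first position, so all new writes use it, while key1 stays in the list so existing Secrets remain readable. The re-encryption command in the comments is the one used in the upstream Kubernetes documentation; key values are placeholders.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            # New primary key: encrypts every write from now on
            - name: key2
              secret: <base64-encoded-new-key>
            # Old key: kept only to decrypt Secrets not yet rewritten
            - name: key1
              secret: <base64-encoded-key>
      - identity: {}
# After this file is live on every API server, re-encrypt existing Secrets:
#   kubectl get secrets --all-namespaces -o json | kubectl replace -f -
# Then remove key1 and roll the configuration out again.
```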

While straightforward in principle, manually performing these steps across clusters can be tedious and error-prone. Automating key rotation through Plural’s GitOps workflows ensures that updates propagate consistently, safely, and with full version control. Every change is logged and traceable, enabling compliance and audit-readiness without downtime.

Use RBAC for Access Control

Encryption is only as strong as the permissions guarding it. Misconfigured Role-Based Access Control (RBAC) policies are among the most common causes of security breaches in Kubernetes environments.

To minimize risk:

- Restrict access to the EncryptionConfiguration file, and to the control plane hosts that store it, to a small group of administrators.

- Create read-only roles (get only, ideally limited to specific Secrets) for applications that need to consume Secrets but should not modify them.

- Prevent developers or workloads from accessing encryption keys directly.
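A minimal sketch of such a read-only grant, assuming a hypothetical application my-app that only needs one Secret named db-credentials. It allows get on that single Secret; list and watch are deliberately left out, because listing Secrets returns the values of every Secret in the namespace.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: read-db-credentials     # hypothetical
  namespace: my-app             # hypothetical namespace
rules:
  - apiGroups: [""]             # "" is the core API group, where Secrets live
    resources: ["secrets"]
    resourceNames: ["db-credentials"]   # hypothetical Secret name
    verbs: ["get"]              # read-only; no list/watch/update/delete
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-db-credentials
  namespace: my-app
subjects:
  - kind: ServiceAccount
    name: my-app                # hypothetical ServiceAccount used by the workload
    namespace: my-app
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: read-db-credentials
```

Pods that only mount a Secret as a volume or environment variable do not need a grant like this, since the kubelet fetches the Secret on their behalf; it is meant for workloads that read Secrets through the API.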

Plural integrates with your identity provider (IdP) to simplify this process. Through Plural’s unified dashboard, you can apply RBAC rules and group-based access controls across clusters, ensuring consistent and secure permissions aligned with your organization’s identity management policies.

Establish Backup and Recovery Procedures

Losing encryption keys is equivalent to losing your data—without them, encrypted Secrets cannot be decrypted. A reliable backup and recovery plan is therefore essential.

Best practices include:

- Back up your encryption keys or KMS credentials securely, ensuring they are encrypted at rest and in transit.

- Store backups separately from your main cluster and restrict access to them.

- Regularly test key recovery procedures as part of your disaster recovery plan.

Storing your EncryptionConfiguration in version control, as promoted by Plural’s GitOps model, provides an additional safeguard. It ensures that you have a versioned, auditable record of your encryption settings and can recover quickly from misconfiguration or accidental loss.

When using a KMS, focus on protecting access credentials and IAM policies—not the encryption keys themselves, which remain securely managed within the external KMS.

Integrate with Your Cloud Provider’s KMS

While managing raw encryption keys locally is possible, it introduces operational risk and scalability challenges. For production environments, the best practice is to use a cloud-native Key Management Service (KMS) such as:

- AWS KMS

- Google Cloud KMS

- Azure Key Vault

A KMS centralizes key creation, storage, and access control, often backed by Hardware Security Modules (HSMs) for tamper-resistant protection. These services also support envelope encryption, where your Data Encryption Keys (DEKs) are encrypted by master keys stored in the KMS—adding another layer of defense.

Plural streamlines KMS integration by codifying provider configurations and automating deployment across clusters. Using Plural’s GitOps-driven model, you can:

- Define KMS integrations once as declarative configuration.

- Apply them consistently to every environment.

- Enforce key rotation policies automatically.

This approach ensures a standardized, scalable, and compliant encryption management workflow—protecting your Kubernetes data at every stage of its lifecycle.

Best Practices for a Secure Implementation

Enabling encryption at rest in Kubernetes is a major milestone—but it’s only one part of a comprehensive security strategy. Maintaining long-term data security requires continuous monitoring, strict configuration management, and well-defined operational procedures. Implementing the following best practices helps ensure your cluster remains resilient against misconfigurations, privilege misuse, and evolving threats.

Set Up Audit Logging for Compliance

Audit logging is indispensable for both security visibility and regulatory compliance. Once enabled on the kube-apiserver with an audit policy, Kubernetes audit logs can capture every request made to the API server, including operations on Secrets, ConfigMaps, and other sensitive objects.

You should configure your audit policy to:

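- Log all create, update, patch, delete, get, list, and watch operations on Secrets and configuration data.

- Record user identity, namespace, and timestamp metadata, but not request or response bodies, which for Secrets would copy the secret values into the log.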

- Forward logs to a centralized observability or SIEM platform for retention and analysis.
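A minimal audit policy along these lines might look like the sketch below. It would be passed to the kube-apiserver with --audit-policy-file, together with a backend such as --audit-log-path. Dropping all other requests is a simplification for illustration, not a recommendation.

```yaml
apiVersion: audit.k8s.io/v1
kind: Policy
# Do not emit a separate event when a request is first received
omitStages:
  - "RequestReceived"
rules:
  # Secrets and ConfigMaps: record who did what, where, and when (Metadata),
  # but never request/response bodies, which would contain the secret values
  - level: Metadata
    resources:
      - group: ""
        resources: ["secrets", "configmaps"]
    verbs: ["create", "update", "patch", "delete", "get", "list", "watch"]
  # Everything else is dropped in this minimal example
  - level: None
```

Rules are evaluated in order and the first match wins, so the catch-all None rule must come last.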

Comprehensive logging not only supports investigations during a security incident but also helps satisfy compliance requirements for frameworks like SOC 2, HIPAA, and PCI DSS.

With Plural, you can automatically forward and aggregate audit logs across clusters. This ensures consistent visibility, centralized retention, and simplified compliance reporting across your entire fleet.

Avoid Common Pitfalls

Even with encryption enabled, security failures often stem from misconfiguration or incomplete coverage. Common mistakes include:

- Encrypting data at rest but not securing it in transit (e.g., missing TLS between components).

- Leaving Secrets unencrypted in etcd due to missing or incorrect configuration.

- Overly permissive RBAC policies granting unnecessary read access to sensitive data.

To mitigate these issues:

- Always use TLS for communication between control plane components.

- Regularly validate your EncryptionConfiguration and RBAC rules.

- Apply configuration changes through GitOps pipelines.

Using Plural’s GitOps-driven workflow ensures peer-reviewed, version-controlled configuration management. This reduces the risk of accidental exposure and provides a clear audit trail of who changed what and when.

Configure Monitoring and Alerting

Monitoring is essential for early detection of anomalies and rapid response to potential security incidents. You should set up alerts for:

- Unauthorized access to Secrets or ConfigMaps.

- Failed encryption or decryption attempts.

- Unexpected modifications to the EncryptionConfiguration or API server flags.

Integrating monitoring systems like Prometheus, Grafana, or your cloud provider’s observability stack enables real-time visibility.
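As one example of an alert for failed encryption or decryption attempts, the sketch below assumes the Prometheus Operator (for the PrometheusRule resource) and that Prometheus already scrapes kube-apiserver metrics. It relies on the apiserver_storage_transformation_operations_total counter, whose status label is "OK" for successful operations; verify the metric and label names against your Kubernetes version before relying on it. Resource names are hypothetical.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: apiserver-encryption-at-rest   # hypothetical
  namespace: monitoring                # hypothetical
spec:
  groups:
    - name: encryption-at-rest
      rules:
        - alert: APIServerStorageTransformationErrors
          # Any non-OK encrypt/decrypt operation, grouped by direction
          expr: |
            sum by (transformation_type) (
              rate(apiserver_storage_transformation_operations_total{status!="OK"}[5m])
            ) > 0
          for: 10m
          labels:
            severity: critical
          annotations:
            summary: kube-apiserver is failing to encrypt or decrypt stored data
```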

Plural’s unified console offers a single-pane-of-glass view across all clusters—aggregating events, metrics, and alerts in one place. This centralized observability reduces operational friction and improves incident response times by eliminating context switching between tools.

Troubleshoot Encryption Issues

If encryption at rest isn’t functioning as expected, follow a structured troubleshooting approach:

- Check kube-apiserver logs for messages related to encryption provider errors or KMS connectivity issues.

- Validate your EncryptionConfiguration syntax and confirm the correct provider is listed first.

- Verify encryption status by creating a test Secret and inspecting its etcd value using etcdctl. Look for a prefix like k8s:enc: followed by ciphertext.

- Review RBAC rules to ensure no unauthorized roles can modify the encryption configuration.

Plural simplifies this process with its AI Insight Engine, which automatically performs root cause analysis across cluster logs and configurations. It helps pinpoint issues such as misconfigured providers, API server flag mismatches, or connectivity failures with your KMS—allowing teams to resolve problems quickly and maintain strong data protection guarantees.

How Plural Simplifies Encryption at Rest

Configuring encryption at rest in Kubernetes involves managing EncryptionConfiguration objects, integrating with a Key Management Service (KMS), and ensuring consistent application across a fleet of clusters. While Kubernetes provides the necessary primitives, implementing them at scale requires significant manual effort and introduces operational complexity. Misconfigurations can lead to security vulnerabilities or data loss.

Plural provides a unified platform that streamlines the entire lifecycle of encryption at rest. By leveraging GitOps, Infrastructure as Code (IaC), and a centralized control plane, Plural automates configuration, simplifies key management, and ensures your security posture is consistent and auditable across all your Kubernetes environments. This approach reduces the operational burden on platform teams and minimizes the risk of human error, allowing you to secure sensitive data without slowing down development.

Use Plural's Built-in Security Features

Kubernetes natively supports encryption at rest for secrets stored in etcd via the EncryptionConfiguration object. However, manually creating, distributing, and managing these configuration files for every cluster is error-prone and doesn't scale. Plural abstracts this complexity through its built-in security framework. The platform allows you to define encryption policies centrally and apply them consistently across your entire fleet. Using Plural’s agent-based architecture, these configurations are enforced on each managed cluster, ensuring that all secrets are encrypted by default. This removes the need for engineers to manually configure individual clusters and guarantees a baseline security standard is met everywhere.

Automate Configuration with GitOps

Plural is built on a GitOps foundation, which treats your Git repository as the single source of truth for all configurations, including security policies. Instead of manually placing an encryptionConfig.yaml file on each control plane node, you commit your encryption configuration to a version-controlled repository. Plural’s continuous deployment capabilities automatically detect changes and apply them to the target clusters. This workflow ensures that every modification to your encryption settings is auditable through a pull request, providing a clear history of who changed what and when. This API-driven approach makes it simple to roll out updates or revert changes, bringing repeatability and reliability to your security operations.

Centralize Key Management

Effective encryption depends on secure and robust key management. Plural simplifies the integration of external Key Management Services (KMS) from major cloud providers like AWS KMS, Azure Key Vault, and Google Cloud KMS. Using Plural Stacks, you can manage the necessary Terraform configurations to provision and connect your KMS provider as part of your infrastructure-as-code workflow. This allows you to centralize the control and lifecycle of your encryption keys, including rotation and access policies, from a single platform. By managing KMS integration as code, you ensure that key management practices are standardized and consistently applied across all clusters, which is critical for meeting compliance requirements.

Use Seamless Integrations

Beyond etcd encryption, a complete secrets management strategy involves securely delivering secrets to running pods. Plural’s application catalog simplifies the deployment and configuration of essential tools like the Secrets Store CSI Driver and External Secrets Operator. The Secrets Store CSI Driver mounts secrets from your centralized KMS or secrets manager directly into pods as files, without requiring them to be stored as Kubernetes Secrets, while the External Secrets Operator keeps Kubernetes Secrets synchronized with the external store, where at-rest encryption in etcd then protects them. You can deploy these operators across your fleet with a few clicks or a simple manifest definition in your Git repository. This seamless integration creates a secure, end-to-end workflow for managing sensitive data, from at-rest encryption in etcd to secure consumption by your applications.


Frequently Asked Questions

Are Kubernetes Secrets not secure by default? I thought that's what they were for.

This is a common point of confusion. By default, Kubernetes Secrets are only Base64 encoded, which is a way to represent data, not to secure it. Anyone with access to your etcd datastore can easily decode them. Enabling at-rest encryption is the necessary step to apply actual cryptographic protection, making the data unreadable to unauthorized parties who might access the underlying storage.

When should I use a local encryption key versus an external Key Management Service (KMS)?

While using a local key is simpler to set up for development or testing environments, an external KMS is the standard for production. A KMS separates your encryption keys from your cluster, which is a major security advantage. If your cluster is compromised, the keys remain safe in a dedicated, highly available service. This separation of concerns is also often a requirement for meeting strict compliance standards.

How disruptive is rotating encryption keys? Do I need to schedule downtime?

Key rotation in Kubernetes is designed to be a non-disruptive, online operation. The process involves adding your new key to the EncryptionConfiguration file while keeping the old key present for decryption. This allows the API server to read data encrypted with the old key while writing new data with the new key. Once all existing secrets are re-encrypted, you can safely remove the old key. With a GitOps workflow, this entire process can be automated and rolled out safely without any service interruption.

Does enabling at-rest encryption in the API server also protect my application data in Persistent Volumes?

No, it does not. The at-rest encryption configured for the kube-apiserver specifically protects API objects stored in etcd, like Secrets and ConfigMaps. It does not encrypt the data your applications write to Persistent Volumes (PVs). For that, you need to enable encryption at the storage layer, typically through features provided by your cloud provider or your on-premise storage solution. A complete security strategy requires protecting data at both the application and storage levels.

How does Plural make managing encryption configurations easier than just doing it manually?

Manually managing encryption involves placing configuration files on each control plane node and ensuring consistency across every cluster, which is prone to error at scale. Plural automates this by treating your encryption configuration as code within a Git repository. You define your policy once, and Plural's GitOps engine ensures it's applied correctly across your entire fleet. This provides a version-controlled, auditable workflow for managing encryption settings and key rotation, eliminating manual steps and reducing the risk of misconfiguration.
