Kubernetes Basics: A Comprehensive Guide

Master Kubernetes basics with this comprehensive guide, covering deployment, scaling, and management of containerized applications for efficient operations.

Kubernetes has become the de facto standard for container orchestration, simplifying the deployment, scaling, and management of applications in the cloud-native era. But its power comes with complexity. For those new to this container management system, understanding Kubernetes basics is crucial for navigating its architecture and effectively deploying applications.

Whether you're a developer just starting with containers or an experienced DevOps engineer looking to deepen your Kubernetes knowledge, this guide provides a solid foundation for mastering this powerful platform. We'll also address potential challenges with Kubernetes and explore how platforms like Plural help you overcome them.

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key Takeaways

- Kubernetes simplifies complex deployments: Managing containerized applications at scale presents significant challenges. Kubernetes automates deployments, scaling, and ongoing maintenance, allowing you to focus on application development rather than infrastructure management.

- Master core concepts for effective management: Understanding fundamental concepts like Pods, Nodes, Deployments, and Services is crucial for effectively deploying and scaling applications on Kubernetes. These concepts form the building blocks for managing your workloads and ensuring their stability and performance.

- Simplify Kubernetes complexity with Plural: While powerful, Kubernetes has a steep learning curve and introduces operational challenges. Plural is designed to help teams move beyond these limitations and confidently manage Kubernetes at scale.

What is Kubernetes?

Kubernetes (often shortened to k8s) is an open-source system for automating the deployment, scaling, and management of containerized applications. Think of it as a sophisticated container orchestrator that simplifies running and managing containerized applications at scale.

Problems Kubernetes Solves

Running containerized applications, especially at scale, presents several challenges. Kubernetes addresses these directly:

- Complex deployments: Deploying and managing even a handful of containers across multiple servers quickly becomes unwieldy. Kubernetes provides a declarative model for defining your desired state, which simplifies complex deployments and automates the rollout process. You describe how your application should run, and Kubernetes makes it happen.

- Scaling difficulties: Manually scaling applications up or down to meet changing demand is time-consuming and error-prone. Kubernetes automates scaling based on metrics like CPU usage or requests per second, ensuring your application can handle fluctuating workloads.

- Infrastructure management: Keeping track of server health, resource allocation, and container lifecycles is a significant undertaking. Kubernetes automates these tasks, freeing you to focus on application development rather than infrastructure management.

- Monitoring and troubleshooting: Gaining visibility into the health and performance of your containerized applications can be challenging. Kubernetes provides building blocks for observability, such as container logs, cluster events, and health probes, and it integrates with monitoring stacks such as Prometheus, making it easier to identify and resolve issues.

Benefits of Container Orchestration

Using a container orchestrator like Kubernetes offers numerous benefits:

- Simplified container management: Kubernetes streamlines the deployment, scaling, and management of containerized applications, making it easier to run complex applications across a cluster of machines.

- Scalability and high availability: Kubernetes automatically scales your applications up or down based on demand, ensuring they can handle fluctuating workloads. It also provides high availability by automatically restarting failed containers and rescheduling them to healthy servers.

- Self-healing and resilience: Kubernetes continuously monitors the health of your containers (for example, through liveness probes) and automatically restarts or replaces any that fail, helping keep your applications running. This automated recovery process minimizes downtime and keeps service availability consistent.

- Improved resource utilization: Kubernetes schedules containers across your cluster based on their resource requests, making better use of available capacity and reducing infrastructure costs, so you get more out of your infrastructure investments.

- Portability and flexibility: Kubernetes can run on various infrastructure providers, from public clouds to on-premises data centers, allowing you to choose the best environment for your applications. This portability simplifies migrating applications between different environments.

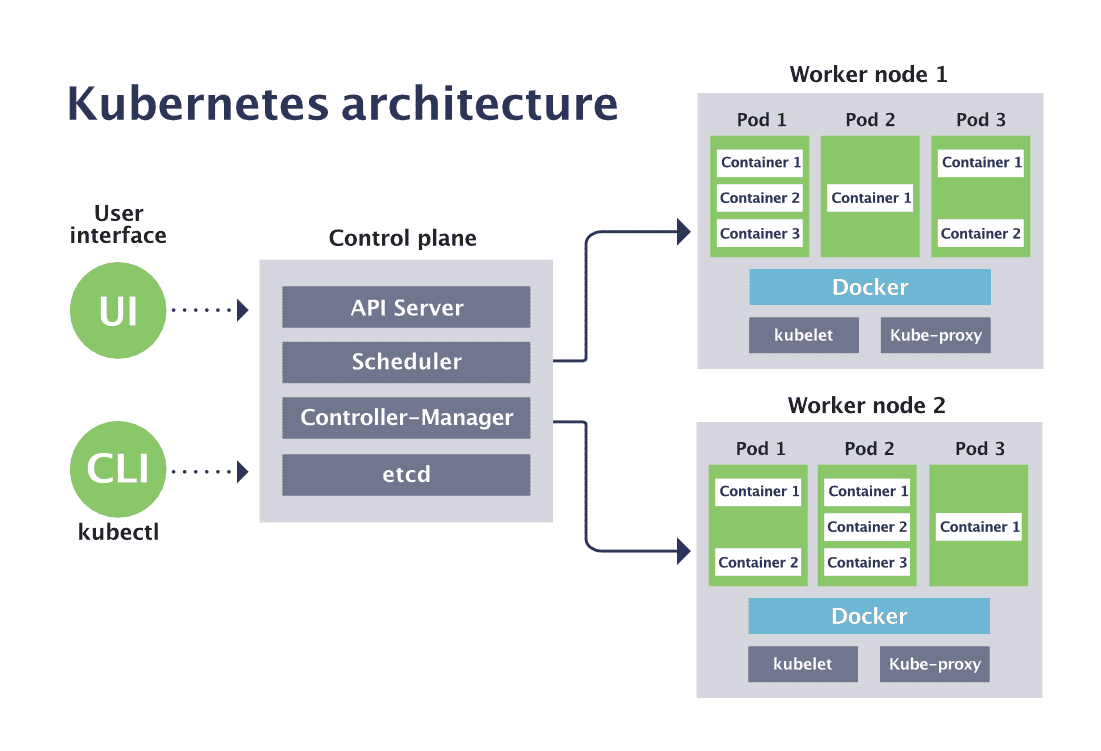

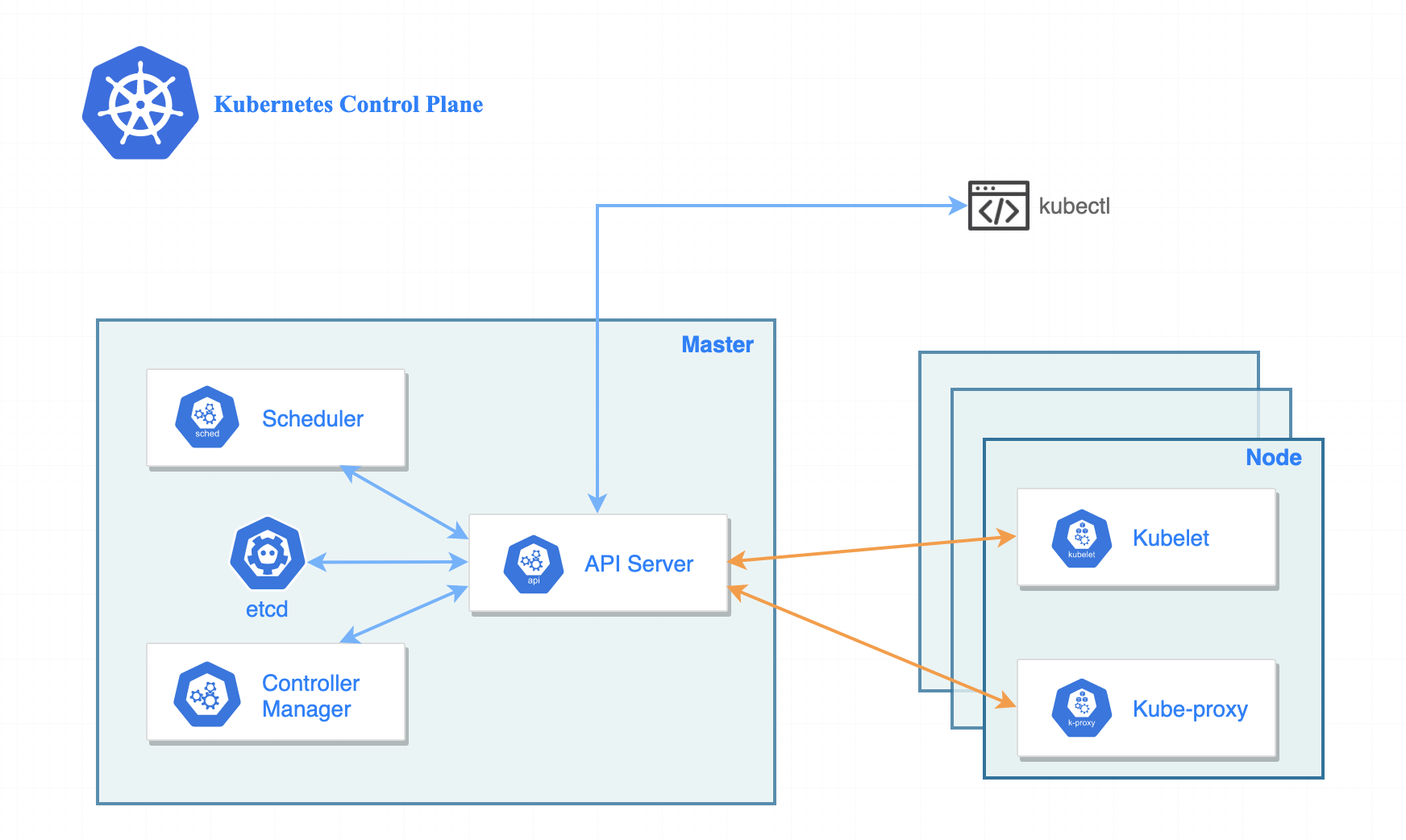

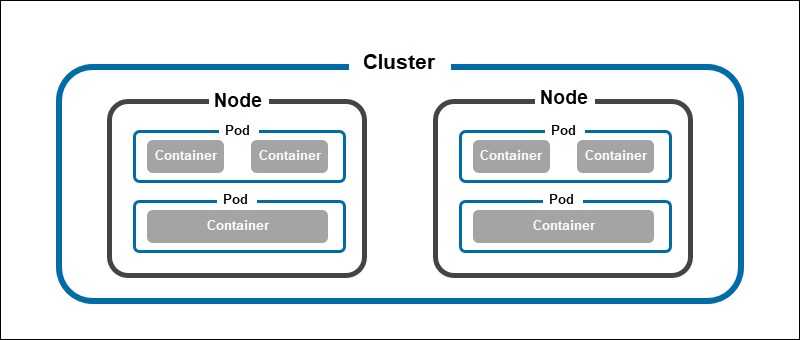

Kubernetes Architecture

Kubernetes uses a distributed system architecture, separating the components responsible for managing the cluster (the control plane) from the machines running your applications (the nodes). This design promotes scalability, resilience, and flexibility.

Control Plane

The control plane is the brain of your Kubernetes cluster, responsible for making global decisions, such as scheduling workloads and responding to cluster events.

Key control plane components include:

- API Server (kube-apiserver): The API server is the front end of the Kubernetes control plane. All other components interact with the cluster through the API server, which exposes the Kubernetes API, enabling users and other components to manage the cluster. It acts as the central point of communication.

- Scheduler (kube-scheduler): The scheduler assigns pods to nodes based on resource availability, constraints, and other factors, distributing workloads efficiently across the cluster. It takes into account the pods' CPU and memory requests as well as constraints such as node affinity rules and taints and tolerations.

- Controller Manager (kube-controller-manager): The controller manager runs a set of control loops that continuously monitor the state of the cluster and make adjustments to maintain the desired state. For example, if a pod managed by a ReplicaSet fails or is deleted, the ReplicaSet controller creates a new one to replace it.

- etcd: etcd is a consistent and highly available key-value store that Kubernetes uses to store all cluster data. This includes information about nodes, pods, services, and other resources. etcd's reliability is crucial for overall cluster stability.
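All of these control plane components revolve around one idea: continuously reconciling the actual state of the cluster toward the desired state. The following Python sketch models a single reconciliation pass of the kind the controller manager runs. It is a toy under stated assumptions: the hypothetical ClusterState class stands in for the data the API server would read from etcd, pods are plain strings named web-N, and a real controller watches the API server for changes instead of mutating a local list.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """Hypothetical stand-in for the desired and observed state a controller reads."""
    desired_replicas: int
    pods: list = field(default_factory=list)
    next_id: int = 0  # used to generate unique pod names


def reconcile(state: ClusterState) -> list:
    """Run one reconciliation pass and return the actions taken."""
    actions = []
    # Too few pods: create replacements until observed matches desired.
    while len(state.pods) < state.desired_replicas:
        name = f"web-{state.next_id}"
        state.next_id += 1
        state.pods.append(name)
        actions.append(f"create {name}")
    # Too many pods: delete the surplus.
    while len(state.pods) > state.desired_replicas:
        name = state.pods.pop()
        actions.append(f"delete {name}")
    return actions


if __name__ == "__main__":
    state = ClusterState(desired_replicas=3)
    print(reconcile(state))     # ['create web-0', 'create web-1', 'create web-2']
    state.pods.remove("web-1")  # simulate a failed pod
    print(reconcile(state))     # ['create web-3']
    print(reconcile(state))     # [] (already converged)
```

Calling reconcile repeatedly is safe: once the actual state matches the desired state, a pass takes no action, which is why control loops can run indefinitely.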

Nodes

Nodes are the worker machines in a Kubernetes cluster. They can be physical or virtual machines responsible for running your applications. Each node runs several key components:

- Kubelet: The kubelet is an agent that runs on each node and communicates with the control plane. It receives instructions from the control plane about which pods to run and manages their lifecycle. The kubelet ensures that containers are running and healthy.

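- Kube-proxy: Kube-proxy is a network proxy that runs on each node and maintains network rules (for example, iptables or IPVS rules) that implement Services. It routes traffic addressed to a Service to one of the pods backing it, providing load balancing within the cluster. Pod-to-pod connectivity itself is provided by the cluster's network (CNI) plugin, and DNS-based service discovery by the cluster DNS add-on, such as CoreDNS.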

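- Container Runtime: The container runtime is the software responsible for running containers on the node. Kubernetes communicates with it through the Container Runtime Interface (CRI); popular runtimes include containerd and CRI-O. Docker Engine is no longer supported directly since dockershim was removed in Kubernetes 1.24, although it can still be used through the cri-dockerd adapter. The container runtime interfaces with the operating system kernel to manage containers.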

Pods and Containers

Pods are the smallest deployable units in Kubernetes. A pod encapsulates one or more containers, along with shared storage and network resources. While you can have multiple containers in a pod, it's common practice for a pod to contain a single container.

Key characteristics of pods include:

- Shared Resources: Containers within a pod share the same network namespace and can communicate with each other using localhost. They can also mount shared volumes to exchange files. This simplifies inter-container communication and data sharing.

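- Ephemeral Nature: Pods are designed to be ephemeral. The kubelet restarts failed containers within a pod according to the pod's restart policy, but a pod itself is never moved: if it is deleted or its node fails, a controller such as a ReplicaSet creates a new pod to replace it. This replacement model underpins Kubernetes' high availability and resilience, and Kubernetes manages the process automatically.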

- Single Application Instance: Pods typically run a single instance of a given application. If you need to run multiple instances, you use a Deployment, which manages multiple replicas of a pod. Deployments provide scalability and rolling updates for your applications.

Managing a Kubernetes Cluster

Once your Kubernetes cluster is up and running, the real work begins: managing and deploying your applications. This involves understanding deployment strategies, scaling your applications based on demand, and effectively managing the underlying resources.

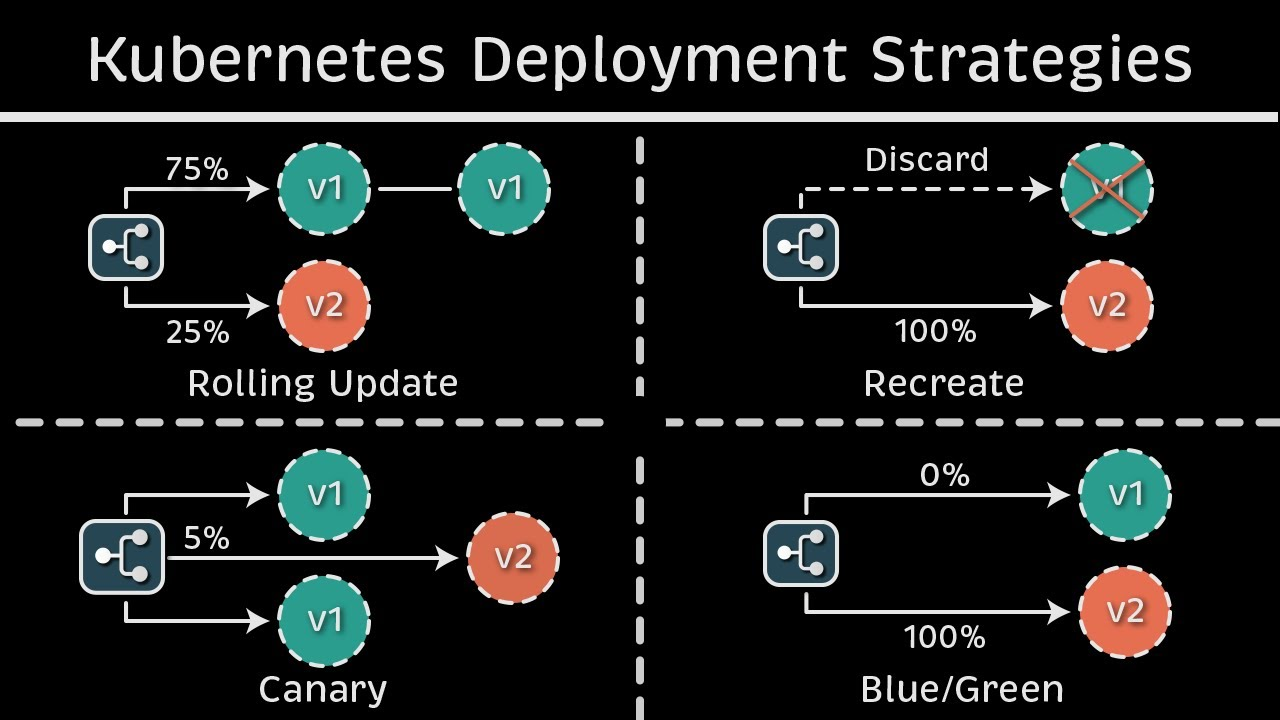

Deployment Strategies

Kubernetes lets you control how updates roll out, and choosing the right strategy minimizes downtime and ensures a smooth transition. Deployments natively support two strategies. RollingUpdate, the default, gradually replaces old pods with new ones and, combined with readiness probes, allows zero-downtime deployments. Recreate terminates all old pods before starting new ones, which causes downtime but guarantees that two versions never run side by side. Other patterns are built on top of these primitives. A blue/green deployment, for example, runs two identical but separate Deployments and switches traffic between them, typically by updating a Service's label selector, which provides a rapid rollback option if issues arise. Canary releases and A/B testing offer more sophisticated release strategies for complex applications. Consider factors like application complexity, user impact, and rollback capabilities when making your decision. A misconfigured deployment can lead to disruptions, so careful planning and testing are essential.
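To make the RollingUpdate behavior concrete, here is a simplified Python simulation of how a Deployment steps from old pods to new ones. It uses the two settings Kubernetes exposes for this strategy, maxSurge (how many extra pods may exist during the update) and maxUnavailable (how many pods may be missing), expressed here as absolute numbers. In Kubernetes, both default to 25% of the replica count, with maxSurge rounded up and maxUnavailable rounded down. The sketch assumes new pods become ready instantly, which real rollouts do not; readiness probes gate each step.

```python
def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list:
    """Simulate a RollingUpdate and return (old_pods, new_pods) after each step.

    Simplifying assumption: new pods are ready as soon as they are created.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up new pods, keeping the total at or below replicas + max_surge.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Scale down old pods, keeping at least replicas - max_unavailable pods.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        history.append((old, new))
    return history


if __name__ == "__main__":
    for step in rolling_update(4, max_surge=1, max_unavailable=0):
        print(step)
```

For example, rolling_update(4, max_surge=1, max_unavailable=0) returns [(4, 0), (3, 1), (2, 2), (1, 3), (0, 4)]: capacity never drops below four pods, at the cost of one extra pod during the rollout.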

Scaling and Self-Healing

One of Kubernetes' core strengths is its ability to scale and self-heal automatically. Scaling adjusts the number of replicas of your application based on demand, ensuring optimal performance and resource utilization. The Horizontal Pod Autoscaler (HPA) automatically scales the number of pods based on metrics like CPU utilization or memory consumption; for these resource metrics, it relies on the Metrics Server (or another metrics API provider) being installed in the cluster. Kubernetes also has built-in self-healing capabilities: the kubelet restarts containers that crash or fail their liveness probes, and controllers replace pods that are lost, for example when a node fails. This ensures high availability and resilience, even when unexpected issues occur.
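The Kubernetes documentation describes the HPA's core calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), and the HPA skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a minimal sketch of that formula for a single metric. The min_replicas and max_replicas defaults are illustrative assumptions, and the real controller adds behavior this sketch omits, such as stabilization windows and handling of missing or not-yet-ready pod metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    # Close enough to the target: do not scale, to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
    print(desired_replicas(4, current_metric=63, target_metric=60))  # 4 (within tolerance)
```

For example, four pods averaging 90% CPU against a 60% target yield a ratio of 1.5, so the HPA asks for six pods.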

Resource Management

Efficient resource management is fundamental to running a stable and cost-effective Kubernetes cluster. Kubernetes allows you to define resource requests and limits for each container in a pod. The scheduler uses requests to decide which node has room for a pod, while limits cap what a container may consume at runtime: a container that exceeds its memory limit is terminated, and one that exceeds its CPU limit is throttled. Together, they ensure that applications have the resources they need without starving other workloads. Resource quotas and limit ranges provide further control over resource allocation, preventing any single application from monopolizing cluster resources. Add-ons like the Vertical Pod Autoscaler (VPA) can help automate adjusting resource requests and limits based on application behavior.
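Because the scheduler reasons about requests rather than limits, a pod fits on a node only if its requests, plus the requests of the pods already there, stay within the node's allocatable capacity. The Python sketch below illustrates that check. It assumes a simplified quantity parser that handles only millicores (500m), plain or fractional cores, and the common memory suffixes (Ki, Mi, Gi, Ti and k, M, G, T); the real scheduler understands the full quantity format and evaluates many more resources and constraints.

```python
# Suffix multipliers for memory quantities: binary (Ki, Mi, ...) and decimal (k, M, ...).
MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '500m', '2', or '0.25' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '128Mi' or '1G' to bytes."""
    for suffix, factor in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)  # plain bytes


def fits(allocatable: dict, running_requests: list, pod_requests: dict) -> bool:
    """True if the pod's requests fit alongside the requests already placed on the node."""
    cpu_used = sum(cpu_millicores(r["cpu"]) for r in running_requests)
    mem_used = sum(memory_bytes(r["memory"]) for r in running_requests)
    return (
        cpu_used + cpu_millicores(pod_requests["cpu"]) <= cpu_millicores(allocatable["cpu"])
        and mem_used + memory_bytes(pod_requests["memory"]) <= memory_bytes(allocatable["memory"])
    )


if __name__ == "__main__":
    node = {"cpu": "2", "memory": "4Gi"}
    running = [{"cpu": "500m", "memory": "1Gi"}, {"cpu": "1", "memory": "2Gi"}]
    print(fits(node, running, {"cpu": "500m", "memory": "1Gi"}))    # True: exactly fills the node
    print(fits(node, running, {"cpu": "600m", "memory": "512Mi"}))  # False: CPU would exceed 2 cores
```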

Core Kubernetes Concepts

This section covers core Kubernetes concepts, including namespaces, resource quotas, services, networking, storage, and persistence. Understanding these concepts is crucial for effectively managing and deploying applications on Kubernetes.

Namespaces and Resource Quotas

Namespaces provide a way to divide cluster resources among multiple users or teams. Think of them as virtual clusters within your physical Kubernetes cluster. This isolation is essential for multi-tenant environments, preventing resource conflicts and ensuring fair usage. Using resource quotas, you can limit the amount of CPU, memory, storage, and other resources a namespace can consume. This prevents any single team or application from monopolizing cluster resources. For example, you might create separate namespaces for development, testing, and production, each with its own resource quota. Check out Plural's comprehensive guide to master Kubernetes namespaces.
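A ResourceQuota is enforced at admission time: when a new pod would push a namespace's total usage past a hard limit, the API server rejects it. The Python sketch below models that check. The resource names requests.cpu, requests.memory, and pods mirror real ResourceQuota keys, but the Namespace class is hypothetical and the units (millicores and MiB as plain integers) are simplifying assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Namespace:
    """Hypothetical model of a namespace with a ResourceQuota attached."""
    name: str
    hard: dict                                # quota limits, e.g. {"pods": 10}
    used: dict = field(default_factory=dict)  # usage already charged to the namespace

    def admit(self, pod_usage: dict) -> bool:
        """Admit the pod only if no quota-tracked resource would exceed its hard limit."""
        for resource, limit in self.hard.items():
            if self.used.get(resource, 0) + pod_usage.get(resource, 0) > limit:
                return False  # the API server would reject the pod
        # Charge the pod's usage only after every check has passed.
        for resource, amount in pod_usage.items():
            self.used[resource] = self.used.get(resource, 0) + amount
        return True


if __name__ == "__main__":
    # Units are simplified: CPU in millicores, memory in MiB.
    dev = Namespace("dev", hard={"requests.cpu": 2000, "requests.memory": 4096, "pods": 3})
    pod = {"requests.cpu": 500, "requests.memory": 1024, "pods": 1}
    print([dev.admit(pod) for _ in range(4)])  # [True, True, True, False]
```

The fourth pod is rejected because it would be the namespace's fourth pod, even though CPU and memory still have room.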

Services and Networking

Kubernetes Services act as an abstraction layer over a group of pods, providing a stable endpoint for accessing your application. The service IP remains consistent even if individual pods are created or destroyed due to scaling or failure. Services also offer built-in load balancing, distributing traffic across the healthy pods backing the service. Kubernetes manages internal DNS, allowing services to discover and communicate with each other using stable names, regardless of their location within the cluster. This simplifies inter-service communication and makes your applications more resilient.
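Under the hood, a Service's label selector determines which pods back it, and only pods that pass their readiness checks receive traffic. The Python sketch below models that selection, plus the DNS name that pods use to reach a Service. It assumes the default cluster domain cluster.local, and its round-robin iterator is illustrative only: kube-proxy's IPVS mode defaults to round-robin, while its iptables mode picks a backend at random.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict
    ready: bool  # result of the pod's readiness checks


def endpoints(selector: dict, pods: list) -> list:
    """IPs of ready pods whose labels match every key/value in the Service's selector."""
    return [
        p.ip for p in pods
        if p.ready and all(p.labels.get(k) == v for k, v in selector.items())
    ]


def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name that pods can use to reach the Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def round_robin(ips: list):
    """Illustrative balancer that cycles through endpoints in order."""
    return itertools.cycle(ips)


if __name__ == "__main__":
    pods = [
        Pod("web-a", "10.0.0.1", {"app": "web"}, ready=True),
        Pod("web-b", "10.0.0.2", {"app": "web"}, ready=False),  # failing readiness: excluded
        Pod("db-0", "10.0.0.3", {"app": "db"}, ready=True),     # wrong labels: excluded
    ]
    print(service_dns_name("web", "shop"), endpoints({"app": "web"}, pods))
    # web.shop.svc.cluster.local ['10.0.0.1']
```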

Storage and Persistence

Kubernetes offers various storage options to suit different application needs. Ephemeral storage, which is tied to the lifecycle of a pod, is suitable for temporary files and caches. For data that needs to persist beyond the life of a pod, Kubernetes provides Persistent Volumes (PVs). PVs represent a piece of storage provisioned by an administrator, while Persistent Volume Claims (PVCs) are requests for storage by users or applications. This decoupling allows developers to request storage without knowing the underlying storage infrastructure. Storage classes further simplify storage management by allowing you to define different types of storage (e.g., fast SSDs, standard HDDs) that can be dynamically provisioned based on PVC requests. See Plural's comprehensive guide to learn how to manage Kubernetes persistent volumes effectively.
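Binding a PVC to a PV is essentially a matching problem. This Python sketch approximates the static-binding logic: a claim can bind to an unclaimed PV with the same storage class, all of the requested access modes, and at least the requested capacity, and the smallest suitable volume is preferred to avoid wasting space. The classes are hypothetical and capacities are whole GiB for simplicity; real binding also considers label selectors and volume modes, and when no PV matches, a StorageClass provisioner can create one dynamically.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    storage_class: str
    access_modes: frozenset
    claimed_by: Optional[str] = None


@dataclass
class PersistentVolumeClaim:
    name: str
    request_gi: int
    storage_class: str
    access_modes: frozenset


def bind(claim: PersistentVolumeClaim, volumes: list) -> Optional[PersistentVolume]:
    """Bind the claim to the smallest unclaimed PV that satisfies it, or return None."""
    candidates = [
        pv for pv in volumes
        if pv.claimed_by is None
        and pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes  # PV must offer every requested mode
        and pv.capacity_gi >= claim.request_gi
    ]
    if not candidates:
        return None  # a dynamic provisioner could create a new PV at this point
    best = min(candidates, key=lambda pv: pv.capacity_gi)
    best.claimed_by = claim.name
    return best


if __name__ == "__main__":
    rwo = frozenset({"ReadWriteOnce"})
    volumes = [
        PersistentVolume("pv-large", 100, "fast-ssd", rwo),
        PersistentVolume("pv-small", 10, "fast-ssd", rwo),
        PersistentVolume("pv-hdd", 20, "standard", rwo),
    ]
    print(bind(PersistentVolumeClaim("db-data", 5, "fast-ssd", rwo), volumes).name)  # pv-small
```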

Working with Kubernetes Workloads

Kubernetes offers several ways to manage your workloads, each designed for different application needs.

Deployments and ReplicaSets

Deployments are the standard way to manage stateless applications in Kubernetes. They provide a declarative approach, allowing you to specify the desired state of your application (e.g., the number of replicas, the container image, resource limits), and Kubernetes ensures that the actual state matches your desired state. A Deployment manages ReplicaSets behind the scenes. This simplifies rolling updates and rollbacks, allowing you to deploy new versions of your application without downtime. For example, a Deployment can gradually increase the number of pods running the latest version while decreasing the number of pods running the old version. If issues arise with the new version, the Deployment can quickly revert to the previous version. Discover tools to power up your Kubernetes Deployments in this guide by Plural.

StatefulSets and DaemonSets

While Deployments are ideal for stateless applications, StatefulSets and DaemonSets address specific needs for other workloads. StatefulSets are designed for stateful applications, such as databases, where each pod requires a unique identity and persistent storage. They provide guarantees about the ordering and uniqueness of pods, ensuring that data is preserved during scaling or upgrades. A practical example of a StatefulSet would be managing a Cassandra cluster, where each pod represents a database node. For more details on running stateful applications, refer to the StatefulSet master guide article by Plural.
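The identity guarantees StatefulSets provide follow predictable naming rules. This Python sketch derives them for a hypothetical three-replica Cassandra StatefulSet: pods are named <statefulset>-<ordinal>, each gets a stable DNS name through the headless Service that governs the set, and each volumeClaimTemplate yields a PVC named <template>-<pod>. With the default OrderedReady pod management policy, pods are created in ordinal order and removed in reverse order, which the scale_down_order helper mirrors. The Service, namespace, and template names are assumptions.

```python
def statefulset_pods(name: str, replicas: int, service: str, namespace: str,
                     claim_template: str) -> list:
    """Derive the stable identity of each StatefulSet pod, in creation order."""
    pods = []
    for ordinal in range(replicas):  # OrderedReady: ordinal 0 is created first
        pod = f"{name}-{ordinal}"
        pods.append({
            "pod": pod,
            # Stable per-pod DNS name through the governing headless Service.
            "dns": f"{pod}.{service}.{namespace}.svc.cluster.local",
            # PVC created from the volumeClaimTemplate; it outlives pod rescheduling.
            "pvc": f"{claim_template}-{pod}",
        })
    return pods


def scale_down_order(name: str, current: int, target: int) -> list:
    """Pods removed when scaling down: highest ordinal first."""
    return [f"{name}-{i}" for i in range(current - 1, target - 1, -1)]


if __name__ == "__main__":
    for p in statefulset_pods("cassandra", 3, "cassandra", "db", "data"):
        print(p)
    print(scale_down_order("cassandra", 3, 1))  # ['cassandra-2', 'cassandra-1']
```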

DaemonSets ensure that a copy of a pod runs on every node (or a subset of nodes) in your cluster. This is useful for running system services like monitoring agents, log collectors, or node-local network components. For instance, if you need to collect logs from every node in your cluster, a DaemonSet can deploy a log collector pod on each node. Check out Plural's ultimate guide to master Kubernetes DaemonSets.
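Conceptually, the DaemonSet controller works out which eligible nodes are missing a pod and which nodes should no longer run one. The Python sketch below models that with a simple nodeSelector-style label match; it deliberately ignores taints, tolerations, and node affinity, which the real controller also evaluates. The node names are hypothetical, while kubernetes.io/os is a standard node label.

```python
def daemonset_plan(nodes: dict, nodes_with_pod: set, node_selector: dict) -> tuple:
    """Return (nodes that need a daemon pod, nodes whose daemon pod should be removed)."""
    eligible = {
        node for node, labels in nodes.items()
        if all(labels.get(k) == v for k, v in node_selector.items())
    }
    to_create = sorted(eligible - nodes_with_pod)
    to_delete = sorted(nodes_with_pod - eligible)
    return to_create, to_delete


if __name__ == "__main__":
    nodes = {
        "node-a": {"kubernetes.io/os": "linux"},
        "node-b": {"kubernetes.io/os": "linux"},    # e.g. a node that just joined
        "node-c": {"kubernetes.io/os": "windows"},
    }
    # A log collector that should run on every Linux node.
    print(daemonset_plan(nodes, {"node-a", "node-c"}, {"kubernetes.io/os": "linux"}))
    # (['node-b'], ['node-c'])
```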

Jobs and CronJobs

Jobs are used for tasks that run to completion, such as batch processing or data analysis. A Job creates one or more pods and ensures that a specified number of them terminate successfully. Kubernetes tracks the status of the pods and retries failed ones up to a configurable retry limit (backoffLimit, six by default), after which the Job is marked as failed. A common use case for Jobs is running a data processing script on a large dataset.
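The retry behavior of a Job can be sketched as a simple loop. In this Python model, each call to the hypothetical task function stands in for one pod. The loop stops once the requested number of completions have succeeded, or marks the Job as failed once failures exceed backoff_limit, treating the limit as a number of retries (six by default, as with the real backoffLimit field). It runs pods one at a time and skips the exponential back-off delay Kubernetes inserts between retries, so treat it as an illustration rather than the controller's exact logic.

```python
from typing import Callable


def run_job(task: Callable[[int], bool], completions: int = 1, backoff_limit: int = 6) -> str:
    """Run one 'pod' at a time until enough succeed, or give up after too many failures.

    task(attempt) stands in for a pod: it returns True on success, False on failure.
    """
    succeeded = failed = attempt = 0
    while succeeded < completions:
        if task(attempt):
            succeeded += 1
        else:
            failed += 1
            if failed > backoff_limit:  # retries exhausted
                return "Failed"
        attempt += 1
    return "Complete"


if __name__ == "__main__":
    print(run_job(lambda attempt: attempt >= 2))            # Complete (third try succeeds)
    print(run_job(lambda attempt: False, backoff_limit=2))  # Failed (after 3 attempts)
    print(run_job(lambda attempt: True, completions=3))     # Complete
```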

CronJobs extend the functionality of Jobs by allowing you to schedule them to run periodically, similar to cron jobs in Linux. This is useful for tasks that need to be performed regularly, such as backups, report generation, or scheduled maintenance. For example, you could use a CronJob to back up your database every night at midnight. For a comprehensive guide on jobs and cron jobs, explore this article by Plural.
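CronJob schedules use the standard five-field cron syntax (minute, hour, day of month, month, day of week), so a nightly midnight backup would use the schedule 0 0 * * *. The Python sketch below matches such schedules and finds the next run time. It is deliberately limited: it supports only *, single numbers, and comma-separated lists, not ranges, steps, or macros like @daily. Like cron, it treats the day-of-month and day-of-week fields as alternatives when both are restricted. Kubernetes interprets schedules in the kube-controller-manager's time zone unless you set .spec.timeZone, whereas this sketch works with naive datetimes.

```python
from datetime import datetime, timedelta


def _field_matches(field: str, value: int) -> bool:
    """Match one cron field; supports '*', single numbers, and comma-separated lists."""
    return field == "*" or value in {int(part) for part in field.split(",")}


def matches(schedule: str, t: datetime) -> bool:
    minute, hour, dom, month, dow = schedule.split()
    cron_dow = (t.weekday() + 1) % 7  # cron counts Sunday as 0; Python counts Monday as 0
    dom_ok, dow_ok = _field_matches(dom, t.day), _field_matches(dow, cron_dow)
    # As in cron, if both day fields are restricted, either one may match.
    day_ok = (dom_ok or dow_ok) if (dom != "*" and dow != "*") else (dom_ok and dow_ok)
    return (_field_matches(minute, t.minute) and _field_matches(hour, t.hour)
            and _field_matches(month, t.month) and day_ok)


def next_run(schedule: str, after: datetime) -> datetime:
    """First minute strictly after `after` that matches the schedule (searches up to a year)."""
    t = after.replace(second=0, microsecond=0) + timedelta(minutes=1)
    for _ in range(366 * 24 * 60):
        if matches(schedule, t):
            return t
        t += timedelta(minutes=1)
    raise ValueError(f"no run time found within a year for {schedule!r}")


if __name__ == "__main__":
    # Nightly backup at midnight.
    print(next_run("0 0 * * *", datetime(2024, 5, 1, 13, 45)))  # 2024-05-02 00:00:00
```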

Kubernetes Configuration and Security

Security and proper configuration are paramount in Kubernetes. This section covers essential aspects of configuring and securing your Kubernetes workloads and cluster.

ConfigMaps and Secrets

Managing application configuration and sensitive data effectively is crucial for a secure and adaptable Kubernetes environment. Kubernetes offers two primary resources for this: ConfigMaps and Secrets.

ConfigMaps store non-sensitive configuration data as key-value pairs, externalizing configuration from your application's code. This lets you change settings without rebuilding container images. This separation also enhances portability, letting you deploy the same application across different environments with different configurations. For example, a ConfigMap can supply environment variables, command-line arguments, or configuration files for your application. For Kubernetes ConfigMap best practices, explore this article by Plural.
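On the application side, consuming a ConfigMap can be as simple as reading environment variables. Assuming the ConfigMap's keys are injected into the container (for example, with envFrom or valueFrom.configMapKeyRef in the pod spec), a Python service might load its settings like this. The variable names and defaults are hypothetical.

```python
import os
from typing import Mapping


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Read settings injected from a ConfigMap as environment variables, with safe defaults."""
    return {
        "log_level": env.get("LOG_LEVEL", "info"),
        "max_connections": int(env.get("MAX_CONNECTIONS", "100")),
        "cache_enabled": env.get("CACHE_ENABLED", "false").lower() == "true",
    }


if __name__ == "__main__":
    print(load_settings())
```

One design consequence: environment variables are read when the container starts, so a changed ConfigMap reaches this code only after the pod restarts. ConfigMaps mounted as volumes, by contrast, are eventually updated in place.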

Secrets are designed to store sensitive data such as passwords, API keys, and tokens. Like ConfigMaps, Secrets decouple sensitive data from your application code, improving security and portability. You can mount Secrets as volumes or expose them as environment variables within your pods, so sensitive information isn't hard-coded in application code or configuration files. Note, however, that Secret values are only base64-encoded and, by default, stored unencrypted in etcd; to protect them from unauthorized access, enable encryption at rest and restrict access with RBAC. For Kubernetes Secret best practices, explore this article by Plural.
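It is worth seeing how little protection the data field of a Secret offers on its own. In a Secret manifest, each value under data is base64-encoded, which is an encoding rather than encryption, so anyone who can read the Secret can recover the plain text. The Python sketch below shows both directions; the key and value are made up. The Secret API also accepts a stringData field for plain-text input, which the API server encodes for you.

```python
import base64


def encode_secret_data(values: dict) -> dict:
    """Build a Secret's `data` map: each value is base64-encoded (encoding, not encryption)."""
    return {key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()}


def decode_secret_data(data: dict) -> dict:
    """Anyone who can read the Secret can reverse the encoding."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


if __name__ == "__main__":
    data = encode_secret_data({"password": "s3cr3t"})
    print(data)                      # {'password': 'czNjcjN0'}
    print(decode_secret_data(data))  # {'password': 's3cr3t'}
```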

RBAC

Role-Based Access Control (RBAC) is fundamental to securing your Kubernetes cluster. RBAC governs who can access the cluster and which actions they may perform. By defining roles and assigning them to users, groups, or service accounts, you control permissions granularly within your Kubernetes environment. A role defines a set of permissions, such as creating pods, reading services, or managing deployments; a Role applies within a single namespace, while a ClusterRole applies across the whole cluster. These roles are bound to subjects using RoleBindings or ClusterRoleBindings. This enforces the principle of least privilege, granting users only the permissions they need for their tasks.

Refer to the Kubernetes RBAC Authorization Ultimate Guide by Plural for a comprehensive understanding of RBAC, including best practices and examples.
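RBAC authorization is purely additive: there are no deny rules, so a request is allowed if any rule in any role bound to the requester matches its API group, resource, and verb. The Python sketch below models that check. The PolicyRule class and the example roles are hypothetical, and the sketch ignores namespace scoping, resource names, and non-resource URLs, all of which real RBAC rules can express. As in Kubernetes, the empty string denotes the core API group.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    api_groups: tuple  # "" is the core API group (pods, services, ...)
    resources: tuple
    verbs: tuple


def _covers(allowed: tuple, value: str) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: list, api_group: str, resource: str, verb: str) -> bool:
    """Additive check: allowed if ANY rule from the subject's bound roles matches."""
    return any(
        _covers(r.api_groups, api_group) and _covers(r.resources, resource) and _covers(r.verbs, verb)
        for r in rules
    )


# Hypothetical roles, written as the rules they would contain.
POD_READER = [PolicyRule(("",), ("pods",), ("get", "list", "watch"))]
DEPLOYER = [PolicyRule(("apps",), ("deployments",), ("get", "list", "create", "update", "patch"))]

if __name__ == "__main__":
    print(is_allowed(POD_READER, "", "pods", "list"))    # True
    print(is_allowed(POD_READER, "", "pods", "delete"))  # False: no rule grants delete
    # A user bound to both roles gets the union of their permissions.
    print(is_allowed(POD_READER + DEPLOYER, "apps", "deployments", "create"))  # True
```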

Network Policies

Network Policies offer robust control over network traffic within your Kubernetes cluster. They define rules specifying how groups of pods communicate with each other and external networks. This granular control enhances application security by limiting exposure to potential threats.

By default, all pods in a Kubernetes cluster can communicate freely. Network Policies restrict this communication based on pod labels, namespaces, and IP address ranges. For example, a Network Policy can allow traffic only from pods within the same namespace or from specific IP ranges. This prevents unauthorized access to your applications and reduces the impact of security breaches. Note that Network Policies are enforced by the cluster's network plugin, so they take effect only if that plugin supports them (Calico and Cilium do, for example). The Plural guide on mastering Kubernetes network policy provides detailed information on implementing and managing Network Policies.
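The key rule behind Network Policies is that a pod becomes isolated for ingress as soon as any policy selects it; from then on, only traffic that some selecting policy explicitly allows gets through. The Python sketch below models that rule for pod-label selectors only, ignoring namespaces, IP blocks, ports, and egress. The IngressPolicy class and the labels are hypothetical.

```python
from dataclasses import dataclass


def _selects(selector: dict, labels: dict) -> bool:
    # An empty selector ({}) selects every pod, as in Kubernetes.
    return all(labels.get(k) == v for k, v in selector.items())


@dataclass
class IngressPolicy:
    pod_selector: dict  # pods this policy applies to
    allowed_from: list  # source-pod selectors; an empty list allows no ingress


def ingress_allowed(policies: list, src_labels: dict, dst_labels: dict) -> bool:
    applicable = [p for p in policies if _selects(p.pod_selector, dst_labels)]
    if not applicable:
        return True  # no policy selects the destination, so it is not isolated
    # Policies are additive: any applicable policy may allow the traffic.
    return any(_selects(sel, src_labels) for p in applicable for sel in p.allowed_from)


if __name__ == "__main__":
    only_api_to_db = [IngressPolicy({"app": "db"}, [{"app": "api"}])]
    print(ingress_allowed(only_api_to_db, {"app": "api"}, {"app": "db"}))  # True
    print(ingress_allowed(only_api_to_db, {"app": "web"}, {"app": "db"}))  # False
    print(ingress_allowed(only_api_to_db, {"app": "web"}, {"app": "api"}))  # True: api is not isolated
```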

Getting Started with Kubernetes

This section provides a practical starting point for your Kubernetes journey, covering local environment setup, kubectl interaction, and manifest creation.

Setting Up a Local Environment

Before deploying to a production Kubernetes cluster, a local environment is crucial for learning and experimentation. Lightweight Kubernetes distributions simplify this process. Minikube runs a local cluster, single-node by default, in a virtual machine or container on your personal computer. MicroK8s is another lightweight option focused on simple installation and minimal resource usage. K3s is designed for resource-constrained environments like IoT devices and edge computing. To test more complex deployments, kind (Kubernetes in Docker) runs multi-node clusters using Docker containers as nodes.

Check out Plural's comprehensive guide on Local Kubernetes to master local Kubernetes development.

kubectl Basics

In your local environment, you'll interact with the cluster primarily through kubectl, the command-line tool for managing Kubernetes resources. kubectl supports a wide range of operations:

- List running pods with kubectl get pods.

- Create a deployment with kubectl create deployment <name> --image=<image>, and change its size with kubectl scale deployment <name> --replicas=<count>.

- Expose an application with kubectl expose deployment <name> --port=<port>.

- Apply configuration from a YAML file with kubectl apply -f <filename>.

Understanding these basic commands is fundamental to managing your Kubernetes cluster. The official kubectl documentation provides a comprehensive reference.

Writing Kubernetes Manifests

Kubernetes uses a declarative approach to configuration: you define the desired state of your system in files called manifests, usually written in YAML (JSON is also accepted). These manifests describe the resources Kubernetes should create and manage. Every manifest specifies an apiVersion, a kind, metadata such as the resource's name, and a spec; for a basic pod, the spec lists its containers with their images, resource requests and limits, and other settings. You'll need to learn how to define, update, and manage pods, including configuring their network settings, storage, and lifecycle parameters.
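Because kubectl accepts JSON manifests as well as YAML, you can also generate manifests programmatically. The Python sketch below builds a basic Pod manifest with the required apiVersion, kind, metadata, and spec fields, plus resource requests and limits, and prints it as JSON. The pod name, image tag, port, and resource values are illustrative assumptions.

```python
import json


def pod_manifest(name: str, image: str, port: int = 80,
                 cpu: str = "250m", memory: str = "128Mi") -> dict:
    """Build a minimal Pod manifest as a Python dict."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "ports": [{"containerPort": port}],
                "resources": {
                    "requests": {"cpu": cpu, "memory": memory},  # used for scheduling
                    "limits": {"cpu": cpu, "memory": memory},    # enforced at runtime
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_manifest("web", "nginx:1.25"), indent=2))
```

Saved as a hypothetical make_pod.py, the script could be run as python make_pod.py > pod.json, followed by kubectl apply -f pod.json.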

Simplify Kubernetes Complexity with Plural

Managing K8s environments at scale is a significant challenge. From navigating heterogeneous environments to addressing a global skills gap in Kubernetes expertise, organizations face complexity that can slow innovation and disrupt operations.

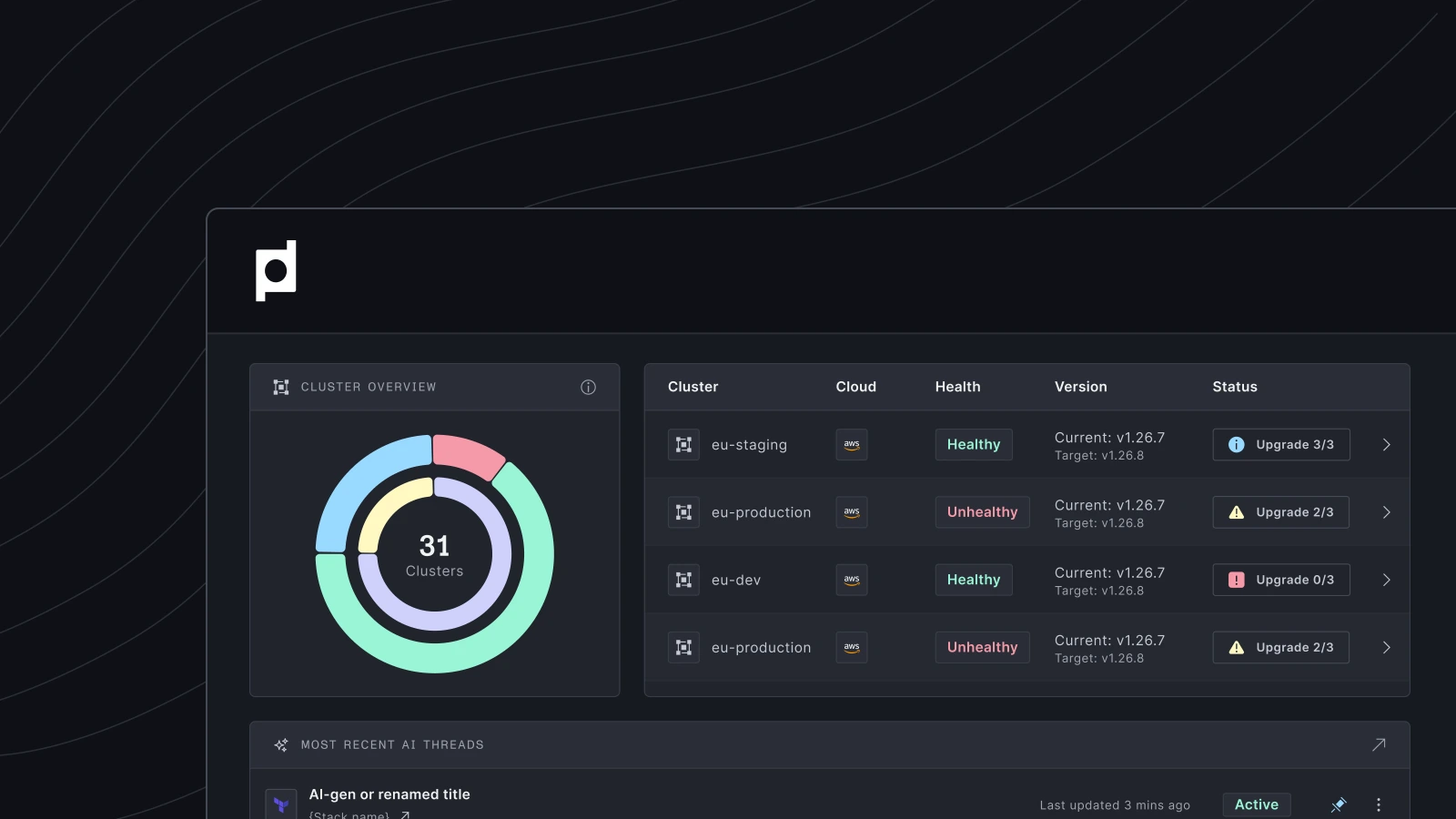

Plural helps teams run multi-cluster, complex K8s environments at scale by combining an intuitive, single pane of glass interface with advanced AI troubleshooting capabilities that leverage a unique vantage point into your Kubernetes environment.

Monitor your entire environment from a single dashboard

Stay on top of your environment's clusters, workloads, and resources in one place. Gain real-time visibility into cluster health, status, and resource usage. Maintain control and consistency across clusters.

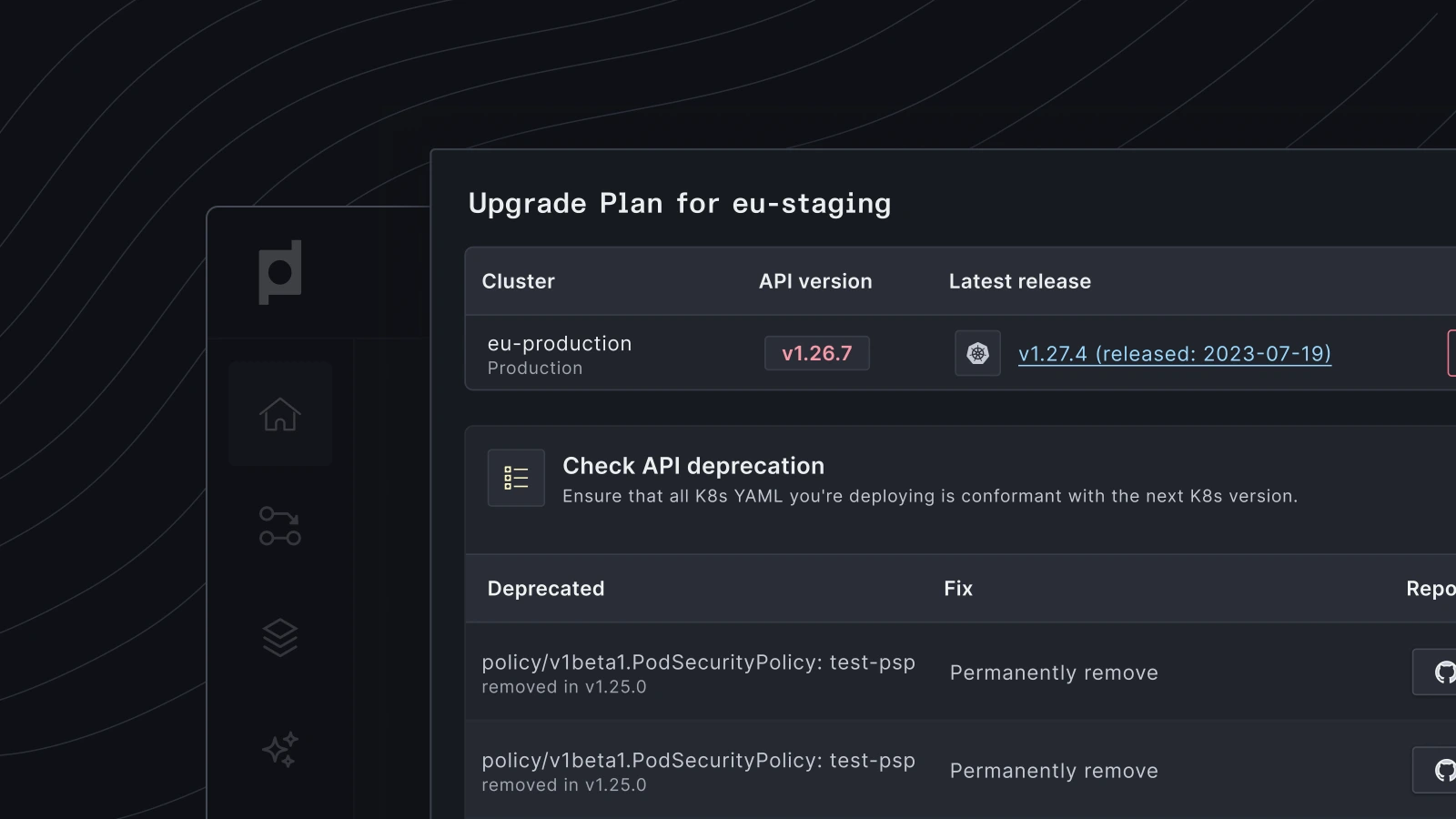

Manage and de-risk complex deployments and upgrades

Reduce the risks associated with deployments, maintenance, and upgrades by combining automated workflows with the flexibility of built-in Helm charts.

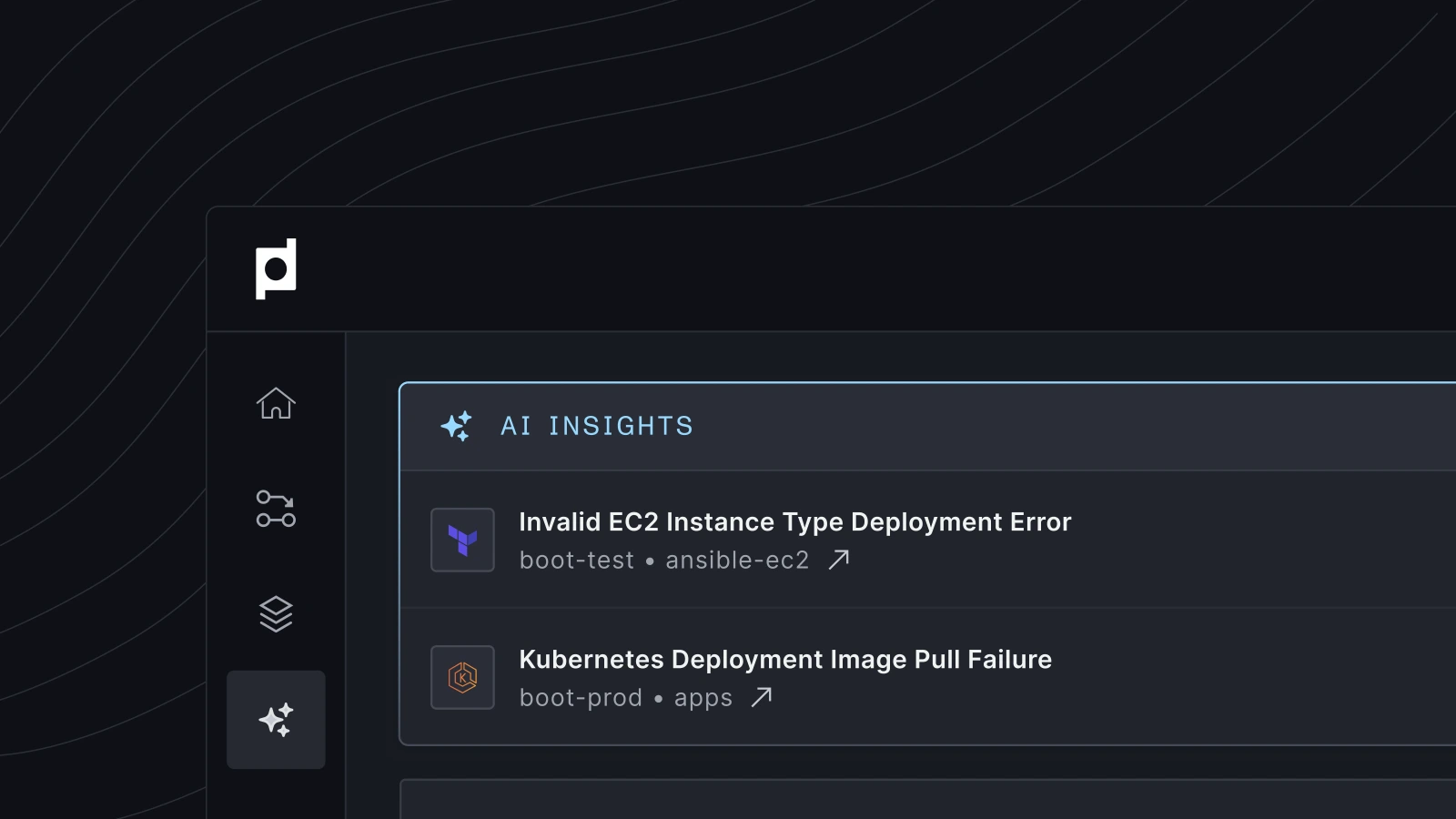

Solve complex operations issues with the help of AI

Identify, understand, and resolve complex issues across your environment with AI-powered diagnostics. Save valuable time spent on root cause analysis and reduce the need for manual intervention.

Frequently Asked Questions

How does Plural differ from other Kubernetes management platforms?

Plural distinguishes itself through its agent-based architecture, which enhances security and scalability. The management cluster doesn't require direct access to workload clusters, simplifying network configurations and reducing security risks. Plural also emphasizes GitOps principles, ensuring all changes are tracked and auditable. The platform's extensibility allows it to support various tools and frameworks, giving you flexibility in managing your Kubernetes infrastructure.

What are the key benefits of using Plural for Kubernetes deployments?

Plural streamlines Kubernetes deployments through automation and a unified workflow. It simplifies complex tasks like managing updates, enforcing security policies, and handling infrastructure as code. Its self-service provisioning capabilities let developers quickly spin up new environments while adhering to organizational standards. This reduces operational overhead, accelerates development cycles, and improves infrastructure reliability.

How does Plural's self-service provisioning work?

Plural's self-service provisioning leverages GitOps and pull request (PR) automation. Developers use a simple UI to request new resources, automatically generating Infrastructure as Code (IaC) configurations. These configurations are then submitted as a pull request, allowing for review and approval before deployment. This process ensures compliance with organizational policies and best practices while empowering developers with rapid provisioning capabilities.

Can Plural manage Kubernetes clusters across multiple cloud providers or on-premises environments?

Yes, Plural's agent-based architecture enables management of Kubernetes clusters regardless of their location. Whether your clusters reside on AWS, Azure, GCP, on-premises, or even on a local machine, Plural can manage them through a single pane of glass. This simplifies multi-cloud and hybrid-cloud Kubernetes management.

What level of Kubernetes expertise is required to use Plural effectively?

Plural is designed to be accessible to engineers of all experience levels. While deep Kubernetes knowledge is beneficial, the platform's intuitive interface and automation features simplify many complex tasks. The AI-powered troubleshooting tools further assist users in resolving issues quickly and efficiently, reducing the reliance on specialized Kubernetes expertise.
