What Is a CSI Driver? How It Works in Kubernetes

Learn what a CSI driver is, how it works in Kubernetes, and how to manage storage with dynamic provisioning, snapshots, and other advanced features in your clusters.

Before the Container Storage Interface (CSI), storage options in Kubernetes were limited, and their development was tied directly to the Kubernetes release cycle. This in-tree model was inflexible and created a bottleneck for innovation. CSI removed that bottleneck by moving storage drivers out of the Kubernetes codebase.

So, what is a CSI driver? It is a plugin that implements CSI's standardized API, which decouples storage from the orchestrator and opens Kubernetes to a vast ecosystem of storage providers. Vendors can develop and update their drivers independently, so you can choose the best storage for your workload without being locked into a specific vendor or waiting for a new Kubernetes version.

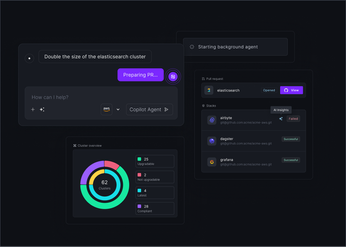

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key takeaways:

- Standardize storage operations with CSI: CSI drivers use a standard API to separate storage systems from the Kubernetes core. This lets you use any compliant storage provider (from cloud block storage to on-prem SANs) and manage them with a consistent workflow, avoiding vendor lock-in.

- Enable self-service storage provisioning: Use StorageClass objects to define storage templates for your developers. They can then request storage on demand by creating a PersistentVolumeClaim (PVC), which triggers the CSI driver to automatically provision a PersistentVolume (PV) without any manual steps.

- Manage drivers as critical infrastructure: CSI drivers require active lifecycle management. Establish a clear process for updates, secure them with RBAC, and monitor their performance. A centralized platform like Plural simplifies this by managing driver configurations and providing a single dashboard to troubleshoot storage issues across your entire fleet.

What Is a CSI Driver?

A Container Storage Interface (CSI) driver is a standardized, out-of-tree storage plugin that lets vendors integrate their storage systems with Kubernetes without embedding vendor-specific code in the core project. It exposes a common set of gRPC APIs that handle the full lifecycle of a storage volume—provisioning, attaching, mounting, and deletion—so Kubernetes can work with any compliant storage backend. This decoupling is essential for modern platforms: vendors can ship updates independently of Kubernetes releases, and platform teams can adopt or switch storage systems without being tied to upstream timelines.
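To make that API surface concrete, the Python sketch below groups a subset of the RPCs defined by the CSI specification into its three gRPC services: Identity, Controller, and Node. The lists are illustrative rather than complete, some calls (such as ControllerPublishVolume and NodeStageVolume) are optional capabilities a driver may not implement, and the `service_for` helper is hypothetical, written only to show which plugin answers which call.

```python
# Partial map of CSI gRPC services to some of the RPCs they define.
# Illustrative subset only; the CSI spec lists every RPC and its fields.
CSI_SERVICES = {
    # Served by both plugins so the caller can identify the driver.
    "Identity": ["GetPluginInfo", "GetPluginCapabilities", "Probe"],
    # Cluster-wide operations served by the Controller Plugin.
    "Controller": [
        "CreateVolume",
        "DeleteVolume",
        "ControllerPublishVolume",    # attach to a node (optional capability)
        "ControllerUnpublishVolume",  # detach from a node
        "CreateSnapshot",
        "DeleteSnapshot",
        "ControllerExpandVolume",
        "ControllerGetCapabilities",
    ],
    # Node-local operations served by the Node Plugin on each worker.
    "Node": [
        "NodeStageVolume",      # mount to a node-global path (optional)
        "NodeUnstageVolume",
        "NodePublishVolume",    # make the volume visible to one pod
        "NodeUnpublishVolume",
        "NodeExpandVolume",
        "NodeGetCapabilities",
        "NodeGetInfo",
    ],
}


def service_for(rpc: str) -> str:
    """Return the CSI service that defines `rpc` (hypothetical helper)."""
    for service, rpcs in CSI_SERVICES.items():
        if rpc in rpcs:
            return service
    raise KeyError(f"unknown CSI RPC: {rpc}")


# Typical order of calls when a volume is provisioned and then used by a pod.
LIFECYCLE = ["CreateVolume", "ControllerPublishVolume",
             "NodeStageVolume", "NodePublishVolume"]

if __name__ == "__main__":
    for rpc in LIFECYCLE:
        print(f"{rpc:<26} -> {service_for(rpc)}")
```

Teardown runs the mirror image: NodeUnpublishVolume, NodeUnstageVolume, ControllerUnpublishVolume, and finally DeleteVolume.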

The Container Storage Interface Explained

CSI defines how container orchestrators request and consume block and file storage. Before CSI, Kubernetes relied on in-tree plugins compiled directly into the codebase. Adding support for a new storage system—or even fixing issues in an existing one—required changes to Kubernetes itself and had to wait for the next release cycle. CSI solved this by moving drivers out-of-tree. Vendors now deliver drivers as standalone components that can be developed, tested, and rolled out independently. For teams building on Kubernetes, that means faster access to new storage features and the ability to standardize storage operations across diverse infrastructure.

Key Components of a CSI Driver

A CSI driver usually runs as two sets of pods inside your cluster, each pairing the driver's own container with helper sidecar containers:

Controller Plugin

Deployed as a central controller (typically a Deployment or StatefulSet), the Controller Plugin manages cluster-wide operations such as creating and deleting volumes, attaching volumes to nodes or detaching them, and handling snapshots. These actions don’t depend on node locality, so they are handled centrally for the whole cluster, usually by a single active replica.

Node Plugin

Deployed as a DaemonSet on every worker node, the Node Plugin handles node-specific tasks like mounting and unmounting volumes to the host filesystem so pods can consume them. It ensures the storage is available at the exact path Kubernetes expects on each node.

CSI Drivers vs. In-Tree Storage Plugins

The key distinction is coupling. In-tree plugins lived inside the Kubernetes source tree and were tightly tied to its release cycle, making them slower to iterate, harder to test, and riskier—bugs in a storage driver could directly impact kubelet stability. CSI drivers are fully decoupled. Vendors maintain their own drivers, release patches at their own pace, and ship features without waiting for upstream Kubernetes. This model is more secure, modular, and scalable, and it enables a much broader storage ecosystem—one that platforms like Plural can manage consistently across clusters.

How CSI Drivers Work in Kubernetes

CSI drivers are built with a modular architecture that cleanly separates cluster-level and node-level responsibilities. This design lets Kubernetes orchestrate storage operations consistently while allowing vendors to implement only the logic specific to their backend. A CSI driver is composed of two main plugins, the Controller Plugin and the Node Plugin, along with a set of sidecar containers, maintained by the Kubernetes CSI project, that translate Kubernetes API events into CSI gRPC calls.

Controller Plugin

The Controller Plugin performs all cluster-wide storage operations. It provisions, deletes, attaches, detaches, and snapshots volumes by communicating with the storage provider’s control plane API. Because these operations don’t depend on the state of any individual node, the Controller Plugin typically runs as a Deployment or StatefulSet. When a PersistentVolumeClaim is created for a StorageClass that uses the CSI driver, the external-provisioner sidecar detects the request and issues a CreateVolume gRPC call to the Controller Plugin, which then creates the volume through the backend storage system.
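The CSI specification requires CreateVolume to be idempotent: when a sidecar retries a call with the same volume name (the external-provisioner derives it from the PVC, by default `pvc-<PVC UID>`), the driver must return the existing volume instead of creating a second one. The sketch below models that contract with an in-memory dictionary standing in for the storage backend. `FakeController` and its fields are hypothetical, and the compatibility check is simplified to an exact size match.

```python
import uuid
from dataclasses import dataclass, field


class AlreadyExists(Exception):
    """Stands in for the gRPC ALREADY_EXISTS status a real driver returns."""


@dataclass
class Volume:
    volume_id: str
    capacity_bytes: int


@dataclass
class FakeController:
    """Hypothetical in-memory controller; a dict replaces the storage backend."""
    volumes: dict = field(default_factory=dict)  # volume name -> Volume

    def create_volume(self, name: str, required_bytes: int) -> Volume:
        existing = self.volumes.get(name)
        if existing is not None:
            # Retry of an earlier call: return the same volume, create nothing.
            # (Simplified: only an exact size match counts as compatible.)
            if existing.capacity_bytes == required_bytes:
                return existing
            # Same name with an incompatible request is an error.
            raise AlreadyExists(name)
        volume = Volume(volume_id=f"vol-{uuid.uuid4()}", capacity_bytes=required_bytes)
        self.volumes[name] = volume
        return volume

    def delete_volume(self, volume_id: str) -> None:
        # DeleteVolume is idempotent as well: unknown IDs are not an error.
        for name, volume in list(self.volumes.items()):
            if volume.volume_id == volume_id:
                del self.volumes[name]


if __name__ == "__main__":
    ctrl = FakeController()
    first = ctrl.create_volume("pvc-1234", 10 * 2**30)
    retry = ctrl.create_volume("pvc-1234", 10 * 2**30)  # e.g. after a timeout
    print(first.volume_id == retry.volume_id, len(ctrl.volumes))  # prints: True 1
```

Idempotency matters because sidecars retry on timeouts; without it, a slow backend could leak duplicate volumes.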

Node Plugin

The Node Plugin handles node-specific operations and must run on every worker node, so it is deployed as a DaemonSet. When a pod that requires a volume is scheduled to a node, the kubelet on that node invokes CSI gRPC calls on the locally running Node Plugin. For drivers that support staging, the plugin first stages the volume on the node, for example by formatting a device and mounting it to a node-global path, and then publishes it by bind-mounting it into the pod's volume directory. This is what makes the volume accessible to applications inside containers.
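The two-step stage/publish flow can be modeled as plain bookkeeping: NodeStageVolume runs once per volume per node, and NodePublishVolume runs once per pod that uses the volume. This is a pure-Python simulation that performs no real mounts; `FakeNodePlugin` and the short paths are hypothetical, and real kubelet paths under `/var/lib/kubelet` are longer and vary by version.

```python
from dataclasses import dataclass, field


@dataclass
class FakeNodePlugin:
    """Hypothetical Node Plugin bookkeeping; no real mounts are performed."""
    staged: dict = field(default_factory=dict)     # volume_id -> staging path
    published: dict = field(default_factory=dict)  # target path -> volume_id

    def node_stage_volume(self, volume_id: str, staging_path: str) -> None:
        # Once per volume per node. A real driver would format the device
        # if needed and mount it at staging_path. Repeating is a no-op.
        self.staged.setdefault(volume_id, staging_path)

    def node_publish_volume(self, volume_id: str, target_path: str) -> None:
        # Once per pod. A real driver would bind-mount the staging path here.
        if volume_id not in self.staged:
            raise RuntimeError(f"{volume_id} must be staged before publishing")
        self.published[target_path] = volume_id

    def node_unpublish_volume(self, target_path: str) -> None:
        self.published.pop(target_path, None)

    def node_unstage_volume(self, volume_id: str) -> None:
        # The kubelet unpublishes every pod mount before unstaging;
        # this check only makes that ordering visible.
        if volume_id in self.published.values():
            raise RuntimeError(f"{volume_id} is still published to a pod")
        self.staged.pop(volume_id, None)


if __name__ == "__main__":
    node = FakeNodePlugin()
    node.node_stage_volume("vol-1", "/staging/vol-1")
    # Two pods on the same node (when the access mode allows it)
    # share one staged mount but get separate published paths.
    node.node_publish_volume("vol-1", "/pods/pod-a/data")
    node.node_publish_volume("vol-1", "/pods/pod-b/data")
    print(len(node.staged), len(node.published))
```

Splitting staging from publishing means expensive work such as formatting happens once per node, while each pod only pays for a cheap bind mount.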

CSI Communication Workflow

Kubernetes interacts with CSI drivers through standardized sidecar containers, which watch the Kubernetes API and perform the required gRPC calls. The external-provisioner handles CreateVolume and DeleteVolume. The external-attacher manages ControllerPublishVolume and ControllerUnpublishVolume for attach/detach workflows. Additional sidecars handle node registration, resizing, snapshots, and more. All communication uses the CSI gRPC API, usually exposed over a Unix Domain Socket.

By standardizing these interactions, Kubernetes avoids embedding vendor-specific logic in its binaries. Any compliant storage system can plug into the cluster, and platforms like Plural can manage these drivers consistently across large fleets.
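The sidecar responsibilities described in this section can be summarized as data. The dictionary below is a simplified reference of commonly used kubernetes-csi sidecars, the Kubernetes object each one watches, and the CSI calls it issues. A given driver may ship only some of them, and the socket path mentioned in the comment is a common convention rather than a requirement; `sidecars_issuing` is a hypothetical helper.

```python
# Simplified reference map of common kubernetes-csi sidecars. Each watches a
# Kubernetes API object and issues CSI gRPC calls to the driver container over
# a Unix domain socket shared through a volume (often something like
# /csi/csi.sock inside the pod; the exact path is a deployment choice).
SIDECARS = {
    "external-provisioner": {
        "watches": "PersistentVolumeClaim",
        "rpcs": ["CreateVolume", "DeleteVolume"],
    },
    "external-attacher": {
        "watches": "VolumeAttachment",
        "rpcs": ["ControllerPublishVolume", "ControllerUnpublishVolume"],
    },
    "external-resizer": {
        "watches": "PersistentVolumeClaim",
        "rpcs": ["ControllerExpandVolume"],
    },
    # Sidecar from the external-snapshotter project.
    "csi-snapshotter": {
        "watches": "VolumeSnapshotContent",
        "rpcs": ["CreateSnapshot", "DeleteSnapshot"],
    },
    # Runs next to the Node Plugin and registers the driver with the kubelet;
    # the kubelet itself then issues the Node* calls (stage, publish, ...).
    "node-driver-registrar": {"watches": None, "rpcs": []},
    # Exposes a health endpoint backed by the CSI Probe call.
    "livenessprobe": {"watches": None, "rpcs": ["Probe"]},
}


def sidecars_issuing(rpc: str) -> list:
    """Return the sidecars that send a given CSI RPC to the driver."""
    return [name for name, info in SIDECARS.items() if rpc in info["rpcs"]]


if __name__ == "__main__":
    for rpc in ("CreateVolume", "ControllerPublishVolume", "NodePublishVolume"):
        print(rpc, "->", sidecars_issuing(rpc) or ["kubelet (no sidecar)"])
```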

Key Features of CSI Drivers

CSI introduces a consistent, extensible set of storage capabilities that simplify how Kubernetes manages stateful workloads. These features let platform teams automate provisioning, expose advanced data operations, and deliver the exact storage characteristics applications need—without manual intervention or custom integrations.

Dynamic Volume Provisioning

CSI enables Kubernetes to create PersistentVolumes automatically when developers submit a PersistentVolumeClaim. The driver communicates with the storage backend using parameters defined in the StorageClass, provisioning volumes on demand. This removes the need for manual PV management, scales cleanly across large environments, and supports true developer self-service. For multi-cluster platforms, dynamic provisioning ensures predictable, uniform behavior across every deployment.
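As a rough sketch of how StorageClass parameters reach the backend, the function below assembles a dictionary shaped loosely like a CSI CreateVolumeRequest from a StorageClass and a PVC. The real external-provisioner also handles topology, secrets, and access-mode mapping. The quantity parser covers only simple integer quantities, and the driver name and the `type`/`iops` parameters are hypothetical placeholders for whatever your vendor documents.

```python
import re

# Suffixes accepted by this simplified parser (binary and decimal SI).
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}
_RESERVED_PREFIX = "csi.storage.k8s.io/"


def quantity_to_bytes(quantity: str) -> int:
    """Convert an integer quantity such as '20Gi' or '500M' to bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti|k|M|G|T)?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * _SUFFIXES.get(suffix, 1)


def build_create_volume_request(storage_class: dict, pvc: dict) -> dict:
    """Approximate the CreateVolume request sent for a new PVC (simplified)."""
    size = pvc["spec"]["resources"]["requests"]["storage"]
    params = {
        key: value
        for key, value in storage_class.get("parameters", {}).items()
        # Reserved keys (secrets, fstype) are consumed by the sidecar,
        # not forwarded to the driver as opaque parameters.
        if not key.startswith(_RESERVED_PREFIX)
    }
    return {
        # The external-provisioner names volumes "pvc-<PVC UID>" by default.
        "name": f"pvc-{pvc['metadata']['uid']}",
        "capacity_range": {"required_bytes": quantity_to_bytes(size)},
        "parameters": params,
    }


if __name__ == "__main__":
    sc = {
        "provisioner": "block.csi.example.com",  # hypothetical driver
        "parameters": {"type": "ssd", "iops": "3000",
                       "csi.storage.k8s.io/fstype": "ext4"},
    }
    pvc = {
        "metadata": {"uid": "3f2a"},  # shortened, hypothetical UID
        "spec": {"resources": {"requests": {"storage": "20Gi"}}},
    }
    print(build_create_volume_request(sc, pvc))
```

The driver never sees the PVC itself, only an opaque parameter map and a size, which is what keeps CSI independent of Kubernetes object types.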

Snapshots and Clones

CSI exposes snapshotting and cloning as first-class operations through the Kubernetes API. Snapshots capture point-in-time states of a volume, making them useful for backup, disaster recovery, and safe rollbacks. Clones create new volumes from existing ones, enabling teams to replicate production datasets quickly for development or testing. These capabilities are essential for building resilient stateful applications and can be integrated directly into automated workflows.
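Snapshots, restores, and clones each map onto a small manifest. This Python sketch builds them as dictionaries that can be serialized to JSON and piped to `kubectl apply -f -`. It assumes the snapshot CRDs and snapshot controller are installed and that a VolumeSnapshotClass exists for the driver; the names `db-data`, `csi-snapclass`, and `fast-ssd` are hypothetical.

```python
import json


def volume_snapshot(name: str, pvc_name: str, snapshot_class: str) -> dict:
    """Point-in-time snapshot of an existing PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


def pvc_from_source(name: str, storage_class: str, size: str, source: dict) -> dict:
    """New PVC pre-populated from a snapshot or from an existing PVC (clone)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            "dataSource": source,
        },
    }


snap = volume_snapshot("db-data-snap", "db-data", "csi-snapclass")
# Restore: a new volume populated from the snapshot.
restored = pvc_from_source(
    "db-data-restored", "fast-ssd", "20Gi",
    {"name": "db-data-snap", "kind": "VolumeSnapshot",
     "apiGroup": "snapshot.storage.k8s.io"},
)
# Clone: the source is the PVC itself; it must be in the same namespace.
clone = pvc_from_source(
    "db-data-clone", "fast-ssd", "20Gi",
    {"name": "db-data", "kind": "PersistentVolumeClaim"},
)

if __name__ == "__main__":
    manifests = {"apiVersion": "v1", "kind": "List", "items": [snap, restored, clone]}
    print(json.dumps(manifests, indent=2))
```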

Volume Expansion and Raw Block Access

CSI supports volume expansion: raising the requested size on a PVC triggers the resize operation, and the driver handles the underlying changes. Drivers that support online expansion can grow a volume while it is in use, without downtime. For latency-sensitive or high-throughput workloads such as databases, CSI also supports raw block volumes. These volumes are exposed to pods as devices without a filesystem, letting the application manage I/O directly for more consistent performance.
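Expansion is requested by raising `spec.resources.requests.storage` on the PVC. It only works when the StorageClass sets `allowVolumeExpansion: true` and the driver supports resizing, and Kubernetes does not support shrinking a claim. The hypothetical helper below checks both conditions up front and returns a JSON merge patch; the parser handles only binary suffixes such as `Gi`.

```python
import json
import re

_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse binary-suffixed quantities like '10Gi' (simplified)."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    return int(match.group(1)) * _BINARY[match.group(2)]


def expansion_patch(storage_class: dict, current: str, requested: str) -> str:
    """Return a JSON merge patch that grows a PVC, or raise if not allowed."""
    if not storage_class.get("allowVolumeExpansion", False):
        raise ValueError("StorageClass does not allow volume expansion")
    if to_bytes(requested) <= to_bytes(current):
        raise ValueError("the new size must be larger; shrinking is not supported")
    patch = {"spec": {"resources": {"requests": {"storage": requested}}}}
    return json.dumps(patch)


if __name__ == "__main__":
    sc = {"metadata": {"name": "fast-ssd"}, "allowVolumeExpansion": True}
    print(expansion_patch(sc, "20Gi", "50Gi"))
```

The printed patch can be applied with `kubectl patch pvc <name> --type merge -p '<patch>'`; the driver then performs ControllerExpandVolume and, if needed, NodeExpandVolume to grow the filesystem.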

Access to Advanced Storage Features

Because CSI acts as a standardized interface, vendors can expose their unique capabilities through the same Kubernetes primitives. Features like encryption, replication, Quality of Service controls, or other hardware-specific optimizations can all be surfaced through a CSI driver. This lets platform teams adopt diverse storage technologies without building bespoke integrations or tying their workflows to a single vendor. With tools like Plural, these storage policies can be managed declaratively and applied consistently across entire fleets.

The Benefits of Using CSI Drivers

The CSI model replaces Kubernetes’ legacy in-tree storage plugins with a standardized, decoupled interface that gives platform teams more flexibility, security, and performance. Because CSI drivers ship independently of Kubernetes releases, vendors can iterate faster, and engineering teams gain access to a broader, more modern storage ecosystem. This standardization makes it far easier to operate consistent storage workflows across cloud providers, bare-metal clusters, and hybrid environments.

Standardize Storage Across Vendors

CSI removes the need to modify Kubernetes itself to support new storage systems. Any vendor can build a CSI-compliant driver, and Kubernetes interacts with all of them using the same primitives—PersistentVolumeClaims, PersistentVolumes, and StorageClasses. This creates a uniform operational model across disparate providers and avoids vendor lock-in. Whether you're consuming block storage from a cloud provider or an on-prem file system, the workflow is the same. For platform teams, this consistency dramatically simplifies multi-cluster and multi-cloud management—especially when orchestrating fleets with tools like Plural.

Strengthen Your Security Model

CSI works within Kubernetes’ existing security controls. RBAC can limit who can create, delete, or snapshot volumes; backend credentials are stored in Kubernetes Secrets that the CSI sidecars pass to the driver only for the calls that need them; and the sidecars talk to the driver over a local Unix domain socket rather than a network endpoint. Together, these controls help ensure storage actions are performed only by authorized workloads and users and support least-privilege defaults. These capabilities are particularly important in multi-tenant environments where storage isolation and compliance requirements are strict.
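One concrete piece of this model: a StorageClass can point the CSI sidecars at backend credentials through reserved `csi.storage.k8s.io/*-secret-name` and `*-secret-namespace` parameters, so the credentials themselves live in a Secret rather than in the StorageClass. The sketch builds such a StorageClass. The provisioner, secret name, namespace, and `type` parameter are hypothetical, and which secrets a driver actually needs depends on that driver.

```python
import json

_PREFIX = "csi.storage.k8s.io"

# Which CSI call each reserved secret parameter is passed to.
_SECRET_OPS = {
    "provisioner": "CreateVolume / DeleteVolume",
    "controller-publish": "ControllerPublishVolume",
    "node-stage": "NodeStageVolume",
    "controller-expand": "ControllerExpandVolume",
}


def storage_class_with_secrets(name: str, provisioner: str,
                               secret_name: str, secret_namespace: str) -> dict:
    """StorageClass that references backend credentials instead of embedding them."""
    params = {"type": "ssd"}  # hypothetical driver-specific parameter
    for op in _SECRET_OPS:
        params[f"{_PREFIX}/{op}-secret-name"] = secret_name
        params[f"{_PREFIX}/{op}-secret-namespace"] = secret_namespace
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "parameters": params,
    }


if __name__ == "__main__":
    sc = storage_class_with_secrets(
        "secure-ssd", "block.csi.example.com", "backend-creds", "storage-system"
    )
    print(json.dumps(sc, indent=2))
```

Keeping the Secret in a dedicated namespace means only the driver's service accounts need RBAC permission to read it.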

Improve Performance and Scalability

Vendor-maintained CSI drivers are built for their specific storage system and can ship backend optimizations and fixes without waiting for a Kubernetes release, which generic in-tree plugins could not do. Dynamic provisioning is central to this scalability story: instead of pre-allocating volumes, developers request storage via a PVC, and the CSI driver provisions it on demand. This eliminates manual intervention, reduces operational overhead, and lets storage grow along with workloads. Combined with features like volume expansion and raw block volumes, CSI supports both general-purpose applications and high-performance stateful workloads.

Supported Storage Types

CSI is intentionally storage-agnostic, allowing Kubernetes to work with a broad range of storage backends without modifying core components. Any system that implements the CSI specification can integrate seamlessly, giving platform teams flexibility to choose the right storage abstraction—block, file, or object—for each workload. This portability is crucial for operating consistent environments across on-prem infrastructure, cloud providers, and hybrid deployments.

Block Storage

Block storage exposes volumes as raw block devices and is well-suited for low-latency, high-performance workloads such as databases and transactional systems. CSI drivers manage the full lifecycle of these volumes—provisioning, attaching, detaching, and deleting—regardless of whether the backend is a traditional SAN or a software-defined storage platform. Kubernetes treats CSI-provisioned block devices as persistent disks, ensuring data durability even when pods move between nodes. This abstraction allows developers to run demanding stateful applications on Kubernetes without needing intimate knowledge of the underlying hardware.
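For workloads that want the raw device, the PVC sets `volumeMode: Block` and the pod consumes it through `volumeDevices` with a `devicePath`, instead of `volumeMounts`. The sketch below builds both objects as dictionaries; the names, image, device path, and `fast-ssd` StorageClass are hypothetical, and the driver must support block volumes.

```python
import json


def block_pvc(name: str, storage_class: str, size: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "volumeMode": "Block",  # raw device; the default is "Filesystem"
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_with_block_device(name: str, claim: str, device_path: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "db",
                "image": "registry.example.com/db:1.0",  # hypothetical image
                # volumeDevices (not volumeMounts) exposes a device node.
                "volumeDevices": [{"name": "data", "devicePath": device_path}],
            }],
            "volumes": [{"name": "data",
                         "persistentVolumeClaim": {"claimName": claim}}],
        },
    }


pvc = block_pvc("db-raw", "fast-ssd", "100Gi")
pod = pod_with_block_device("db", "db-raw", "/dev/xvda")

if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pvc, pod]}, indent=2))
```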

File Storage

File storage provides a shared filesystem accessible over the network, making it a natural fit for workloads that require concurrent reads and writes from multiple pods. Content platforms, web tiers, and shared caching layers often depend on this pattern. CSI standardizes how Kubernetes interacts with file services, whether NFS servers, on-prem NAS appliances, or cloud-native offerings. By exposing these systems through CSI, Kubernetes can support the ReadWriteMany access mode and streamline integration across diverse environments, including managed Kubernetes services like AKS or GKE.

Object Storage

Object storage stores data as objects with metadata and an identifier, prioritizing scale and durability over POSIX semantics. The CSI specification itself models block and file volumes, so object storage reaches Kubernetes workloads in two main ways. Some CSI drivers mount a bucket into pods as a FUSE-based filesystem. Alternatively, automation can provision buckets, manage lifecycle policies, and inject connection details and credentials into pods (the companion Container Object Storage Interface, COSI, standardizes this pattern), after which applications interact with the object store directly via its API. This pattern works well for backups, archives, dataset storage, and any workload dealing with large volumes of unstructured data.

By providing consistent mechanisms for all three storage types, CSI makes it easier for platforms like Plural to orchestrate heterogeneous storage across clusters while keeping developer workflows uniform.

How to Implement a CSI Driver in Kubernetes

Implementing a CSI driver connects your Kubernetes workloads to your storage backend through a fully declarative workflow. You deploy the driver, define how storage should be provisioned with a StorageClass, and let developers request storage through PersistentVolumeClaims. Kubernetes and the CSI driver handle provisioning, mounting, and lifecycle management, giving platform teams a consistent and policy-driven way to offer storage while keeping developers insulated from backend complexity.

Check Prerequisites and Install the Driver

Most CSI drivers ship two components: a Controller Plugin (usually deployed as a Deployment or StatefulSet) and a Node Plugin (deployed as a DaemonSet). Vendors typically package these as Helm charts or YAML bundles along with the necessary sidecars. Before installing, confirm Kubernetes version compatibility, required node capabilities, and any special permissions.

If you're managing multiple clusters, keeping driver versions aligned can be challenging. Tools like Plural’s Global Services help enforce consistency by letting you define the driver once and replicate its configuration across your entire fleet.

Configure a StorageClass

After installation, define a StorageClass to describe the types of volumes the CSI driver should provision. A StorageClass specifies the CSI provisioner name and parameters such as performance tier, replication level, encryption options, and backend-specific settings. You can offer multiple StorageClasses—fast SSD storage for databases, cheaper tiers for backups, or replicated volumes for critical workloads.

Developers reference the StorageClass name in their PVCs. This abstraction separates intent (“I need 20Gi of fast storage”) from implementation (“this is backed by an SSD pool on a specific backend”), allowing storage systems to evolve without updating application manifests.
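A minimal sketch of offering two tiers from one driver. The `provisioner` field must match the name the driver registers (listed by `kubectl get csidrivers` when the driver creates a CSIDriver object); the driver name `block.csi.example.com` and the `type`/`replication` parameters are hypothetical stand-ins for your vendor's documented values. `volumeBindingMode: WaitForFirstConsumer` delays provisioning until a pod using the claim is scheduled, which lets topology-aware drivers create the volume where the pod runs.

```python
import json

DRIVER = "block.csi.example.com"  # hypothetical driver name


def storage_class(name: str, params: dict, reclaim: str = "Delete") -> dict:
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": DRIVER,
        "parameters": params,          # opaque, driver-specific string values
        "reclaimPolicy": reclaim,      # "Delete" or "Retain"
        "allowVolumeExpansion": True,
        "volumeBindingMode": "WaitForFirstConsumer",
    }


classes = [
    storage_class("fast-ssd", {"type": "ssd", "replication": "3"}),
    # Retain keeps the backend volume after its PVC is deleted.
    storage_class("backup-hdd", {"type": "hdd", "replication": "1"}, reclaim="Retain"),
]

if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": classes}, indent=2))
```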

Set Up PersistentVolumes and PersistentVolumeClaims

With a StorageClass in place, developers create PersistentVolumeClaims requesting size and access mode. When a PVC references your CSI-enabled StorageClass, the external-provisioner sidecar triggers the Controller Plugin to provision a PersistentVolume that matches the request. Kubernetes then binds the PVC to the PV, and your pods mount the claim as a volume.
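On the developer side, the workflow comes down to a claim and a pod that mounts it. This sketch builds both as dictionaries; `app-data`, `fast-ssd`, the image, and the mount path are hypothetical. After the objects are applied, the claim should move to `Bound` once the driver has provisioned the volume (with `WaitForFirstConsumer`, only after the pod is scheduled).

```python
import json


def pvc(name: str, storage_class: str, size: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,  # selects the CSI driver
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_mounting(name: str, claim: str, mount_path: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": "registry.example.com/app:1.0",  # hypothetical image
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            # The pod refers to the claim, never to the PV directly.
            "volumes": [{"name": "data",
                         "persistentVolumeClaim": {"claimName": claim}}],
        },
    }


claim = pvc("app-data", "fast-ssd", "20Gi")
pod = pod_mounting("app", "app-data", "/var/lib/app")

if __name__ == "__main__":
    # Pipe the output into `kubectl apply -f -`.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [claim, pod]}, indent=2))
```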

The PV/PVC model ensures persistence across pod restarts and rescheduling, decoupling application lifecycle from storage lifecycle. Platforms like Plural surface all PVs, PVCs, and related events in a unified dashboard, helping teams troubleshoot provisioning or binding issues across clusters.

Common Challenges with CSI Drivers

CSI standardizes how Kubernetes interacts with storage, but running these drivers in production introduces its own operational challenges. Issues typically arise in three areas: compatibility and configuration, lifecycle management of storage volumes, and monitoring or troubleshooting across the many moving parts that make up the CSI stack. Because storage failures directly impact application availability, platform teams need a disciplined, systematic approach to managing CSI at scale.

Solving Compatibility and Configuration Issues

Each CSI driver implements the CSI specification differently and often has its own configuration model. This flexibility is powerful, but it also puts the burden on platform teams to ensure version alignment across Kubernetes, the driver, and the backend. Even minor mismatches can cause volume creation, attach/detach, or mount operations to fail.

Drivers also introduce backend-specific parameters in StorageClasses, and getting these wrong can break pod startup or degrade data access. In large or multi-cluster environments, inconsistency becomes a real risk. Tools like Plural help mitigate this by centralizing CSI configurations and enforcing uniformity across clusters.

Managing Volume Complexity

As you deploy more stateful workloads, the number of StorageClasses, PVs, and PVCs grows quickly. Every application adds more resources to track, and each volume has its own lifecycle involving provisioning, attachment, expansion, snapshotting, and deletion. Without strong automation, orphaned volumes accumulate, consuming capacity and creating security concerns.

Platform teams often end up stitching together scripts or ad-hoc processes to clean up unused PVs or reconcile inconsistencies. A more scalable approach is to treat CSI management as part of your cluster automation layer, ensuring predictable provisioning and consistent cleanup across the entire fleet.

Monitoring and Troubleshooting

Diagnosing storage issues can be challenging because CSI involves many components: the controller plugin, node plugin, sidecars, kubelet, and the backend storage system. A failed mount might originate in any one of these layers. Effective debugging requires correlating logs, events, and metrics across them all.

A strong observability setup is essential. Centralized log aggregation, metrics for driver health, and visibility into PV/PVC status dramatically reduce troubleshooting time. Platforms like Plural provide a consolidated view across clusters, letting you inspect CSI pods, examine logs, and track PV/PVC bindings from a single dashboard—removing much of the friction typically associated with debugging storage issues in Kubernetes.

Best Practices for Using CSI Drivers

A CSI driver is a core part of your Kubernetes infrastructure, and maintaining it requires continuous attention. Strong testing, security controls, and lifecycle management help ensure storage remains reliable, performant, and safe as your clusters scale. Treating the CSI layer as production-critical infrastructure allows your stateful workloads to run smoothly while minimizing operational risk.

Test and Validate Your Setup

Deploying a CSI driver involves several interconnected components—the controller plugin, node plugin, sidecars, StorageClasses, PVs, and PVCs. Before promoting a driver to production, validate the full workflow in a staging environment. Provision a test StorageClass, issue a PVC, mount it in a simple application, and confirm that data persists through pod restarts and rescheduling. This end-to-end validation helps you catch configuration mistakes early and prevents disruptions caused by misconfigured parameters or backend incompatibilities.

Secure Your Storage with Access Controls

Storage is a sensitive resource, and enforcing strict access controls is essential. Kubernetes RBAC lets you define who can create or modify StorageClasses, PVCs, VolumeSnapshots, and other storage objects, so platform teams can keep sole control over creating or altering StorageClasses. RBAC cannot restrict which StorageClass a PVC uses, so limit developers to approved classes with per-class ResourceQuotas or admission policies. Managing these permissions declaratively is especially important across multi-cluster fleets. Plural supports fleet-wide RBAC synchronization so you can apply consistent policies everywhere and reduce the chance of misconfiguration.
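A sketch of that split, assuming a team namespace `team-a` and a restricted class `premium-ssd` (both hypothetical): a namespaced Role lets developers manage their own PVCs and VolumeSnapshots, a ClusterRole grants read-only access to the cluster-scoped StorageClasses, and a ResourceQuota using the `<class>.storageclass.storage.k8s.io/...` keys blocks claims against the restricted class. The RoleBinding and ClusterRoleBinding that attach these to users or groups are omitted for brevity.

```python
import json

NAMESPACE = "team-a"              # hypothetical team namespace
RESTRICTED_CLASS = "premium-ssd"  # hypothetical class the team may not use

# Namespaced: developers manage their own claims and snapshots.
dev_role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "storage-consumer", "namespace": NAMESPACE},
    "rules": [
        {"apiGroups": [""], "resources": ["persistentvolumeclaims"],
         "verbs": ["get", "list", "watch", "create", "delete"]},
        {"apiGroups": ["snapshot.storage.k8s.io"], "resources": ["volumesnapshots"],
         "verbs": ["get", "list", "watch", "create", "delete"]},
    ],
}

# Cluster-scoped: developers can read StorageClasses but not change them;
# only the platform team's roles include create/update/delete.
storageclass_reader = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "ClusterRole",
    "metadata": {"name": "storageclass-reader"},
    "rules": [
        {"apiGroups": ["storage.k8s.io"], "resources": ["storageclasses"],
         "verbs": ["get", "list", "watch"]},
    ],
}

# RBAC cannot filter on storageClassName, so a per-class quota of zero
# rejects new claims against a class this namespace should not use.
quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "storage-classes", "namespace": NAMESPACE},
    "spec": {"hard": {
        f"{RESTRICTED_CLASS}.storageclass.storage.k8s.io/persistentvolumeclaims": "0",
        f"{RESTRICTED_CLASS}.storageclass.storage.k8s.io/requests.storage": "0",
    }},
}

if __name__ == "__main__":
    items = [dev_role, storageclass_reader, quota]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```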

Manage the Driver Lifecycle and Updates

CSI drivers evolve continuously, and vendors regularly ship updates for new features, bug fixes, and Kubernetes compatibility. Running outdated drivers increases security risk and can block your ability to adopt new volume capabilities. Establish a structured update process that treats the driver like any other application deployed in your clusters. GitOps-driven rollouts—such as those powered by Plural’s Continuous Deployment engine—allow you to store driver configurations in Git, review changes, and roll out updates uniformly across all clusters. This keeps your fleet aligned on validated versions and eliminates drift over time.

How to Monitor and Troubleshoot CSI Drivers

Reliable storage is fundamental for stateful workloads, and a malfunctioning CSI driver can cascade into pod failures, stuck deployments, or data unavailability. Effective monitoring and troubleshooting require visibility into both cluster-level and node-level CSI components, along with a workflow that makes it easy to correlate events, logs, and resource states. While kubectl provides the basic tools, managing storage across many clusters calls for a centralized view. Platforms like Plural aggregate logs and events across your fleet, eliminating the need for multiple kubeconfigs and simplifying access to private or on-prem clusters.

Monitor Key CSI Components

CSI drivers ship with two critical components: the controller plugin (running as a Deployment or StatefulSet) and the node plugin (running as a DaemonSet). To monitor them:

- Use `kubectl describe` on controller and node plugin pods to check readiness, restarts, and recent events.

- Inspect sidecar logs with `kubectl logs`, particularly the external-provisioner, external-attacher, and node plugin containers, for failures in backend API calls or permission issues.

- Review cluster events with `kubectl get events --sort-by='.lastTimestamp'` to surface problems like attach/detach failures, scheduling issues, or missing StorageClass parameters.
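The events check can be narrowed to storage problems by filtering the JSON output of `kubectl get events -A -o json` for Warning events with storage-related reasons. The reason set below covers common ones (`ProvisioningFailed`, `FailedAttachVolume`, `FailedMount`) but is not exhaustive, and `storage_warnings` is a hypothetical helper.

```python
import json
import sys

# Common storage-related event reasons; extend for your drivers.
STORAGE_REASONS = {"ProvisioningFailed", "FailedAttachVolume", "FailedMount"}


def storage_warnings(events: dict) -> list:
    """Return one summary line per storage-related Warning event."""
    lines = []
    for event in events.get("items", []):
        if event.get("type") != "Warning":
            continue
        if event.get("reason") not in STORAGE_REASONS:
            continue
        obj = event.get("involvedObject", {})
        lines.append(
            f"{obj.get('namespace', '')}/{obj.get('kind')}/{obj.get('name')}: "
            f"{event['reason']}: {event.get('message', '').strip()}"
        )
    return lines


if __name__ == "__main__":
    # Usage: kubectl get events -A -o json | python storage_events.py
    for line in storage_warnings(json.load(sys.stdin)):
        print(line)
```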

Centralized dashboards like Plural’s provide the same insights across every cluster, making it far easier to diagnose CSI failures without switching contexts.

Apply Common Troubleshooting Techniques

A PVC stuck in Pending is one of the most common indicators of a CSI problem. Start with:

- Run `kubectl describe pvc <name>` to inspect events for provisioning errors.

- Validate that the PVC references the correct StorageClass and that the StorageClass uses the right CSI provisioner.

- Check the external-provisioner logs for detailed error output, especially if the driver is failing to create volumes on the backend.
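The first two checks can be scripted against the JSON output of `kubectl get pvc -A -o json`, `kubectl get storageclass -o json`, and `kubectl get csidrivers -o json`. The hypothetical helper below flags Pending claims whose StorageClass is missing or whose provisioner has no matching CSIDriver object. Treat its findings as hints: not every driver registers a CSIDriver object, and claims without a `storageClassName` may rely on a default class, which this sketch does not resolve.

```python
import json
import sys


def diagnose_pending(pvcs: dict, classes: dict, drivers: dict) -> list:
    """Explain likely causes for Pending PVCs (simplified heuristics)."""
    provisioner_of = {sc["metadata"]["name"]: sc["provisioner"]
                      for sc in classes.get("items", [])}
    installed = {d["metadata"]["name"] for d in drivers.get("items", [])}
    findings = []
    for pvc in pvcs.get("items", []):
        if pvc.get("status", {}).get("phase") != "Pending":
            continue
        meta = pvc["metadata"]
        ref = f"{meta.get('namespace', '')}/{meta['name']}"
        sc = pvc.get("spec", {}).get("storageClassName")
        if not sc:
            findings.append(f"{ref}: no storageClassName set (default class not checked)")
        elif sc not in provisioner_of:
            findings.append(f"{ref}: StorageClass {sc!r} does not exist")
        elif provisioner_of[sc] not in installed:
            findings.append(f"{ref}: provisioner {provisioner_of[sc]!r} has no CSIDriver object")
        else:
            findings.append(f"{ref}: StorageClass and driver look consistent; "
                            "check external-provisioner logs")
    return findings


if __name__ == "__main__":
    # Usage (file names are arbitrary):
    #   kubectl get pvc -A -o json > pvcs.json
    #   kubectl get storageclass -o json > classes.json
    #   kubectl get csidrivers -o json > drivers.json
    #   python pending_pvcs.py pvcs.json classes.json drivers.json
    if len(sys.argv) != 4:
        sys.exit("usage: pending_pvcs.py PVCS.json CLASSES.json DRIVERS.json")
    loaded = []
    for path in sys.argv[1:4]:
        with open(path) as f:
            loaded.append(json.load(f))
    for finding in diagnose_pending(*loaded):
        print(finding)
```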

If an application cannot mount its volume, the problem may lie on the node. Investigate:

- Node plugin logs for errors in staging or publishing the volume.

- Node readiness, labels, OS versions, or taints that might prevent attachment.

- Backend access issues, such as credentials or endpoint reachability.

These checks typically reveal configuration mismatches or environment inconsistencies that block volume creation or mounting.

Optimize for Performance

Troubleshooting is only part of CSI management—ensuring long-term performance and reliability is equally important. Effective practices include:

- Setting resource requests and limits for CSI pods to avoid noisy-neighbor effects and prevent the driver from overconsuming node resources.

- Testing snapshot creation and restore workflows regularly to validate recovery times and ensure data integrity.

- Managing CSI configuration through a GitOps workflow to enforce consistency and eliminate drift.

- Using infrastructure-as-code tools—such as Plural Stacks with Terraform—to codify backend storage settings and apply them uniformly across clusters.

These proactive steps help maintain predictable performance, reduce incidents, and ensure that your storage layer remains resilient as your Kubernetes footprint grows.

Start Using CSI Drivers Today

Integrating a Container Storage Interface (CSI) driver into your Kubernetes environment is a straightforward process that unlocks consistent and powerful storage management. The first step is to understand that CSI provides a standard API, decoupling storage logic from the core Kubernetes code. This abstraction allows you to use a wide range of storage backends without vendor lock-in, keeping your storage operations portable and future-proof, a key principle behind CSI's graduation to general availability (GA) in Kubernetes.

The installation process involves deploying the driver's components—the controller and node plugins—as pods within your cluster. Once the driver is running, you must configure Kubernetes resources to interact with it by creating a StorageClass. This StorageClass acts as a template for creating storage, defining parameters like performance tiers or replication policies. Developers can then request storage by creating a PersistentVolumeClaim (PVC) that specifies the StorageClass, which triggers the driver to provision a PersistentVolume (PV) automatically. This workflow is central to enabling dynamic provisioning.

With the basic setup complete, you can use more advanced storage functionality. CSI drivers often support volume snapshots and clones, which are critical for backup, recovery, and testing workflows. You can create point-in-time copies of your volumes or provision new volumes pre-populated with data from an existing one. Snapshots are exposed through Kubernetes APIs like VolumeSnapshot and VolumeSnapshotClass, and clones through a PVC's dataSource field, making complex data management tasks declarative and automated.


Frequently Asked Questions

Why should I migrate from legacy in-tree storage plugins to CSI drivers? Migrating to CSI drivers is a strategic move for stability and flexibility. The primary reason is that CSI decouples storage provider logic from the Kubernetes release cycle. This means you can receive critical bug fixes, security patches, and new features from your storage vendor without having to wait for a Kubernetes upgrade. This out-of-tree model also improves cluster stability, as a bug in a CSI driver is isolated to its own pods and is far less likely to crash the kubelet, unlike the in-tree plugins.

Can I use more than one CSI driver in a single Kubernetes cluster? Yes, and it is a common practice in production environments. A single cluster can run multiple CSI drivers simultaneously to serve different storage backends. You differentiate between them using the StorageClass resource. Each StorageClass specifies a provisioner field that points to a specific CSI driver. This allows you to offer various storage tiers, such as a high-performance block storage driver for databases and a separate shared file storage driver for web applications, all within the same cluster.

What happens to my mounted volumes if the CSI driver's controller or node pods fail? For most drivers, existing mounted volumes continue to function without interruption. The CSI driver's pods are part of the control plane for storage operations, not the data path for I/O. Once a volume is mounted into a pod, I/O flows through the node's kernel (for example, a block device or a network filesystem client) to the storage system. A failure in the CSI controller would impact new provisioning or attachment operations, while a failure in a node plugin would affect new mount and unmount operations on that specific node. The main exception is drivers that serve I/O from inside the node plugin pod, such as FUSE-based drivers, where a node plugin restart can break existing mounts.

How do I manage CSI driver updates and configurations across many clusters? Managing CSI drivers at scale is a significant operational challenge, as it requires ensuring that every cluster has the correct driver version and StorageClass configurations. Plural's Global Services feature is designed to solve this. You can define your CSI driver's deployment manifests and related configurations once in a Git repository. Plural will then automatically replicate and enforce that configuration across your entire fleet of clusters, ensuring consistency and simplifying the upgrade process through a controlled GitOps workflow.

How do I decide which CSI driver is right for my workload? The choice of a CSI driver depends entirely on your application's requirements and your underlying storage infrastructure. First, consider the access mode: does your application need exclusive access (ReadWriteOnce) like a database, or shared access from multiple pods (ReadWriteMany) like a CMS? Next, evaluate performance needs, such as IOPS and latency requirements. Finally, consider data management features like snapshots for backups or volume cloning for test environments. Your goal is to match the capabilities of the storage system, exposed through its CSI driver, with the specific needs of your stateful application.
