Mastering EKS: Your Comprehensive Guide to AWS Kubernetes

Master AWS Kubernetes with this comprehensive guide, covering EKS setup, management, and optimization for efficient and scalable container orchestration.

Running containerized applications efficiently requires robust orchestration, and Kubernetes has emerged as the leading platform. However, managing Kubernetes itself can be a significant undertaking. Amazon EKS simplifies this process, providing a managed Kubernetes service on AWS.

This comprehensive guide explores the world of AWS Kubernetes, offering practical insights and actionable steps for managing your EKS deployments. Whether you're a beginner or an experienced Kubernetes user, we'll cover everything from cluster creation and configuration to advanced topics like auto-scaling, cost optimization, and security best practices. Join us as we delve into the key features and benefits of EKS, helping you streamline your containerized workflows on AWS.

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key Takeaways

- Kubernetes automates application management: Focus on building software, not managing servers. EKS simplifies Kubernetes by handling the underlying infrastructure.

- EKS adapts to your needs: From fully managed to self-managed deployments, EKS integrates with AWS services like ECR, Fargate, and CloudWatch to enhance your workflows.

- Optimize EKS for efficiency and resilience: Address resource management, security, CI/CD, and scalability to maximize EKS benefits.

- Enhance EKS with a unified dashboard: Amazon EKS does not include a native, full-featured dashboard for comprehensive cluster monitoring and troubleshooting. Plural enhances EKS management by providing a unified dashboard with real-time visibility into workloads, networking, and logs.

What is Kubernetes and Why Does it Matter?

Kubernetes is open-source software for automating how you deploy, scale, and manage containerized applications. Instead of manually managing individual servers and containers, Kubernetes provides a framework to run applications reliably across a cluster of machines. Kubernetes has become the industry standard for container orchestration, enabling organizations of all sizes to deploy and manage complex applications efficiently.

The power of Kubernetes lies in its ability to streamline the management of containerized applications. By automating routine tasks such as scaling, failover, and deployments, Kubernetes frees up developers and operations teams to focus on building and improving their software. This increased efficiency is essential in today's fast-paced development cycles. Instead of spending time on manual infrastructure management, teams can concentrate on delivering new features and improving the user experience.

Amazon EKS: Simplified Kubernetes Management

Amazon EKS simplifies running Kubernetes, removing the complexities of self-management. As a managed and certified Kubernetes conformant service, EKS lets you run Kubernetes on AWS, on-premises, and at the edge, offering flexibility for diverse deployment needs. Whether you're a seasoned Kubernetes veteran or just starting, EKS makes the management process more accessible. Check out this practical guide by Plural that explores the ins and outs of managed Kubernetes, from core concepts to choosing the right provider.

Key Features and Benefits of Amazon EKS

EKS streamlines Kubernetes operations, providing several key advantages:

- Faster Deployment: EKS automates much of the setup process, accelerating the deployment of your applications and reducing time-to-market.

- Improved Performance & Reliability: With EKS, AWS manages the Kubernetes control plane, running it across multiple Availability Zones and scaling it with load to keep your clusters performant and available.

- Enhanced Security: EKS leverages ephemeral computing resources and integrates them with AWS security services, minimizing security risks and protecting your workloads.

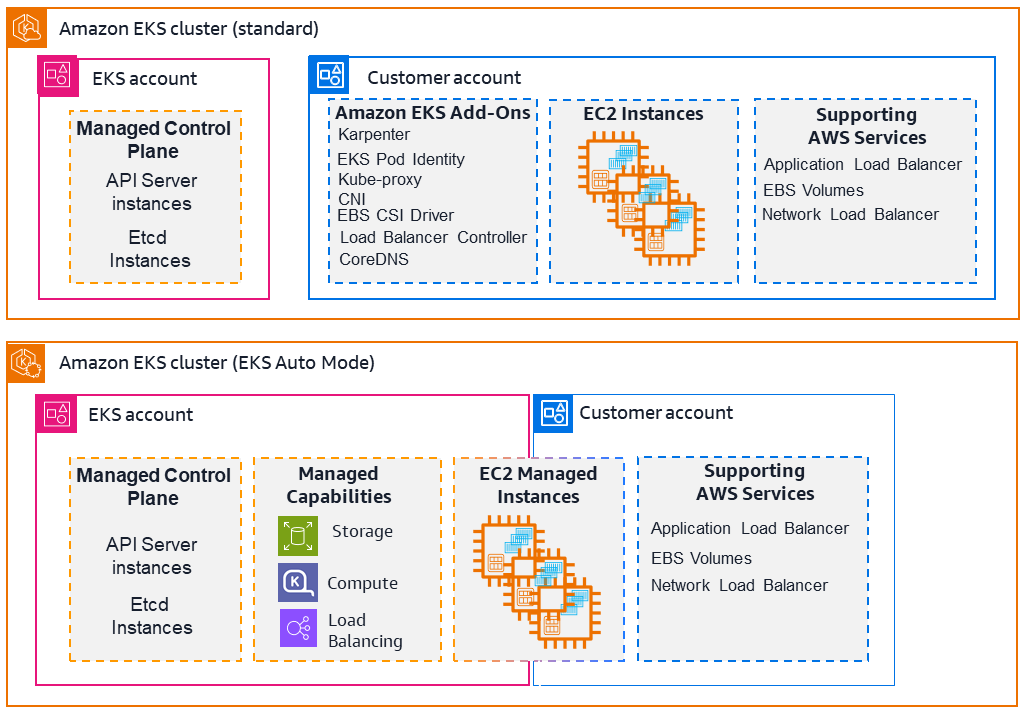

Amazon EKS vs. Self-Managed Kubernetes: Choose the Right Option

AWS offers two primary approaches for running Kubernetes: managing everything yourself on Amazon EC2 for maximum control, or opting for the managed Amazon EKS, where AWS handles the complex aspects. This choice depends on your team's expertise and operational preferences.

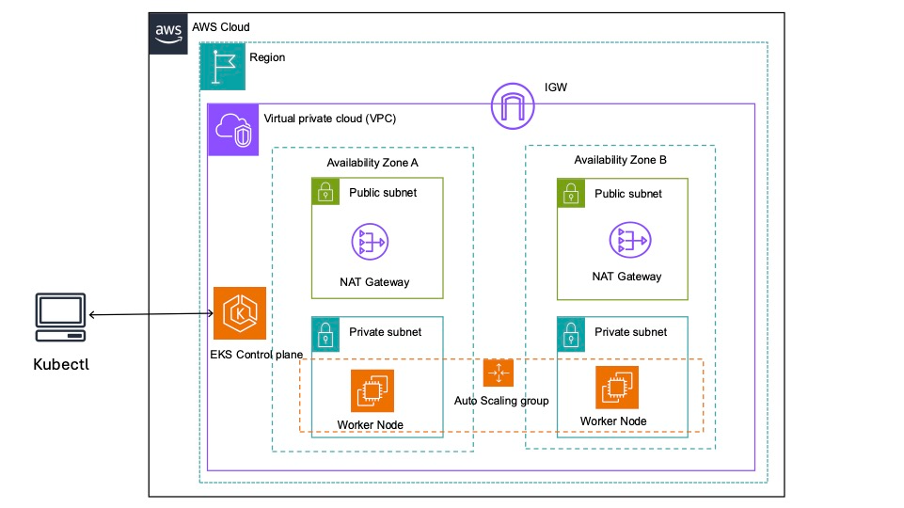

The following diagram illustrates how Amazon EKS integrates your Kubernetes clusters with the AWS cloud, depending on which method of cluster creation you choose:

EKS excels as a fully managed service. AWS takes on the heavy lifting of managing Kubernetes, freeing your team to focus on developing and deploying applications rather than server maintenance. If you're new to containers or have limited resources, consider Amazon ECS for its simplicity. However, if your team is comfortable with Kubernetes and requires greater flexibility, scalability, and control, EKS is the better fit.

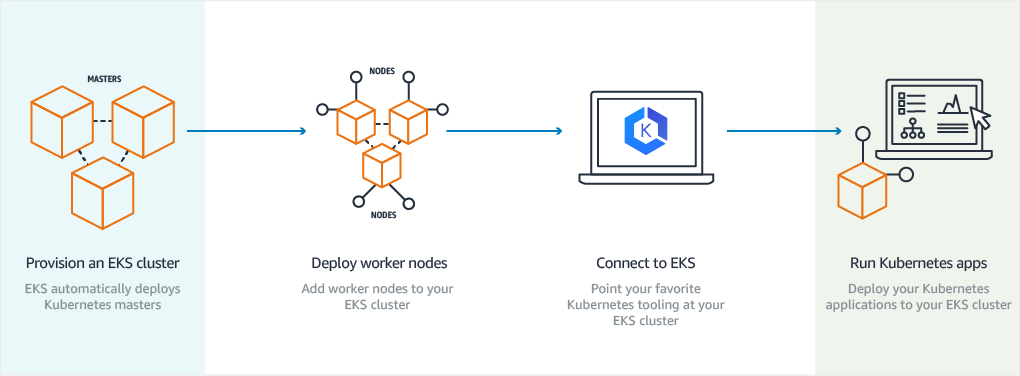

Create Your First EKS Cluster

This section guides you through setting up your initial EKS cluster. We'll cover planning, step-by-step creation, and configuring essential components like node groups and networking.

Prerequisites and Planning

Before diving into cluster creation, lay the groundwork. Since Amazon EKS is a managed service, AWS operates the Kubernetes control plane for you. However, you still need an active AWS account and familiarity with basic AWS concepts like Identity and Access Management (IAM) and Virtual Private Clouds (VPCs). Decide on the Kubernetes version you want and plan your VPC and subnet configuration. Consider factors like desired capacity, availability requirements, and security best practices. Thorough planning makes for a smoother setup.

Create an EKS Cluster Step-by-Step

You have several options to create an EKS cluster: the AWS Management Console, the AWS CLI, or Infrastructure-as-Code tools like AWS CloudFormation and Terraform. The process typically involves defining your cluster's configuration, including the Kubernetes version, VPC settings, and necessary IAM roles for EKS to interact with other AWS services. If you're using the AWS Management Console, the process is relatively straightforward, guiding you through the required settings. With the AWS CLI or Terraform, you'll define these settings in code, providing greater control and automation. Choose the method that best suits your workflow.
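As a minimal sketch of the code-driven route, the snippet below assembles a request for the EKS `CreateCluster` API using the parameter names that boto3's `create_cluster` accepts. The cluster name, Kubernetes version, role ARN, and subnet IDs are placeholders rather than values from this guide, and the function that would actually call AWS is defined but never invoked.

```python
def build_cluster_request(name, version, role_arn, subnet_ids, private_only=False):
    """Assemble keyword arguments for the EKS CreateCluster API."""
    if len(subnet_ids) < 2:
        # EKS expects subnets in at least two Availability Zones;
        # this sketch only checks the count, not the zones themselves.
        raise ValueError("provide at least two subnets in different AZs")
    return {
        "name": name,
        "version": version,
        "roleArn": role_arn,
        "resourcesVpcConfig": {
            "subnetIds": list(subnet_ids),
            "endpointPublicAccess": not private_only,
            "endpointPrivateAccess": True,
        },
    }


def create_cluster(request, region):
    """Send the request with boto3 (needs AWS credentials; not called here)."""
    import boto3  # imported lazily so the sketch runs without boto3 installed

    return boto3.client("eks", region_name=region).create_cluster(**request)


# Placeholder values for illustration only.
request = build_cluster_request(
    name="demo-cluster",
    version="1.30",
    role_arn="arn:aws:iam::111122223333:role/demo-eks-cluster-role",
    subnet_ids=["subnet-aaaa1111", "subnet-bbbb2222"],
)
print(request["resourcesVpcConfig"])
```

Keeping the request as plain data makes it easy to review or version-control before anything is created; Terraform and CloudFormation express the same settings declaratively.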

Configure Node Groups and Networking

After creating your EKS cluster, you'll need to configure node groups. A node group is a set of worker machines that run your applications. EKS offers flexibility in how you provide this compute: you can use Amazon EC2 instances for more control or AWS Fargate for a serverless approach. Select the option that aligns with your workload requirements.

Networking is crucial for a functioning EKS cluster. Configure your VPC, subnets, and security groups to control traffic flow and secure your cluster. Ensure your EKS cluster can communicate with other AWS services and the internet as needed, while adhering to your security policies.
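To make the node group settings concrete, here is a hedged sketch that builds the arguments for the EKS `CreateNodegroup` API (boto3's `create_nodegroup`). Every name, ARN, subnet ID, and instance type is a placeholder chosen for illustration; the dictionary could be passed as `boto3.client("eks").create_nodegroup(**nodegroup)` once real values are substituted.

```python
def build_nodegroup_request(cluster, name, node_role_arn, subnet_ids,
                            instance_types, min_size, desired, max_size):
    """Assemble keyword arguments for an EC2-backed managed node group."""
    if not (min_size <= desired <= max_size):
        raise ValueError("need min_size <= desired <= max_size")
    return {
        "clusterName": cluster,
        "nodegroupName": name,
        "nodeRole": node_role_arn,
        "subnets": list(subnet_ids),
        "instanceTypes": list(instance_types),
        "capacityType": "ON_DEMAND",
        "scalingConfig": {
            "minSize": min_size,
            "desiredSize": desired,
            "maxSize": max_size,
        },
    }


# Placeholder values for illustration only.
nodegroup = build_nodegroup_request(
    cluster="demo-cluster",
    name="general-purpose",
    node_role_arn="arn:aws:iam::111122223333:role/demo-eks-node-role",
    subnet_ids=["subnet-aaaa1111", "subnet-bbbb2222"],
    instance_types=["m5.large"],
    min_size=1,
    desired=2,
    max_size=4,
)
print(nodegroup["scalingConfig"])
```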

Essential AWS Tools for Kubernetes

Managing a Kubernetes cluster, especially on AWS, involves a complex interplay of services. This section covers three key services: Amazon ECR for container images, AWS Fargate for serverless Kubernetes, and Amazon CloudWatch for monitoring and logging.

Manage Container Images with Amazon ECR

Amazon ECR (Elastic Container Registry) provides a fully managed container registry for storing, managing, and deploying your Docker container images. Its tight integration with Amazon EKS streamlines pulling images for your applications, simplifying deployments and updates. ECR handles security and scalability so you can focus on development rather than registry management.
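For illustration, private ECR image URIs follow the pattern `<account-id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`. The sketch below builds such a URI and places it in a Kubernetes container spec; the account ID, region, repository, and tag are made-up placeholders.

```python
def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Build the URI of an image stored in a private ECR repository."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def container_spec(name, image):
    """Minimal container entry for a pod template."""
    return {"name": name, "image": image}


# Placeholder values for illustration only.
uri = ecr_image_uri("111122223333", "us-east-1", "demo/web", "1.4.2")
print(container_spec("web", uri))
```

Pinning an explicit tag rather than relying on `latest` keeps deployments reproducible.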

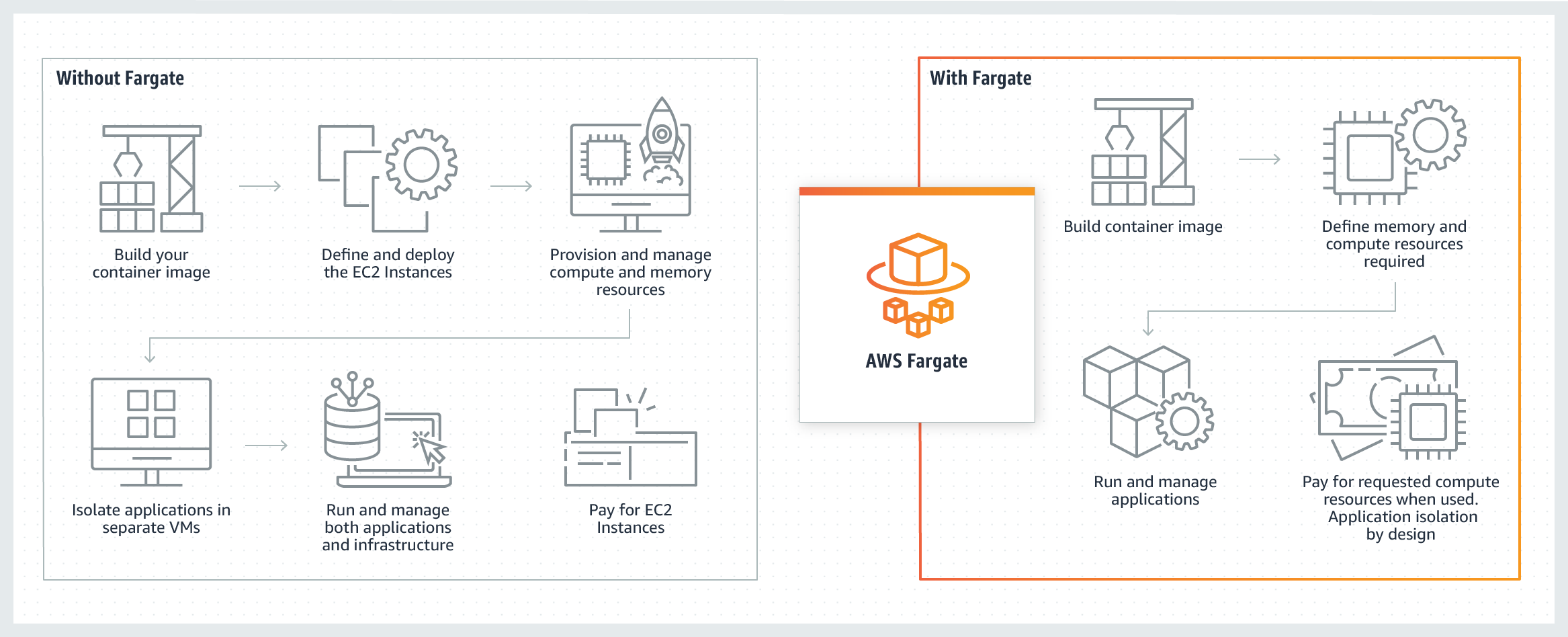

Serverless Kubernetes with AWS Fargate

AWS Fargate offers a serverless compute engine designed for containers and works seamlessly with Amazon EKS. This eliminates the need to manage the underlying EC2 instances that host your Kubernetes pods. With Fargate, you focus solely on building and deploying your applications, leaving the infrastructure management to AWS. This simplifies operations and allows for efficient scaling based on your application's demands.
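Pods are matched to Fargate through a Fargate profile, which selects them by namespace and, optionally, labels. The following sketch assembles the arguments for the EKS `CreateFargateProfile` API (boto3's `create_fargate_profile`); the profile name, role ARN, subnets, and namespace are hypothetical.

```python
def build_fargate_profile(cluster, profile, pod_execution_role_arn,
                          subnet_ids, namespace, labels=None):
    """Assemble keyword arguments for an EKS Fargate profile."""
    selector = {"namespace": namespace}
    if labels:
        # Only pods carrying all of these labels are scheduled onto Fargate.
        selector["labels"] = dict(labels)
    return {
        "clusterName": cluster,
        "fargateProfileName": profile,
        "podExecutionRoleArn": pod_execution_role_arn,
        "subnets": list(subnet_ids),  # Fargate profiles use private subnets
        "selectors": [selector],
    }


# Placeholder values for illustration only.
fargate_profile = build_fargate_profile(
    cluster="demo-cluster",
    profile="batch-jobs",
    pod_execution_role_arn="arn:aws:iam::111122223333:role/demo-fargate-pod-role",
    subnet_ids=["subnet-cccc3333", "subnet-dddd4444"],
    namespace="batch",
    labels={"compute": "fargate"},
)
print(fargate_profile["selectors"])
```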

Monitor and Log with CloudWatch

Amazon CloudWatch provides comprehensive monitoring and observability for your Amazon EKS clusters. You can collect and track key metrics, gather log files, and configure alarms to address potential issues proactively. CloudWatch Container Insights offers deeper visibility into your containerized applications, enabling performance optimization and troubleshooting. This centralized monitoring solution simplifies identifying and resolving problems, ensuring the health and stability of your EKS environment. Check out this comprehensive guide by Plural on Kubernetes monitoring best practices.
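As a rough sketch of proactive alerting, the snippet below builds the arguments for CloudWatch's `PutMetricAlarm` API (boto3's `put_metric_alarm`) against a Container Insights node CPU metric. It assumes Container Insights is already enabled on the cluster; the alarm name, threshold, and evaluation window are illustrative choices, not recommendations from this guide.

```python
def build_cpu_alarm(cluster, threshold_percent=80.0, period_seconds=300, periods=3):
    """Alarm when average node CPU stays above the threshold."""
    return {
        "AlarmName": f"{cluster}-node-cpu-high",
        "Namespace": "ContainerInsights",
        "MetricName": "node_cpu_utilization",
        "Dimensions": [{"Name": "ClusterName", "Value": cluster}],
        "Statistic": "Average",
        "Period": period_seconds,
        "EvaluationPeriods": periods,
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
    }


# Placeholder cluster name; pass the result to
# boto3.client("cloudwatch").put_metric_alarm(**alarm) to create the alarm.
alarm = build_cpu_alarm("demo-cluster")
print(alarm["AlarmName"], alarm["Threshold"])
```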

Best Practices for Amazon EKS

Once your EKS cluster is up and running, focus on optimizing its performance, security, and resilience. Implementing EKS best practices from the start will save you time and resources.

Optimize Resources and Manage Costs

Cost optimization is crucial for any cloud-based infrastructure. Start by selecting appropriately sized EC2 instance types for your nodes. Monitor key metrics like CPU and memory utilization with CloudWatch Container Insights, and track spending with a Kubernetes cost-monitoring tool like Kubecost. Right-sizing your nodes and using features like the Cluster Autoscaler can significantly reduce costs. Consider leveraging Spot Instances for non-critical workloads to optimize spending further.

Secure Your EKS Deployment

Security should be a top priority for any EKS deployment. While EKS simplifies Kubernetes management, understanding the security configurations and best practices is essential. Implement role-based access control (RBAC) to manage permissions within your cluster. Regularly update your Kubernetes version and worker nodes to patch security vulnerabilities. Consider using network policies to control traffic flow between Pods and Namespaces. Monitoring tools like Prometheus and Grafana can help you spot anomalous behavior that may indicate a security issue. Enforcing the Pod Security Standards (through Pod Security Admission, which replaced pod security policies when they were removed in Kubernetes 1.25) and scanning container images can further enhance your cluster's security posture.
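Two of these controls can be sketched as Kubernetes manifests: a namespace that enforces the `restricted` Pod Security Standard through Pod Security Admission labels (the built-in successor to pod security policies), and a default-deny ingress NetworkPolicy. The namespace name is hypothetical, and network policies only take effect when the cluster's networking add-on enforces them.

```python
import json


def restricted_namespace(name):
    """Namespace that rejects pods violating the 'restricted' standard."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {"pod-security.kubernetes.io/enforce": "restricted"},
        },
    }


def default_deny_ingress(namespace):
    """NetworkPolicy that selects every pod and allows no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


# Hypothetical namespace name for illustration only.
manifests = [restricted_namespace("payments"), default_deny_ingress("payments")]
print(json.dumps(manifests, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, each manifest can be written to a file and applied as-is; further policies would then open only the traffic each workload needs.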

Ensure High Availability and Disaster Recovery

Building a resilient EKS infrastructure requires planning for high availability and disaster recovery. Design your applications with redundancy in mind, distributing pods across multiple availability zones. Test your disaster recovery plan to ensure you can restore your cluster and applications in case of an outage. Addressing these potential problem areas proactively will lead to a more robust and reliable system. Consider using managed services like AWS Backup for Kubernetes to simplify backup and recovery operations.
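One way to distribute pods across Availability Zones is a topology spread constraint on the standard `topology.kubernetes.io/zone` node label. The sketch below generates such a Deployment manifest; the application name, image, and replica count are placeholders.

```python
import json


def zone_spread_deployment(name, image, replicas=3):
    """Deployment whose replicas are spread evenly across zones."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "topologySpreadConstraints": [{
                        # Zones may differ by at most one matching pod.
                        "maxSkew": 1,
                        "topologyKey": "topology.kubernetes.io/zone",
                        "whenUnsatisfiable": "DoNotSchedule",
                        "labelSelector": {"matchLabels": labels},
                    }],
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


# Hypothetical application name and image for illustration only.
deployment = zone_spread_deployment("web", "example/web:1.0")
print(json.dumps(deployment["spec"]["template"]["spec"], indent=2))
```

`DoNotSchedule` makes the spread a hard requirement; `ScheduleAnyway` would turn it into a preference when capacity in one zone is short.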

Advanced Configurations of Amazon EKS

This section covers more advanced EKS configurations, including load balancing, storage options, and implementing CI/CD pipelines.

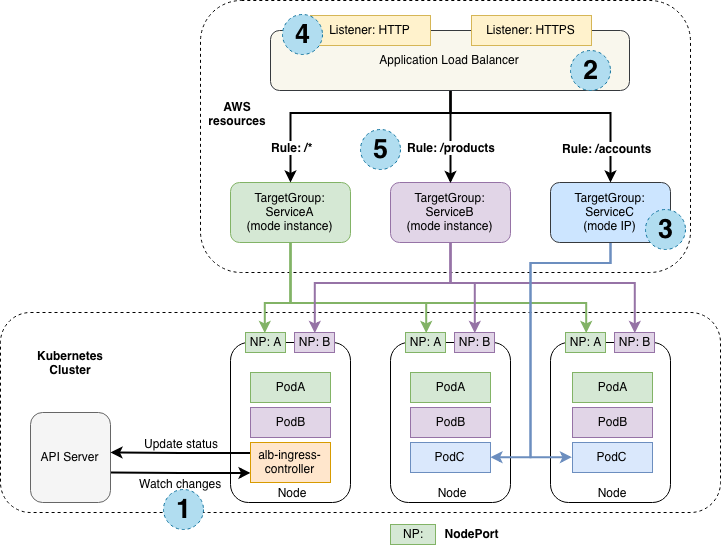

Load Balancing with AWS ALB Ingress Controller

The AWS ALB Ingress Controller, since renamed the AWS Load Balancer Controller, connects your Kubernetes services to an AWS Application Load Balancer (ALB). It routes traffic based on rules you define in your Kubernetes Ingress resources. This simplifies directing traffic to your applications and distributes it across your services for better performance and reliability.

The following diagram details the AWS components that the aws-alb-ingress-controller creates whenever you define an Ingress resource. The Ingress resource routes ingress traffic from the ALB to the Kubernetes cluster.
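An Ingress resource of the kind described above might look like the following sketch. It uses the `alb` ingress class and two commonly used `alb.ingress.kubernetes.io` annotations and assumes the controller is already installed in the cluster; the Ingress name, Service name, and port are hypothetical.

```python
import json


def alb_ingress(name, service_name, service_port, internet_facing=True):
    """Ingress that asks the controller to provision an ALB for a Service."""
    scheme = "internet-facing" if internet_facing else "internal"
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                "alb.ingress.kubernetes.io/scheme": scheme,
                # 'ip' targets pod IPs directly instead of node ports.
                "alb.ingress.kubernetes.io/target-type": "ip",
            },
        },
        "spec": {
            "ingressClassName": "alb",
            "rules": [{
                "http": {
                    "paths": [{
                        "path": "/",
                        "pathType": "Prefix",
                        "backend": {
                            "service": {
                                "name": service_name,
                                "port": {"number": service_port},
                            }
                        },
                    }]
                }
            }],
        },
    }


# Hypothetical Service name and port for illustration only.
ingress = alb_ingress("web", "web-svc", 80)
print(json.dumps(ingress, indent=2))
```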

Kubernetes Storage on AWS

Kubernetes provides Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) for managing storage. On AWS, you can leverage Amazon Elastic Block Store (EBS) for persistent block storage, which is dynamically provisioned using Storage Classes.

For applications needing shared file access across multiple pods, Amazon Elastic File System (EFS) provides a scalable and elastic solution. EFS simplifies managing shared data and ensures consistent access for all application instances.
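Dynamic EBS provisioning can be sketched as a StorageClass plus a claim that references it. This example assumes the Amazon EBS CSI driver (provisioner `ebs.csi.aws.com`) is installed; the class name, claim name, volume type, and size are illustrative.

```python
import json


def ebs_storage_class(name, volume_type="gp3"):
    """StorageClass that provisions EBS volumes on demand."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "ebs.csi.aws.com",
        "parameters": {"type": volume_type},
        # Delay volume creation until a pod is scheduled, so the volume
        # lands in the same Availability Zone as the pod's node.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


def volume_claim(name, storage_class, size):
    """PersistentVolumeClaim requesting a single-node read-write volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical names and size for illustration only.
storage_class = ebs_storage_class("ebs-gp3")
claim = volume_claim("db-data", "ebs-gp3", "20Gi")
print(json.dumps([storage_class, claim], indent=2))
```

An EBS volume attaches to one node at a time, which is why the claim uses `ReadWriteOnce`; shared access across pods on different nodes is the case EFS addresses.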

Implement CI/CD for Kubernetes

A robust CI/CD pipeline is crucial for automating and streamlining deployments for your Kubernetes applications on AWS. Services like AWS CodePipeline, AWS CodeBuild, and AWS CodeDeploy automate your application lifecycle's build, test, and deployment phases. This accelerates development and improves the reliability of your deployments.

Scale Your EKS Environment

Scaling your EKS environment effectively is crucial for handling fluctuating workloads, optimizing costs, and ensuring application stability.

Auto-Scaling for Dynamic Workloads

Amazon EKS integrates seamlessly with the Kubernetes Cluster Autoscaler and Karpenter, enabling automatic adjustments to your cluster size based on workload demands. This dynamic scaling ensures you have the right resources available, scaling up during peak periods and down during lulls.
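With the Cluster Autoscaler, node groups are typically found through Auto Scaling group tags. The sketch below generates the two conventional auto-discovery tags and the matching `--node-group-auto-discovery` flag; the cluster name is a placeholder, and the tag values are conventional since the autoscaler matches on tag keys.

```python
def autodiscovery_tags(cluster_name):
    """Tags that mark an Auto Scaling group as managed by the autoscaler."""
    return {
        "k8s.io/cluster-autoscaler/enabled": "true",
        f"k8s.io/cluster-autoscaler/{cluster_name}": "owned",
    }


def autodiscovery_flag(cluster_name):
    """Command-line flag telling the autoscaler which tag keys to look for."""
    keys = ",".join(autodiscovery_tags(cluster_name))
    return f"--node-group-auto-discovery=asg:tag={keys}"


# Placeholder cluster name for illustration only.
print(autodiscovery_tags("demo-cluster"))
print(autodiscovery_flag("demo-cluster"))
```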

Save Costs with Spot Instances

Leveraging Amazon EC2 Spot Instances within your EKS cluster offers significant cost savings. Spot Instances let you use spare EC2 capacity at a considerably lower price. While Spot Instances can be interrupted, they are well-suited for fault-tolerant and flexible workloads. By placing non-critical workloads, or those with flexible scaling requirements, on Spot Instances, you can significantly reduce infrastructure costs without affecting the performance of your critical services.
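One way to steer a fault-tolerant workload onto Spot capacity is a node selector on the `eks.amazonaws.com/capacityType` label that EKS managed node groups apply to their nodes. This sketch assumes a managed node group with Spot capacity exists; the Deployment shown is a stripped-down, hypothetical example.

```python
import copy

SPOT_LABEL = "eks.amazonaws.com/capacityType"


def pin_to_spot(deployment):
    """Return a copy of a Deployment whose pods schedule only on Spot nodes."""
    pinned = copy.deepcopy(deployment)
    pod_spec = pinned["spec"]["template"]["spec"]
    pod_spec.setdefault("nodeSelector", {})[SPOT_LABEL] = "SPOT"
    return pinned


# Hypothetical, minimal Deployment for illustration only.
worker = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "report-worker"},
    "spec": {
        "replicas": 4,
        "selector": {"matchLabels": {"app": "report-worker"}},
        "template": {
            "metadata": {"labels": {"app": "report-worker"}},
            "spec": {"containers": [{"name": "worker", "image": "example/worker:1.0"}]},
        },
    },
}

spot_worker = pin_to_spot(worker)
print(spot_worker["spec"]["template"]["spec"]["nodeSelector"])
```

Running several replicas, as here, helps the workload ride out the interruption of any single Spot node.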

Implement Resource Quotas and Limits

Resource quotas and limits are fundamental Kubernetes features that provide granular control over resource allocation across namespaces and workloads. A resource quota caps the total resources a namespace can consume, so no single team or application can monopolize the cluster and starve others. Resource limits, on the other hand, define the maximum resources a container can consume, preventing runaway processes and ensuring predictable resource usage. For example, you can set a resource quota to limit the total CPU and memory that a namespace can consume, while limits keep the individual containers in each pod within their allocation, preventing resource contention and maintaining overall cluster stability.
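The namespace quota and per-container limits described above can be sketched as follows. The namespace, image, and all CPU and memory figures are arbitrary examples, not sizing advice.

```python
import json


def resource_quota(namespace, cpu_requests, memory_requests, cpu_limits, memory_limits):
    """ResourceQuota capping the total CPU and memory of one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu_requests,
                "requests.memory": memory_requests,
                "limits.cpu": cpu_limits,
                "limits.memory": memory_limits,
            }
        },
    }


def container_with_limits(name, image, cpu_request, memory_request, cpu_limit, memory_limit):
    """Container entry with explicit requests and limits."""
    return {
        "name": name,
        "image": image,
        "resources": {
            "requests": {"cpu": cpu_request, "memory": memory_request},
            "limits": {"cpu": cpu_limit, "memory": memory_limit},
        },
    }


# Arbitrary example values for illustration only.
quota = resource_quota("team-a", "4", "8Gi", "8", "16Gi")
container = container_with_limits("api", "example/api:1.0", "250m", "256Mi", "500m", "512Mi")
print(json.dumps([quota, container], indent=2))
```

Once a quota covers CPU or memory in a namespace, pods there must declare the corresponding requests or limits to be admitted, so the two settings are normally introduced together.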

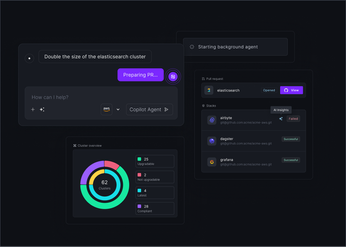

EKS Enhancement with Plural

Amazon EKS does not include a native, full-featured dashboard for comprehensive cluster monitoring and troubleshooting. While AWS provides essential visibility into cluster health, deeper insights into workloads, networking, and logs often require navigating multiple services like CloudWatch, IAM, and EC2. The open-source Kubernetes Dashboard is an option but requires manual setup, lacks built-in multi-cluster support, and does not natively integrate with AWS security controls.

How Plural Enhances EKS

Plural simplifies EKS management by providing a unified dashboard with real-time visibility into workloads, networking, and logs. Unlike solutions that require manual setup, Plural’s dashboard is pre-configured. It includes enterprise-ready features such as Single Sign-On (SSO) integration and comprehensive audit logging that tracks all API requests. Additionally, its reverse tunnel-based secure access allows private EKS clusters behind firewalls to be managed without exposing endpoints, enhancing security and reducing reliance on multiple AWS services.

Unlock the Full Potential of Kubernetes!

Simplify upgrades, manage compliance, enhance visibility, and streamline troubleshooting with Plural. Don’t just take our word for it—book a demo today or watch our demo video!

Frequently Asked Questions

Why should I use a managed Kubernetes service like Amazon EKS?

Managing Kubernetes yourself can be complex and time-consuming. EKS takes care of the operational overhead, letting you focus on building and deploying applications. AWS handles tasks like patching, upgrading, and scaling the control plane, freeing up your team. This particularly benefits organizations without dedicated Kubernetes expertise or those looking to streamline operations.

How does Amazon EKS compare to other Kubernetes services or self-managing Kubernetes?

EKS provides a managed Kubernetes experience on AWS, offering benefits like integration with other AWS services and simplified cluster management. Compared to self-managing Kubernetes, EKS reduces operational complexity. Other managed Kubernetes services exist, each with its strengths and weaknesses. The best choice depends on your specific needs and infrastructure preferences. Factors to consider include cost, integration with existing systems, and the level of control you require.

What are the key cost considerations when running EKS?

The primary cost drivers for EKS include the EC2 instances or Fargate capacity used for your worker nodes, data transfer costs, and the EKS control plane fee. Optimizing costs involves right-sizing your nodes, leveraging Spot Instances where appropriate, and efficiently managing storage and data transfer. Regularly monitoring your resource utilization and adjusting your cluster size based on demand can significantly impact your overall spending.

How do I ensure the security of my EKS clusters?

EKS integrates with AWS security services, providing a robust foundation for securing your Kubernetes deployments. Implementing role-based access control (RBAC), network policies, and security groups is crucial. Regularly updating your Kubernetes version and worker nodes helps mitigate vulnerabilities. Using image scanning tools and enforcing the Pod Security Standards (the replacement for pod security policies, which were removed in Kubernetes 1.25) further strengthens your security posture. Consider integrating with security information and event management systems for comprehensive monitoring and threat detection.

What are the best practices for scaling and managing EKS in production?

Scaling EKS effectively involves using the Cluster Autoscaler to adjust the number of worker nodes dynamically based on demand. Leveraging Spot Instances for cost optimization and implementing resource quotas and limits for efficient resource management are also important. Regularly monitor your cluster's performance and resource utilization to identify and address potential bottlenecks. Establish a robust CI/CD pipeline to automate deployments and rollbacks, ensuring smooth updates and minimizing downtime. Implementing these practices helps maintain stability, optimize performance, and control costs in your production EKS environment.
