Essential Kubernetes Terminology for DevOps

Simplify your Kubernetes journey with this quick guide to essential Kubernetes terminology, helping you understand key concepts and terms with ease.

"Kubernetes is easy," said no one ever.

Kubernetes can feel intimidating. It's a complex topic with a lot of content to sift through. This guide acts as your cheat sheet for Kubernetes terminology, offering clear definitions and practical examples of core concepts. We'll demystify the jargon, from clusters and containers to ConfigMaps and Secrets. Whether you're a beginner or just need a refresher, this glossary will help you navigate the Kubernetes landscape with confidence.

On top of that, it is hard to know which terms and concepts you need to understand when getting started, and you will likely run into many that are unfamiliar.

Luckily, we've got you covered with our quick and dirty guide to Kubernetes terminology.

Kubernetes Explained

Kubernetes is a popular open-source platform for automating the deployment, scaling, and management of containerized applications. Originally developed at Google and open-sourced in 2014, it's now maintained by the Cloud Native Computing Foundation (CNCF) and is used by companies around the world.

Key Takeaways

- Kubernetes automates container orchestration: The control plane and worker node architecture simplifies scaling and management for applications of any size.

- Understanding core concepts is crucial: Terms like cluster, node, pod, and deployment are essential for navigating the Kubernetes ecosystem.

- Security is paramount: Implement RBAC, understand Pod Security Admission, and follow the 4C's of cloud-native security to protect your infrastructure.

What is Kubernetes? A Simple Analogy

Kubernetes can be likened to an orchestra conductor. Instead of coordinating musicians, Kubernetes manages numerous small, independent programs called “containers.” These containers encapsulate individual parts of a larger application—like microservices—and Kubernetes ensures they all work together seamlessly, even across multiple computers. Think of it as coordinating a complex performance, ensuring each instrument plays its part at the right time.

Think of a container as a box holding everything a program needs to run, including code, runtime, system tools, system libraries, and settings. Containers are designed to be stateless (they don’t retain past actions) and immutable (once created, they can't be changed; updates require a new container). This design makes containers easy to manage and replicate. Docker is a popular tool for building and running containers.

Within the Kubernetes ecosystem, a cluster is a group of control plane and worker nodes that together form the entire Kubernetes system. Worker nodes are the physical or virtual machines where your containers run. Pods are the smallest deployable units in Kubernetes: each pod wraps one or more containers that work together and share resources such as networking and storage. This structure allows complex applications to be broken down into smaller, manageable units.

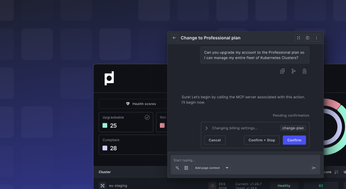

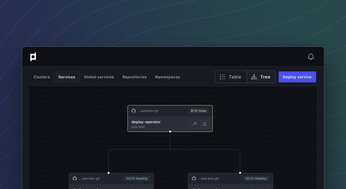

This analogy simplifies how Kubernetes orchestrates the deployment and management of containerized applications. If you're looking for a robust platform to manage your Kubernetes deployments, check out Plural. It’s designed to simplify Kubernetes management at scale.

Essential Kubernetes Terminology

Here is a list of the most common Kubernetes terms you should familiarize yourself with when getting started.

Cluster: A set of worker machines, called nodes, that run containerized applications orchestrated by a control plane (historically called the Kubernetes master).

Container: A lightweight and portable executable image that contains software and all of its dependencies.

Controller: Control loops that watch the state of your cluster and then make or request changes when needed.

Custom Resource: An extension of the Kubernetes API, typically defined by a third-party developer or by your own team. It defines an API spec for which the Kubernetes API supports all the basic REST operations, while a custom controller watches for changes to the resource and reconciles the cluster's state in response.

Deployment: A Kubernetes resource that defines how many replicas of a pod should be running at any given time and how they should be updated, and that labels those pods so they can be referenced easily.

Node: A physical or virtual machine that runs containers as part of a Kubernetes cluster. Each node runs the Kubernetes node software (such as the kubelet and a container runtime), is managed by the control plane, and runs the pods that the scheduler assigns to it.

Kubeadm: A tool that helps you set up a secure Kubernetes cluster quickly and easily, especially on-premises.

Kubelet: A daemon that runs on each node and takes instructions from the Kubernetes API to perform operations on that node, such as starting new containers and, via the container runtime and network plugin, giving pods their IP addresses.

Namespace: A way to organize your cluster and set up logical divisions between domains or functions. Once namespaces are set up, you can define policies and access rules for each one. They simplify management and limit how far a mistake or failure in one area can spread.

Pod: A group of one or more containers that Kubernetes treats as a single entity. Containers in a pod share an IP address and can share storage volumes, while each container keeps its own root filesystem. Pods are created using the Pod resource, whose spec includes a list of container specifications.

StatefulSet: Manages the deployment and scaling of a set of pods and provides guarantees about their ordering and uniqueness. It can also automate the creation of a persistent volume for each pod.

Core Concepts

Control Plane

The control plane is the brains of your Kubernetes cluster. It's responsible for making decisions, managing the cluster’s overall state, and ensuring everything runs smoothly. Think of it as the command center, constantly monitoring and adjusting things to keep your applications running. Several key components make up the control plane, each with a specific role.

API Server

The API server is the front door to your Kubernetes cluster. All requests to create, update, or delete resources go through the API server. It acts as a central communication point, ensuring all cluster components are in sync. It authenticates and authorizes requests, validates them against the cluster's current state, and persists changes to etcd.

kube-proxy

kube-proxy runs on each node in your cluster as a network proxy, so strictly speaking it is a node component rather than part of the control plane. Its primary job is implementing Services: it maintains network rules on the node and load balances traffic across the pods that make up a service, whether that traffic comes from inside or outside the cluster.

etcd

etcd is a distributed key-value store that's critical for Kubernetes. It stores all configuration data and the current state of your cluster. This includes the desired state of deployments, pod status, and other important information. The API server interacts with etcd to persist changes and retrieve cluster information.

Scheduler

The scheduler has one crucial task: deciding where to run your pods. When you create a pod, the scheduler evaluates factors like resource availability on each node, any constraints you've specified, and other criteria to find the best fit. It then assigns the pod to the most suitable node.
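The filter-then-score idea described above can be sketched in a few lines of Python. This is a toy model, not the real scheduler's algorithm: the `Node` and `PodRequest` classes, the `pick_node` function, and the "most CPU left over" scoring rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: List[Node]) -> Optional[Node]:
    """Filter out nodes that cannot fit the pod, then score the rest."""
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # no node fits, so the pod would stay unscheduled
    # Hypothetical scoring rule: prefer the node with the most CPU left over.
    return max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=4000, free_memory_mib=256),
]
chosen = pick_node(PodRequest("web", cpu_millicores=1000, memory_mib=512), nodes)
print(chosen.name if chosen else "unschedulable")
```

Here `node-a` lacks CPU and `node-c` lacks memory, so only `node-b` survives the filter step. The real scheduler weighs many more factors, such as affinity rules and taints.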

Controller Manager

The controller manager keeps the actual state of your cluster aligned with the desired state. It runs control loops, called controllers, that constantly monitor the cluster and take corrective action when necessary. For example, if a pod fails, the controller manager creates a new one to maintain the desired replica count.
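A control loop of this kind can be pictured as a function that compares desired and actual state and then corrects the difference. The sketch below is a simplified model, assuming a hypothetical `reconcile` function and in-memory pod names rather than real API calls.

```python
from typing import List, Tuple


def reconcile(desired_replicas: int, running_pods: List[str], next_id: int) -> Tuple[List[str], int]:
    """One pass of a control loop: compare desired and actual state, then fix the gap."""
    pods = list(running_pods)
    while len(pods) < desired_replicas:
        pods.append(f"web-{next_id}")  # stand-in for asking the API server to create a pod
        next_id += 1
    while len(pods) > desired_replicas:
        pods.pop()  # stand-in for deleting a surplus pod
    return pods, next_id


pods, next_id = reconcile(3, [], next_id=0)   # initial rollout creates three pods
pods.remove("web-1")                          # simulate a pod failure
pods, next_id = reconcile(3, pods, next_id)   # the next pass replaces the lost pod
print(pods)
```

The point of the design is that the loop never needs to know why the state drifted. It only looks at the difference and acts on it, which is what makes the pattern self-healing.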

Services

Services provide stable access to a group of pods, even if those pods are dynamically created or destroyed. They act as an abstraction layer, allowing you to access your application without knowing the specific IP addresses of individual pods. Services also offer load balancing and other useful features.

Ingress

Ingress manages external access to services within your cluster, typically via HTTP or HTTPS. It acts as a reverse proxy and load balancer, routing traffic from outside the cluster to the appropriate services based on rules you define. This simplifies external access to your applications and centralizes traffic routing management.
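Rule-based routing of this kind can be illustrated with a small Python sketch. It assumes made-up hostnames and service names, and it models only a simplified form of prefix matching where the longest matching path wins; real Ingress controllers support more options.

```python
from typing import List, Optional, Tuple

# Each rule is (host, path prefix, backend service name). All values are hypothetical.
Rule = Tuple[str, str, str]


def path_matches(prefix: str, path: str) -> bool:
    """Match whole path segments, so "/api" matches "/api/orders" but not "/apiary"."""
    return path == prefix or path.startswith(prefix.rstrip("/") + "/")


def route(host: str, path: str, rules: List[Rule]) -> Optional[str]:
    candidates = [r for r in rules if r[0] == host and path_matches(r[1], path)]
    if not candidates:
        return None  # no rule matched this request
    return max(candidates, key=lambda r: len(r[1]))[2]  # longest prefix wins


rules = [
    ("shop.example.com", "/", "frontend"),
    ("shop.example.com", "/api", "backend"),
]
print(route("shop.example.com", "/api/orders", rules))
print(route("shop.example.com", "/apiary", rules))
print(route("other.example.com", "/", rules))
```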

Volumes

Volumes provide storage that outlives individual containers. Unlike a container's own filesystem, which is lost when the container restarts, data in a volume survives container restarts, and data in a persistent volume survives even when the pod is terminated and rescheduled. This is essential for stateful applications that need to preserve data across restarts.

ConfigMaps and Secrets

ConfigMaps and Secrets inject configuration data into your pods. ConfigMaps are for non-sensitive data, while Secrets are designed for sensitive information like passwords and API keys. This separates configuration from your application code, simplifying management and updates.
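One detail worth knowing is that values in a Secret manifest are base64-encoded, which is an encoding and not encryption. The short Python sketch below, using a made-up password, shows that anyone who can read the encoded value can recover the original.

```python
import base64

password = "s3cr3t-pa55word"  # hypothetical value, for illustration only

# Encode the way a value would appear in a Secret manifest's data field.
encoded = base64.b64encode(password.encode("utf-8")).decode("ascii")

# Decoding needs no key, which is why base64 alone offers no confidentiality.
decoded = base64.b64decode(encoded).decode("utf-8")

print(encoded)
print(decoded == password)
```

In practice this means access to Secrets should be restricted with RBAC, and encryption at rest is worth considering for the cluster's data store.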

Labels and Selectors

Labels are key-value pairs attached to Kubernetes resources. They offer a flexible way to organize and group objects. Selectors let you query resources based on their labels, making it easy to target specific object groups for operations like deployments or scaling.
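Equality-based selection can be sketched as a simple dictionary comparison. The pod names and labels below are invented, and the `matches` helper is a hypothetical stand-in for what the API server does when it evaluates a selector.

```python
from typing import Dict


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Every key/value pair in the selector must be present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


pods = {
    "web-1": {"app": "web", "env": "prod"},
    "web-2": {"app": "web", "env": "staging"},
    "db-1": {"app": "db", "env": "prod"},
}

selected = sorted(
    name for name, labels in pods.items()
    if matches({"app": "web", "env": "prod"}, labels)
)
print(selected)
```

Because extra labels on an object do not prevent a match, you can add labels freely without breaking existing selectors.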

Namespaces

Namespaces divide your cluster into logical partitions. This is useful for separating different environments (development, testing, production) or isolating resources used by different teams or projects. Namespaces help organize your cluster and prevent naming conflicts.

Kubernetes Workloads

Pods

Pods are the smallest deployable units in Kubernetes. They encapsulate one or more containers, sharing the same network namespace and storage. A pod is a single logical unit containing your application and its dependencies. Pods are typically ephemeral, meaning they are created and destroyed as needed.

Deployments

Deployments manage the rollout and scaling of your pods. They ensure the desired number of replicas are running and provide rolling updates so you can change your application without downtime. Deployments also keep a revision history, so you can roll back if an update fails, simplifying application lifecycle management.

StatefulSets

StatefulSets are designed for applications requiring persistent state and stable network identities. They guarantee the ordering and uniqueness of pods, making them suitable for databases and other stateful applications. StatefulSets also manage persistent storage for each pod. Check out our recent blog post on why StatefulSets can be tricky.
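The ordering and identity guarantees can be illustrated with a small Python sketch. StatefulSet pods are named after the set plus an ordinal index and, under the default ordered policy, are removed in reverse order when scaling down. The function names and the `db` set name are assumptions for illustration.

```python
from typing import List


def stateful_pod_names(set_name: str, replicas: int) -> List[str]:
    """Pods get stable names of the form <set name>-<ordinal>, created from 0 upward."""
    return [f"{set_name}-{ordinal}" for ordinal in range(replicas)]


def scale_down_order(set_name: str, current: int, target: int) -> List[str]:
    """When scaling down, the highest ordinals are removed first."""
    return [f"{set_name}-{ordinal}" for ordinal in range(current - 1, target - 1, -1)]


print(stateful_pod_names("db", 3))
print(scale_down_order("db", 3, 1))
```

Stable names like these are what let a database replica keep the same identity and storage across restarts.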

DaemonSets

DaemonSets ensure a copy of a pod runs on every node (or a subset of nodes) in your cluster. They are useful for running system services or agents that need to be present on every machine. DaemonSets automatically handle scheduling and scaling to ensure the pod runs on all target nodes.

Jobs and CronJobs

Jobs manage finite tasks that run to completion. They create one or more pods to perform the task and ensure its successful completion. CronJobs are similar to Jobs but run on a schedule, making them suitable for recurring tasks like backups or report generation.

Other Key Terms

Container Runtime

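The container runtime is the software responsible for running containers on your nodes. Popular container runtimes include containerd and CRI-O. Docker Engine can also be used through the cri-dockerd adapter, since the built-in Docker integration (dockershim) was removed in Kubernetes 1.24. Kubernetes interacts with the container runtime to start, stop, and manage containers.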

CNI (Container Network Interface)

CNI is a standard for configuring network interfaces in containers. It allows Kubernetes to use different networking plugins to provide connectivity for your pods. This flexibility lets you choose the networking solution that best fits your needs. For more in-depth information, you can explore CNI's GitHub repository.

CSI (Container Storage Interface)

CSI is a standard for exposing storage systems to containers. It allows Kubernetes to use different storage providers to provision persistent volumes for your pods. This simplifies integration with various storage solutions and provides greater flexibility for managing persistent storage. The CSI documentation offers a comprehensive overview.

Operators

Operators extend the functionality of Kubernetes. They manage the lifecycle of complex applications and automate tasks like deployment, scaling, and backups. Operators are often used for stateful applications and provide a more sophisticated way to manage application deployments. Learn more about Operators in the official Kubernetes documentation.

kubectl

kubectl is the command-line tool for interacting with your Kubernetes cluster. You use kubectl to create, update, delete, and manage resources, and to get information about your cluster's state. It's an essential tool for anyone working with Kubernetes. The kubectl overview is a great starting point.

Minikube

Minikube runs a single-node Kubernetes cluster locally on your machine. It's great for learning Kubernetes or testing deployments locally before deploying to production. Minikube provides a simple and convenient way to get started with Kubernetes. The Minikube documentation provides detailed information on getting started and using Minikube.

Why use Kubernetes in 2023?

Kubernetes is used by companies like Google and Amazon, and it's built to be highly scalable, so it can handle thousands of containers at once. It's also compatible with all major cloud platforms like AWS, GCP, and Azure.

Kubernetes allows you to run your application on multiple cloud providers or a combination of on-premises and cloud, allowing you to avoid vendor lock-in. In addition, it has a vibrant open-source community full of automation to do things like simplifying provisioning load balancers, managing secure networking with service meshes, automating DNS management, and much more.

Every engineering leader that I have spoken with agreed that Kubernetes is an extremely powerful tool, and developers at companies of all sizes can immediately reap the benefits of using it for their projects.

Benefits of Using Kubernetes

Kubernetes offers a robust set of features that simplify container orchestration and enhance application management. It's designed for scalability and can handle thousands of containers at once, making it suitable for applications of any size. Plus, it's compatible with all major cloud platforms like AWS, GCP, and Azure, giving you flexibility in your infrastructure choices. This cross-platform compatibility is a major advantage, helping you avoid vendor lock-in and maintain control over your deployments. For example, if you decide to migrate from one cloud provider to another, Kubernetes makes the transition smoother by abstracting away the underlying infrastructure differences.

Beyond multi-cloud support, Kubernetes empowers you to run your applications across a hybrid infrastructure, combining on-premises resources with cloud environments. This adaptability is crucial for businesses with diverse infrastructure needs. The platform also benefits from a vibrant open-source community, constantly developing tools and automation to streamline tasks like provisioning load balancers, managing secure networking with service meshes, and automating DNS management. This active community ensures Kubernetes remains at the forefront of container orchestration technology, providing access to a wealth of resources, support, and continuous improvements.

Kubernetes Statistics and Growth

Kubernetes has seen widespread adoption across the tech industry. From startups to established enterprises, engineering teams recognize the value Kubernetes brings to their projects. Its ability to simplify complex deployments and improve application reliability has made it a cornerstone of modern software development workflows. This widespread adoption isn't just a trend; it's a testament to the platform's maturity and its ability to solve real-world challenges in managing containerized applications. The increasing number of organizations relying on Kubernetes demonstrates its effectiveness in improving operational efficiency and accelerating software delivery.

For teams looking to streamline their Kubernetes operations, Plural offers a comprehensive platform for managing and scaling your deployments. Book a demo to see how Plural can simplify your Kubernetes workflows and help you manage your infrastructure more effectively.

How does Kubernetes work?

Kubernetes allows you to define your application's components in separate containers that can be deployed onto any node in your cluster.

The control plane's scheduler then places workloads across the cluster based on the CPU and memory each one requests. Scaling is not fully hands-off, but once you configure an autoscaler such as the Horizontal Pod Autoscaler, Kubernetes adds replicas when demand rises and removes them when it falls.

When you use Kubernetes, you can also roll out new features or upgrade existing ones with less concern for the underlying infrastructure. Resource requests and limits let you control how much CPU and memory each workload can use, which helps keep one application from starving the others.

Kubernetes has a lot of features that make it a great option for running containerized applications, including:

- Multiple apps on one cluster

- Automatically scaling up or down based on demand

- High availability and reliability
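To make "scaling up or down based on demand" concrete, the Horizontal Pod Autoscaler documentation describes its core calculation as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up. The Python sketch below applies that formula with minimum and maximum bounds. It is a simplification that omits details such as tolerances and stabilization windows, and the function name and sample numbers are assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """ceil(current * observed / target), clamped to the configured bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Four replicas at 90% average CPU against a 60% target: scale up.
print(desired_replicas(4, 90.0, 60.0, min_replicas=2, max_replicas=10))
# Four replicas at 30% against the same target: scale down.
print(desired_replicas(4, 30.0, 60.0, min_replicas=2, max_replicas=10))
```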

Kubernetes provides several key benefits to users:

- It simplifies application deployment, scaling, and management.

- It helps users avoid vendor lock-in by letting them run the same application on different platforms (e.g., Amazon Web Services, Google Cloud, or on-premises hardware).

- It allows users to easily scale applications up or down as needed, which allows them to take advantage of unused capacity while avoiding overspending on resources they don't need at any given time.

- It has built-in self-healing for your running containers and supports readiness and liveness checks. When containers go down or enter a bad state, things often return to normal automatically or with plug-and-play debugging workflows.

Kubernetes Architecture

Kubernetes clusters have two main parts: the control plane and the worker nodes. The control plane is the brain, making decisions and directing traffic, while the worker nodes are the brawn, executing the actual work. This division of labor is fundamental to Kubernetes’s power and scalability.

The control plane manages the entire cluster. It makes cluster-wide decisions, like scheduling, and responds to events, such as scaling a deployment when traffic spikes. Several key components comprise the control plane:

- API Server: The central communication hub for the cluster. All other components interact through the API server. It's essentially the front door to your Kubernetes cluster.

- Scheduler: This component determines where to run your pods (the smallest deployable units in Kubernetes, containing one or more containers). It considers factors like resource requests, constraints, and node health to make optimal placement decisions.

- Controller Manager: This runs a set of control loops, constantly monitoring the cluster's state. If the actual state differs from the desired state (defined in your configurations), the controller manager takes corrective action. For example, if a pod managed by a Deployment is lost, a controller creates a replacement.

- etcd: A distributed key-value store holding the cluster's state. All configuration data for your cluster resides here.

The worker nodes are the workhorses, running your containerized applications. Each node runs these key components:

- Kubelet: An agent on each node that communicates with the control plane. It receives instructions from the API server and manages the containers on that node.

- kube-proxy: A network proxy on each node that manages networking within the cluster. It maintains network rules and load balances traffic to your applications.

- Container Runtime: The software that runs containers. Common examples include containerd and CRI-O. Docker Engine can be used through the cri-dockerd adapter.

This architecture, with its clear separation of responsibilities and distributed design, enables Kubernetes to manage complex applications at scale. Contact Plural to discuss how we can streamline your Kubernetes management.

Kubernetes alternatives

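There are a number of alternatives to Kubernetes. The most commonly cited include Docker Swarm, Amazon ECS (Elastic Container Service), and Apache Mesos. Rancher often appears on such lists as well, although today it is primarily a platform for managing Kubernetes clusters rather than a replacement for Kubernetes.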

One of the more common questions we get asked is whether or not it makes sense to invest time and resources into Kubernetes. We ultimately believe that Kubernetes is worth the investment (in terms of engineering resources) for most organizations.

If you have the right engineers, enough time, and resources to effectively run and upkeep Kubernetes, then your organization is likely at a point where Kubernetes makes sense.

I understand that these are not trivial prerequisites, but if you can afford to hire a larger engineering team you are likely at a point where your users heavily depend on your product to be operating at peak performance constantly.

However, if you’re considering deploying open-source applications onto Kubernetes, it has never been easier to do so than with Plural.

It requires minimal understanding of Kubernetes to deploy and manage your resources, which is unique for the ecosystem.

If you like what we’re doing here, head over to our GitHub and check out our code, or better yet, try your hand at adding an application to our catalog.

Challenges of Kubernetes and When to Consider Alternatives

Kubernetes isn’t a perfect solution for every situation. While it offers powerful orchestration capabilities, it also presents certain challenges. Recognizing these challenges helps determine if Kubernetes is the right fit for your needs, or if alternative solutions might be more suitable. For teams looking to simplify Kubernetes adoption and management, platforms like Plural offer a streamlined approach.

High Costs

Beyond the time investment in DevOps expertise, Kubernetes can incur substantial infrastructure costs. The need for redundant management nodes and a tendency to over-provision resources to compensate for slower autoscaling contribute to these expenses. As Ben Houston points out in “I Didn’t Need Kubernetes,” these costs can become significant, especially for smaller projects or those with fluctuating workloads. If your team is struggling with the cost and complexity of managing Kubernetes infrastructure, consider exploring managed Kubernetes services or alternative platforms like Cloud Run.

Difficulty with Large Jobs

While Kubernetes is designed for scalability, it can encounter difficulties when managing large, complex jobs. Even with tools like Argo, the scheduler can struggle under heavy loads, adding another layer of complexity to an already intricate system. Again, Houston’s experience detailed in his article highlights these scaling challenges. For batch processing and large job management, consider exploring alternative solutions like Apache Spark or Hadoop.

Overwhelming Complexity

Kubernetes's extensive feature set, while beneficial for advanced use cases, can make even simple tasks complicated. This complexity often necessitates dedicated DevOps engineers, increasing both the financial and personnel investment required to manage a Kubernetes deployment effectively. This need for specialized expertise is a common theme among those who have explored alternatives, as highlighted in Houston’s piece. If your team lacks dedicated Kubernetes expertise, consider using a managed Kubernetes service or a platform like Plural to simplify management.

Slow Autoscaling

Compared to platforms like Cloud Run, Kubernetes’s autoscaling can be significantly slower. While Cloud Run can scale in seconds, Kubernetes often takes minutes, potentially impacting application performance and responsiveness during peak demand. This difference in scaling speed is a key factor in Houston’s decision to transition away from Kubernetes, as explained in his blog post. If rapid autoscaling is a critical requirement, explore platforms like Cloud Run or AWS Lambda.

Kubernetes Security Best Practices

Securing your Kubernetes deployments is paramount. Implementing robust security measures protects your applications and infrastructure from potential threats. Here are some key best practices to consider:

RBAC (Role-Based Access Control)

RBAC is fundamental to Kubernetes security. It allows you to granularly control access to cluster resources based on user roles. By defining roles and assigning them to users or groups, you can limit permissions and prevent unauthorized access. The Kubernetes Glossary provides a concise definition of RBAC and its importance. For simplified RBAC management, explore tools like Plural, which offer intuitive interfaces for managing roles and permissions.
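The role and binding model can be sketched in Python as a lookup from user to roles to rules. This is a toy model with hypothetical names (`pod-reader`, `alice`, `is_allowed`), not the actual authorizer, but it shows the key property that permissions are additive and anything not granted is denied.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Rule:
    resources: FrozenSet[str]
    verbs: FrozenSet[str]


# A role is a named list of rules; a binding attaches roles to a user.
roles: Dict[str, List[Rule]] = {
    "pod-reader": [Rule(frozenset({"pods"}), frozenset({"get", "list", "watch"}))],
}
bindings: Dict[str, List[str]] = {"alice": ["pod-reader"]}


def is_allowed(user: str, verb: str, resource: str) -> bool:
    """Allow the request only if some rule in some bound role grants it."""
    for role_name in bindings.get(user, []):
        for rule in roles.get(role_name, []):
            if resource in rule.resources and verb in rule.verbs:
                return True
    return False  # nothing granted the request, so it is denied


print(is_allowed("alice", "list", "pods"))
print(is_allowed("alice", "delete", "pods"))
print(is_allowed("bob", "get", "pods"))
```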

Pod Security Policies (Removed)

Pod Security Policies were deprecated in Kubernetes 1.21 and removed in 1.25 in favor of Pod Security Admission, but understanding them is still valuable when working with older clusters. They provided a way to control security-sensitive aspects of pod specifications, such as running privileged containers and access to the host network. The Kubernetes Glossary offers more details on Pod Security Policies and their function. Familiarize yourself with Pod Security Admission for current best practices.

The 4C's of Cloud Native Security

The 4C’s (Code, Container, Cluster, and Cloud) represent a comprehensive approach to cloud-native security. Addressing security concerns at each of these layers is crucial for a robust defense. The Kubernetes Security Best Practices documentation delves deeper into the 4C’s and their significance. Consider using security scanning tools and implementing security best practices at each layer.

Further Resources for Learning Kubernetes

Kubernetes has a steep learning curve, but numerous resources are available to help you expand your knowledge. For a comprehensive overview and deeper understanding of Kubernetes concepts, check out the official Kubernetes Documentation. Additionally, resources like Kubernetes Terminology You Need to Know by Appvia offer valuable insights into essential terminology and concepts. For a more streamlined approach to learning and deploying Kubernetes, explore platforms like Plural, which offer guided tutorials and simplified management tools.

Frequently Asked Questions

Why is Kubernetes so complex?

Kubernetes's complexity stems from its distributed nature and the numerous components involved in orchestrating containers. Managing networking, storage, security, and application lifecycle across a cluster of machines introduces inherent complexity. However, this complexity also brings flexibility and scalability, making Kubernetes suitable for managing large, complex applications.

When does Kubernetes make sense for my organization?

Kubernetes is a good fit if your applications require high availability, scalability, and automated management. If you're dealing with a growing number of containers and microservices, or if you need to deploy across multiple environments, Kubernetes can simplify these tasks. However, it's important to consider the operational overhead and the need for skilled DevOps engineers. If your team lacks Kubernetes expertise or your application is relatively simple, alternative solutions might be more suitable.

What are the main differences between Kubernetes and Docker?

Docker is a containerization technology that allows you to package and run applications in isolated environments. Kubernetes, on the other hand, is a container orchestration platform that manages and scales containers, whether built with Docker or other tools, across a cluster of machines. Think of Docker as the tool for building and running individual containers, while Kubernetes is the system for managing those containers at scale.

What are some real-world examples of how companies use Kubernetes?

Companies like Google, Amazon, Spotify, and Airbnb use Kubernetes to manage their containerized applications. They leverage Kubernetes to automate deployments, scale their applications based on demand, and improve application reliability. For example, a streaming service might use Kubernetes to scale its video processing services during peak viewing hours, ensuring smooth playback for millions of users.

How can I simplify Kubernetes management?

Several tools and platforms simplify Kubernetes management. Managed Kubernetes services from cloud providers like AWS, GCP, and Azure handle the underlying infrastructure, reducing operational overhead. Platforms like Plural offer a streamlined approach to deploying and managing applications on Kubernetes, abstracting away some of the complexity and providing user-friendly interfaces. Choosing the right tool depends on your specific needs and technical expertise.
