Local Kubernetes: A Comprehensive Guide to Local Development

Master local Kubernetes development with this comprehensive guide. Learn how to set up, configure, and optimize your local environment for efficient testing.

Kubernetes has become the de facto standard for container orchestration, but managing a production-ready cluster can be daunting. Before deploying your application to a live environment, setting up a local Kubernetes cluster provides a safe and efficient way to test and refine your configurations. This approach saves on cloud costs and allows for rapid iteration and experimentation.

This article explores the benefits of local Kubernetes development, guiding you through the setup process, popular tools like Minikube and kind, and best practices for managing your local cluster. Whether you're new to Kubernetes or a seasoned engineer, mastering local development is crucial.

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key Takeaways

- Develop and test efficiently with local Kubernetes: Tools like Minikube and kind provide a cost-effective sandbox for building and debugging applications within a Kubernetes environment, saving on cloud resources and accelerating development cycles.

- Select the right tool for the job: Minikube is excellent for single-node setups, while kind runs cluster nodes as containers on an existing container runtime. Docker Desktop integrates seamlessly with Docker workflows, and k3d facilitates multi-node experimentation. Choose the tool that best suits your project's needs and resource constraints.

- Look beyond local development: While local Kubernetes environments are ideal for prototyping and early-stage development, scaling to production, multi-region, or multi-cloud deployments introduces new challenges. Plural is designed to help teams move beyond these limitations and confidently manage Kubernetes at scale.

What is Local Kubernetes?

Local Kubernetes refers to running a Kubernetes cluster directly on your computer, whether a Mac, Linux, or Windows machine. This setup empowers developers to design, develop, test, and debug applications within a Kubernetes environment without needing a remote cluster in the cloud or a data center. It's a fundamental tool for learning Kubernetes, experimenting with configurations, and validating application behavior before deploying to production. For those new to Kubernetes, running a local cluster offers a practical, hands-on experience with the platform.

How Local Kubernetes Works

Various tools simplify running Kubernetes locally, each with its own approach and advantages. Generally, these tools run the Kubernetes components inside a virtual machine or a container on your computer, isolated from the rest of the host. You could download and install each component yourself, but tools like Minikube and kind streamline this by bundling everything into a user-friendly package. These tools abstract away much of the underlying complexity, allowing developers to concentrate on building and deploying their applications.

Why Use Local Kubernetes for Development?

Running Kubernetes locally offers several key advantages for development workflows, enabling faster iteration, reduced costs, and improved reliability.

Cost-Effective Testing

Testing your Kubernetes deployments in the cloud can quickly rack up expenses. A local Kubernetes cluster lets you test thoroughly before deploying to a live environment, saving on cloud resource costs.

Simulate Production

Tools like Minikube allow you to run a single-node Kubernetes cluster on your local machine, providing a lightweight, production-like environment for development and testing. This empowers you to identify and address potential issues early in the development cycle.

Iterate and Experiment Quickly

Local Kubernetes clusters provide a sandbox for rapid iteration and experimentation. A local environment offers a safe and efficient way to refine your application deployment process. The ability to quickly test changes and experiment with new features without impacting a live environment accelerates development cycles and fosters innovation.

Popular Local Kubernetes Tools

Choosing the right local Kubernetes tool depends on your specific needs and resources. Here’s a breakdown of popular options:

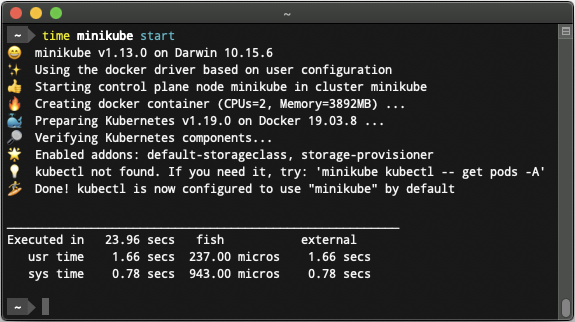

Minikube: Single-Node Clusters

Minikube is an open-source tool for getting started with Kubernetes. By default, it sets up a single-node cluster on your local machine (Mac, Linux, or Windows), making it ideal for learning, experimenting, and developing simple applications. A single-node setup simplifies initial configuration and requires fewer resources than a multi-node cluster.

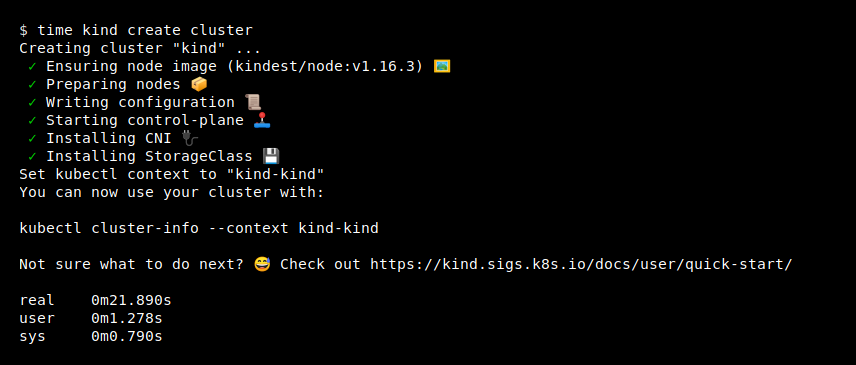

kind: Lightweight Clusters

kind (Kubernetes IN Docker) is another excellent choice. It was designed primarily for testing Kubernetes itself, but it works well for local development too. kind runs each cluster node as a container on a runtime you may already use, Docker or Podman, which makes it a lightweight, resource-efficient option.
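kind reads its cluster topology from a YAML config file. As a minimal sketch, the script below writes a config with one control-plane node and two workers, then creates the cluster if kind is installed. The file name kind-multinode.yaml and the cluster name dev are illustrative choices, not kind defaults.

```shell
# Write a kind cluster config: one control-plane node and two worker nodes.
# The file name is an arbitrary choice for this example.
cat > kind-multinode.yaml <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
  - role: worker
  - role: worker
EOF

# Create the cluster only if kind is available; each node runs as a container.
if command -v kind >/dev/null 2>&1; then
  kind create cluster --name dev --config kind-multinode.yaml
else
  echo "kind not found; skipping cluster creation" >&2
fi
```

kind sets your kubectl context to kind-dev, so kubectl get nodes should list three nodes. Remove the cluster with kind delete cluster --name dev.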

Other Options: Docker Desktop, k3d

Beyond Minikube and kind, other tools cater to specific use cases:

- Docker Desktop: If you’re already using Docker Desktop, its integrated Kubernetes server offers a convenient way to spin up a local cluster. This simplifies your workflow by keeping your Kubernetes environment within the familiar Docker Desktop interface.

- k3d: For those interested in experimenting with multi-node setups, k3d provides a lightweight way to run k3s (a minimal Kubernetes distribution by Rancher) in Docker. This allows you to simulate more complex, production-like environments while maintaining the convenience of a local setup.
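To try the multi-node setup that k3d enables, here is a sketch wrapped in a small helper function. The --servers and --agents flags set k3d's control-plane and worker node counts. The function name k3d_dev_cluster and its defaults are hypothetical.

```shell
# Create a k3d cluster with one server (control-plane) node and a configurable
# number of agent (worker) nodes; each node runs as a Docker container.
# Hypothetical helper: the function name and defaults are illustrative.
k3d_dev_cluster() {
  local name="${1:-dev}"      # cluster name, "dev" if omitted
  local agents="${2:-2}"      # number of worker nodes, 2 if omitted
  k3d cluster create "$name" --servers 1 --agents "$agents"
}
```

For example, k3d_dev_cluster demo 3 would create a four-node cluster named demo, and k3d switches your kubectl context to k3d-demo. If you use Docker Desktop instead, enable Kubernetes in its settings and select its cluster with kubectl config use-context docker-desktop.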

Set Up Your Local Kubernetes Environment

This section guides you through setting up a local Kubernetes environment, from prerequisites to cluster configuration.

Prerequisites and System Requirements

Before diving in, ensure your system meets the requirements. The Kubernetes control plane only runs on Linux (worker nodes can run Linux or Windows). Local tools such as Minikube and kind handle this by running the cluster inside a Linux virtual machine or container, so a Mac, Linux, or Windows host works. You will need a container or virtual machine manager, such as Docker, and enough resources for smooth operation: Minikube's documentation lists at least 2 CPUs, 2 GB of free memory, and 20 GB of free disk space.
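Before installing anything, you can run a rough pre-flight check against those minimums. This is a Linux-only sketch that assumes nproc, /proc/meminfo, and POSIX df are available. It measures free disk on the current filesystem, which may not be where your tool stores its data.

```shell
# Rough pre-flight check against Minikube's documented minimums.
# Linux-only sketch: relies on nproc, /proc/meminfo, and POSIX df output.
min_cpus=2
min_mem_kb=$((2 * 1024 * 1024))     # 2 GiB expressed in kB
min_disk_kb=$((20 * 1024 * 1024))   # 20 GiB expressed in kB

cpus=$(nproc)
mem_kb=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)
mem_kb=${mem_kb:-0}                 # treat a missing field as zero
disk_kb=$(df -Pk . | awk 'NR == 2 {print $4}')   # free kB on the current filesystem

status=ok
if [ "$cpus" -lt "$min_cpus" ]; then
  echo "CPUs: $cpus (need at least $min_cpus)"; status=insufficient
fi
if [ "$mem_kb" -lt "$min_mem_kb" ]; then
  echo "Available memory: $mem_kb kB (need at least $min_mem_kb kB)"; status=insufficient
fi
if [ "$disk_kb" -lt "$min_disk_kb" ]; then
  echo "Free disk: $disk_kb kB (need at least $min_disk_kb kB)"; status=insufficient
fi
echo "Pre-flight check: $status"
```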

Install Local Kubernetes

Minikube is a popular choice for local Kubernetes development, allowing you to run a single-node cluster directly on your machine (Mac, Linux, or Windows). Its simplicity makes it ideal for learning and testing Kubernetes applications. Refer to Minikube's official getting started guide for detailed installation instructions tailored to your operating system.

# Start a cluster by running:

minikube start

# Access the Kubernetes dashboard running within the minikube cluster:

minikube dashboard

Deploy An Application

Once the local Kubernetes cluster has started, you can interact with it using kubectl, just like any other Kubernetes cluster. For example, to deploy a simple echo server:

kubectl create deployment hello-minikube --image=kicbase/echo-server:1.0

Minikube makes it easy to expose a service as a NodePort and open the exposed endpoint in your browser:

kubectl expose deployment hello-minikube --type=NodePort --port=8080

minikube service hello-minikube
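The imperative commands above work well for quick experiments. A manifest file, by contrast, can be committed to version control and re-applied later. Below is a minimal sketch of an equivalent Deployment and NodePort Service, assuming the same kicbase/echo-server:1.0 image listening on port 8080. The file name is an arbitrary choice, and the app: hello-minikube label mirrors the label kubectl create deployment applies.

```shell
# Write a Deployment plus NodePort Service equivalent to the
# kubectl create deployment / kubectl expose commands above.
cat > hello-minikube.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-minikube
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello-minikube
  template:
    metadata:
      labels:
        app: hello-minikube
    spec:
      containers:
        - name: echo-server
          image: kicbase/echo-server:1.0
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: hello-minikube
spec:
  type: NodePort
  selector:
    app: hello-minikube
  ports:
    - port: 8080
      targetPort: 8080
EOF
```

Apply it with kubectl apply -f hello-minikube.yaml, then open it with minikube service hello-minikube as before.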

Configure Your Cluster

After installing Minikube, you can tune the cluster's resources, Kubernetes version, and driver. Most Minikube configuration is done through flags passed to minikube start. To see which flags are available, run:

minikube start --help

For more details on cluster configuration, refer to the minikube configuration page.
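As an illustration, the helper below sizes the cluster explicitly instead of relying on defaults. The values (4 CPUs, 8 GB of memory, the docker driver) are examples rather than recommendations; leave headroom for your host. The function name start_dev_minikube is hypothetical, while --driver, --cpus, and --memory are standard minikube start flags.

```shell
# Start Minikube with explicit resources instead of the defaults.
# Hypothetical helper: the function name and default values are illustrative.
start_dev_minikube() {
  local cpus="${1:-4}"
  local memory="${2:-8g}"   # minikube accepts units such as 4096mb or 8g
  minikube start --driver=docker --cpus="$cpus" --memory="$memory"
}

# Alternatively, persist defaults that later `minikube start` runs pick up:
#   minikube config set cpus 4
#   minikube config set memory 8192
```

CPU and memory are fixed when the cluster is created. To apply new values to an existing cluster, run minikube delete first and then start it again.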

Best Practices for Local Kubernetes Development

Fine-tuning your local Kubernetes development workflow involves adopting best practices. These practices ensure your local environment mirrors production as closely as possible, allowing for efficient testing and debugging.

Manage Resources and Optimize Performance

Resource management is crucial, even in a local environment. Defining resource requests and limits for your containers keeps your applications running smoothly without overwhelming your local machine. Requests tell the scheduler how much CPU and memory to reserve for a container, while limits cap what it can consume. A container that exceeds its CPU limit is throttled, and one that exceeds its memory limit can be OOM-killed. Setting these parameters helps optimize performance and simulate resource constraints you might encounter in production. The Kubernetes documentation on managing container resources covers the details.
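A minimal sketch of requests and limits in a Pod manifest follows. The pod name, container name, image, and values are illustrative; size them from what your application actually uses.

```shell
# Write a Pod manifest whose container declares CPU/memory requests and limits.
# Names and values are illustrative.
cat > resource-demo.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: resource-demo
spec:
  containers:
    - name: app
      image: kicbase/echo-server:1.0
      resources:
        requests:
          cpu: "250m"       # a quarter of a CPU reserved by the scheduler
          memory: "64Mi"
        limits:
          cpu: "500m"       # throttled above half a CPU
          memory: "128Mi"   # may be OOM-killed above this
EOF
```

After kubectl apply -f resource-demo.yaml, kubectl describe pod resource-demo shows the values under Requests and Limits. It also shows the QoS class, which is Burstable here because the requests are lower than the limits.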

Integrate Version Control

Managing your Kubernetes configurations (YAML manifests, Helm charts) with a version control system like Git is essential. This practice enables you to track changes, collaborate effectively with your team, and easily revert to previous configurations if needed. Version control provides a safety net, allowing you to experiment freely while knowing you can always restore a working state.

Debug and Troubleshoot

Troubleshooting is an inevitable part of development. Familiarize yourself with standard Kubernetes debugging tools and techniques. Use kubectl logs to check the logs of your application pods, identify errors, and understand application behavior. Use kubectl describe to retrieve detailed information about resources, including their current status, events, and configuration. Ensure your resource requests and limits are set appropriately, as resource contention can lead to unexpected application behavior. For additional troubleshooting tips, see Plural's Troubleshoot Kubernetes Deployments: A Practical Guide.
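These commands can be bundled into a first-pass diagnostic for a single Deployment. This is a sketch with a hypothetical function name. It assumes the pods carry the label app=<deployment name>, which is the label kubectl create deployment sets.

```shell
# Collect first-pass diagnostics for one Deployment.
# Hypothetical helper: assumes pods are labeled app=<deployment name>.
debug_deployment() {
  local name="$1"
  kubectl get pods -l "app=${name}" -o wide           # pod status, restarts, node
  kubectl describe deployment "$name"                 # rollout status and conditions
  kubectl logs "deployment/${name}" --tail=50         # recent logs from one of its pods
  kubectl get events --sort-by=.metadata.creationTimestamp   # recent cluster events, oldest first
}
```

For example, debug_deployment hello-minikube. For a pod stuck in a crash loop, kubectl logs <pod-name> --previous shows the output of the last terminated container.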

Overcome Common Local Kubernetes Development Challenges

Local Kubernetes development, while beneficial, presents unique challenges.

Solve Networking Issues

Networking can be tricky within the confines of a local Kubernetes setup, especially when simulating service interactions or accessing external resources. Exposing local services often requires non-trivial configuration. While kubectl port-forward helps you reach individual pods and services, an ingress controller becomes essential for more complex routing scenarios. Managing Kubernetes clusters across different environments introduces further complexity, often demanding specialized solutions for networking, data synchronization, and consistency. The Kubernetes documentation explains how to set up Ingress on Minikube with the NGINX Ingress Controller.
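A minimal Ingress in the spirit of that Kubernetes guide routes a hostname to the hello-minikube Service from the deploy step. It assumes the NGINX Ingress Controller installed by Minikube's ingress add-on, which registers the nginx IngressClass. The host hello.local is a placeholder.

```shell
# Ingress routing hello.local to the hello-minikube Service on port 8080.
# Assumes the NGINX Ingress Controller from `minikube addons enable ingress`.
cat > hello-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-ingress
spec:
  ingressClassName: nginx
  rules:
    - host: hello.local
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: hello-minikube
                port:
                  number: 8080
EOF
```

Run minikube addons enable ingress first, then kubectl apply -f hello-ingress.yaml. One way to test the route is curl --resolve "hello.local:80:$(minikube ip)" http://hello.local/. With some drivers, such as Docker on macOS, you need minikube tunnel instead. For a single Service, kubectl port-forward service/hello-minikube 8080:8080 is often enough, since it forwards localhost:8080 to the Service without any Ingress.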

Manage Stateful Applications

Running stateful applications, such as databases, locally requires careful handling of persistent data. Ensure your local Kubernetes environment supports PersistentVolumes so that data survives pod restarts and rescheduling. For instance, minikube supports PersistentVolumes of type hostPath out of the box. These PersistentVolumes are mapped to a directory inside the running minikube instance.
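Below is a minimal sketch of a PersistentVolumeClaim and a Pod that mounts it. It assumes Minikube's default StorageClass, named standard, which provisions hostPath-backed volumes inside the Minikube node. The claim name, size, and busybox test pod are illustrative stand-ins for a real database.

```shell
# Write a PersistentVolumeClaim plus a Pod that appends to a file on it.
# Assumes Minikube's default "standard" StorageClass; names and sizes are illustrative.
cat > pv-demo.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: db-data
spec:
  storageClassName: standard
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pv-demo
spec:
  containers:
    - name: app
      image: busybox:1.36
      # Append a timestamp on every start, then stay alive.
      command: ["sh", "-c", "date >> /data/boot-log && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: db-data
EOF
```

After applying the file, delete and re-create only the pv-demo Pod. kubectl exec pv-demo -- cat /data/boot-log should then show one line per start, because the file lives on the claimed volume rather than in the container's filesystem.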

Address Resource Constraints

Local machines, unlike cloud environments, have limited resources. Running multiple services or resource-intensive applications can quickly strain your system. Precisely defining resource requests and limits for your pods and containers is crucial. This practice optimizes performance and prevents resource starvation, ensuring critical services receive the necessary resources. Monitor resource usage with tools like kubectl top and adjust limits as needed to maintain a stable and efficient local Kubernetes environment.
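kubectl top reads from the Metrics API, so the cluster needs metrics-server. On Minikube, you can enable it with minikube addons enable metrics-server. The helper below is a sketch with a hypothetical name that shows node usage and the most memory-hungry pods across namespaces.

```shell
# Show current CPU and memory usage for nodes and pods.
# Requires metrics-server; on Minikube: minikube addons enable metrics-server
# Hypothetical helper: the function name is illustrative.
show_resource_usage() {
  kubectl top nodes
  kubectl top pods --all-namespaces --sort-by=memory   # heaviest pods first
}
```

Compare these numbers with your requests and limits. A pod that routinely runs near its memory limit is a candidate for a higher limit, or for a closer look at its memory use.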

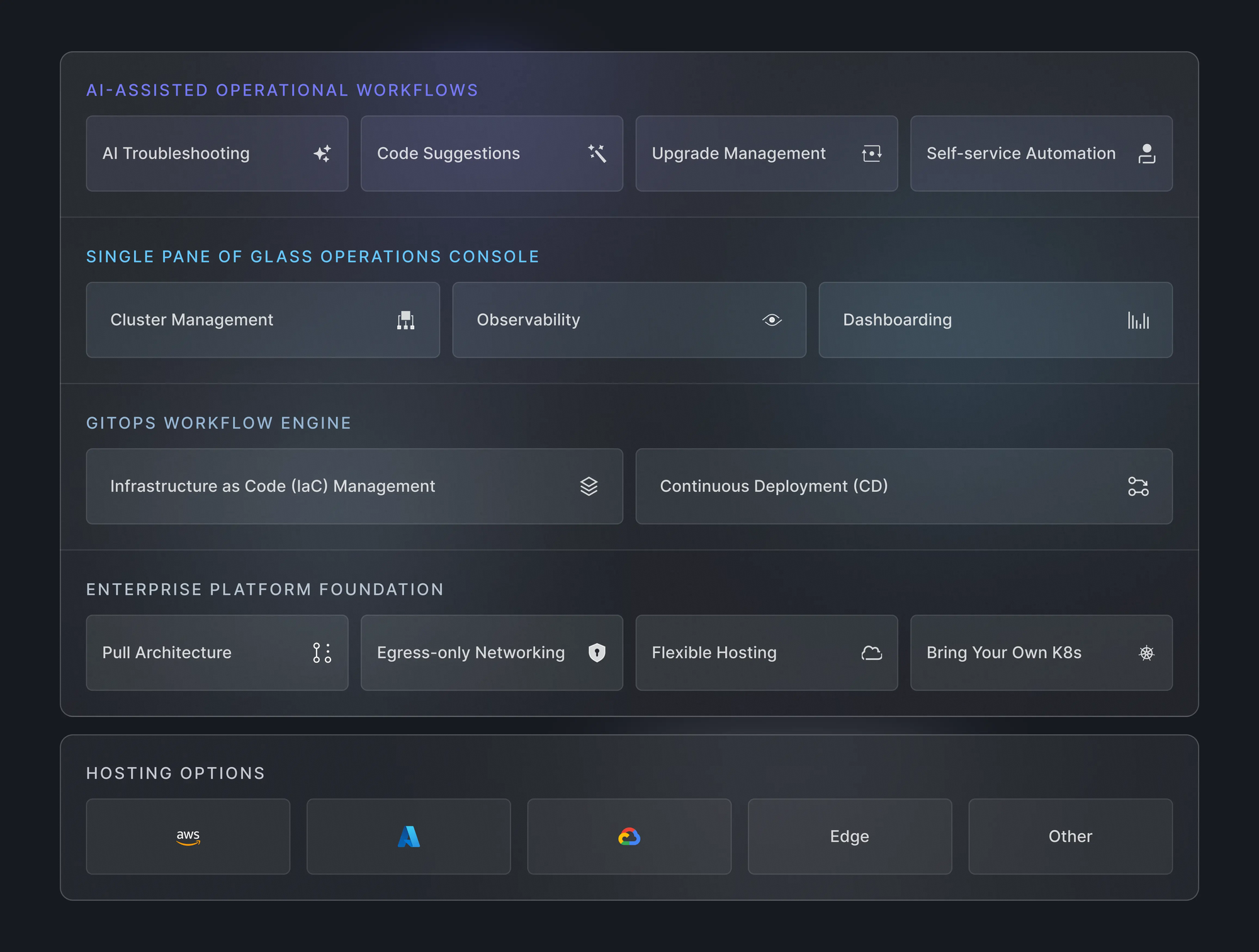

Beyond Local Kubernetes Development

While local Kubernetes environments are ideal for prototyping and early-stage development, scaling to production, multi-region, or multi-cloud deployments introduces new challenges: complexity, security risk, and operational overhead all increase rapidly. Plural is designed to help teams move beyond these limitations and manage Kubernetes at scale with confidence.

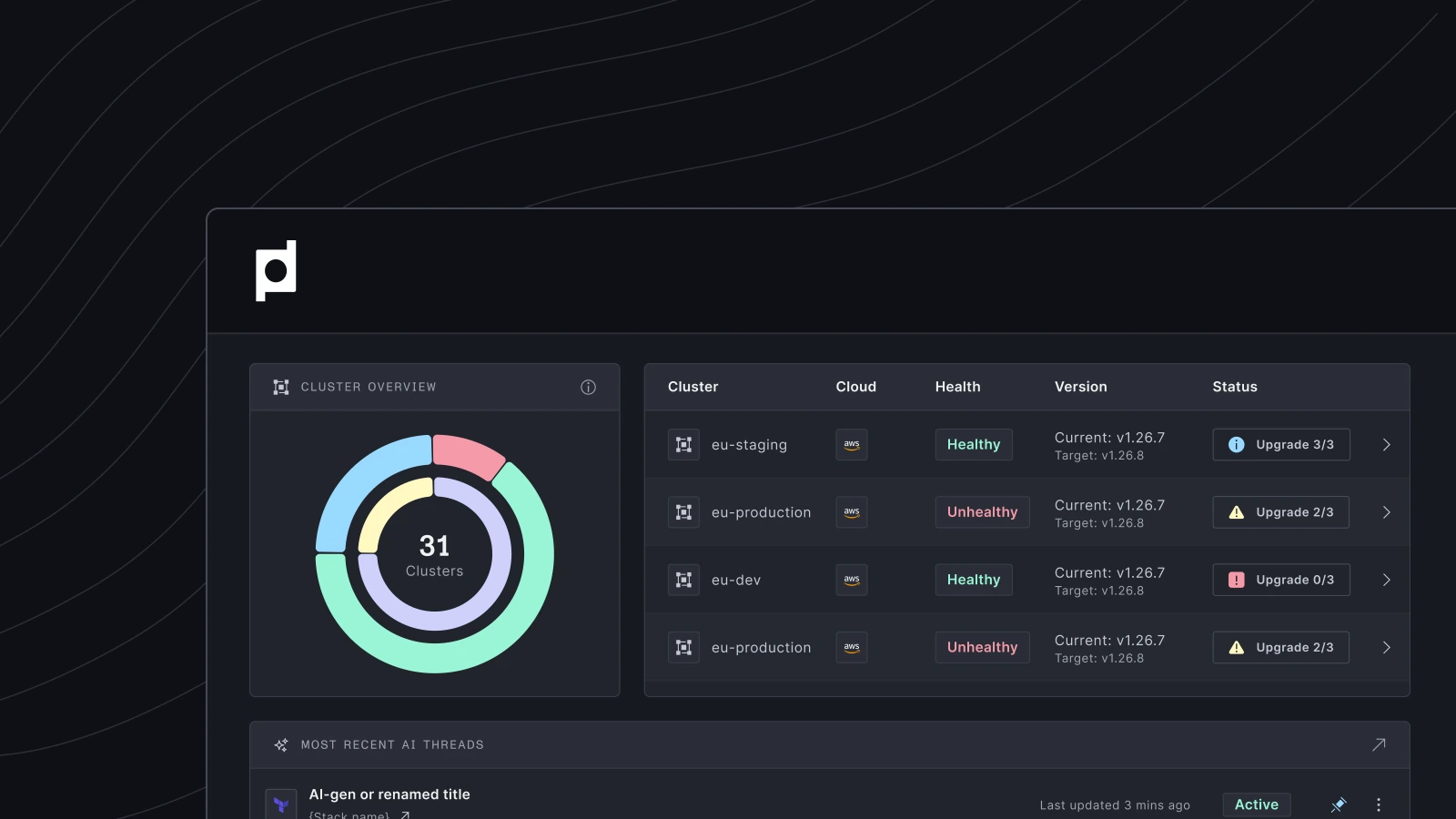

Plural helps teams run multi-cluster, complex K8s environments at scale by combining an intuitive, single pane of glass interface with advanced AI troubleshooting capabilities that leverage a unique vantage point into your Kubernetes environment. Plural helps you save time, focus on innovation, and reduce organizational risk.

Plural Fleet Management

Monitor your entire environment from a single dashboard

Stay on top of your environment's clusters, workloads, and resources in one place. Gain real-time visibility into cluster health, status, and resource usage. Maintain control and consistency across clusters.

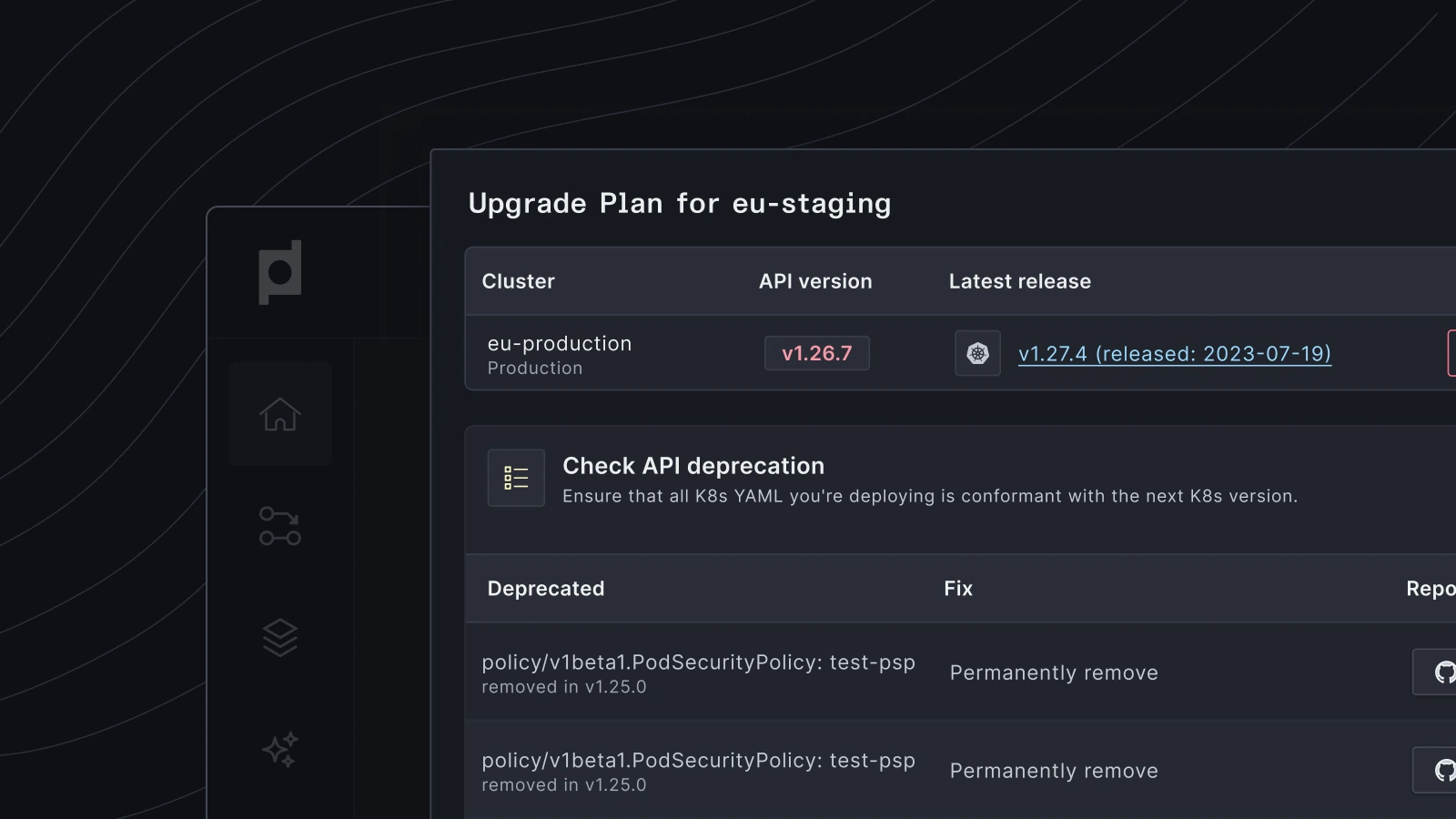

Manage and de-risk complex deployments and upgrades

Reduce the risks associated with deployments, maintenance, and upgrades by combining automated workflows with the flexibility of built-in Helm charts.

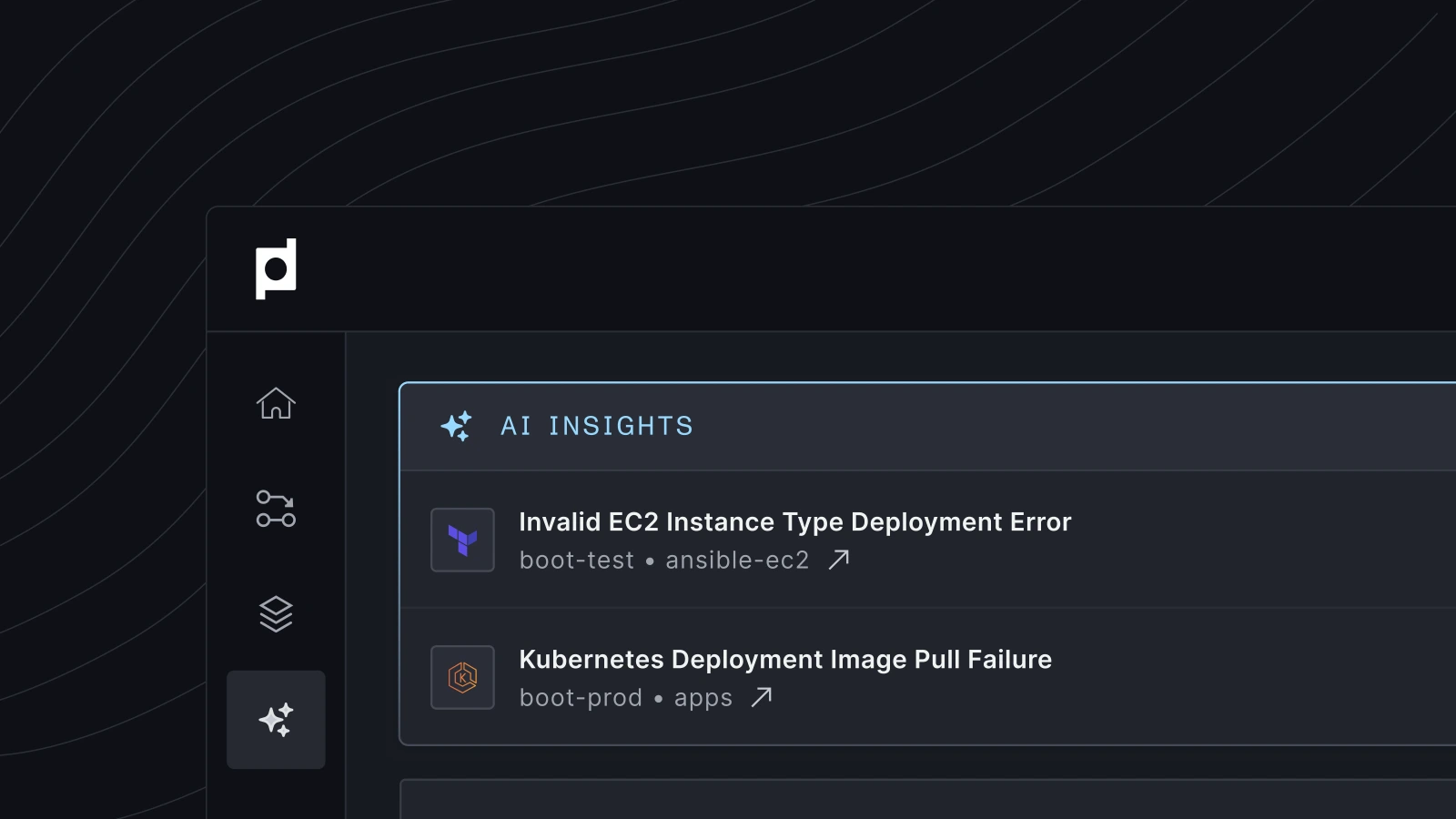

Solve complex operations issues with the help of AI

Identify, understand, and resolve complex issues across your environment with AI-powered diagnostics. Save valuable time spent on root cause analysis and reduce the need for manual intervention.

Frequently Asked Questions

Why should I use a local Kubernetes cluster instead of a cloud-based one for development?

Local Kubernetes clusters offer a cost-effective, isolated environment for development and testing. You can experiment freely without incurring cloud computing costs, iterate quickly on changes, and closely simulate your production environment before deploying, reducing the risk of unexpected issues. It's also a great way to learn Kubernetes without access to cloud resources.

What are the key differences between Minikube and Kind?

By default, Minikube sets up a single-node Kubernetes cluster inside a virtual machine or container. It adds conveniences such as add-ons and the minikube service command, making it easy to get started. kind runs each Kubernetes node as a Docker (or Podman) container and lets you declare multi-node clusters in a config file, which makes it popular for testing and CI. Both tools can run multi-node clusters; Minikube does so via minikube start --nodes. Choose Minikube for simplicity and ease of use, especially when starting with Kubernetes. Opt for kind when you want lightweight, quickly re-creatable clusters or are already working extensively with Docker.

How do I manage resources effectively in my local Kubernetes cluster?

Resource management is crucial even locally. Define resource requests and limits for your pods and containers in your Kubernetes manifests. Requests specify the minimum resources a container needs, while limits prevent it from consuming excessive resources. This practice ensures your applications run smoothly without overwhelming your local machine and helps simulate resource constraints you might encounter in production. Use tools like kubectl top to monitor resource usage and adjust limits as needed.

What are some common challenges with local Kubernetes development, and how can I overcome them?

Networking, managing stateful applications, and resource constraints are common challenges. Use an ingress controller for complex networking scenarios, PersistentVolumes for stateful applications, and carefully manage resource requests and limits to avoid overwhelming your local machine. Be prepared to troubleshoot networking issues and understand how to configure persistent storage effectively.

How can I take my local Kubernetes development to the next level?

Integrate your local development workflow with a CI/CD pipeline to automate building, testing, and deploying your applications. Plan for the eventual transition to a cloud-based Kubernetes cluster for production deployments to leverage scalability and reliability. To manage multiple local or remote clusters, explore fleet management tools like Plural to streamline operations and maintain consistency.
