Plural: Now with AI that Understands Your Infrastructure Stack

Plural's new AI capabilities bring the closest thing to Cursor-level integrated AI workflows to DevOps, with semantic understanding of your entire infrastructure stack and the ability to automate the repeatable work in your DevOps workflow on your behalf.

Every DevOps engineer knows the frustration: you need to find a specific resource buried somewhere in your infrastructure stack, but you can't remember which Terraform file it's in, which cluster it's deployed to, or what the exact configuration looks like. The traditional approach is both time-consuming and error-prone: bouncing between poorly documented operator sites, hunting through multiple repositories, and manually piecing together how your distributed systems actually work.

For teams managing complex cloud-native infrastructure, these daily friction points add up to significant productivity losses. While AI tools like Cursor have revolutionized code editing with deep contextual understanding, DevOps teams are left with generic chatbots that neither understand the nuances of infrastructure management nor have access to live system data.

Today, that changes. Plural's new AI capabilities bring the closest thing to Cursor-level integrated AI workflows to DevOps, with semantic understanding of your entire infrastructure stack and the ability to automate the repeatable work in your DevOps workflow on your behalf.

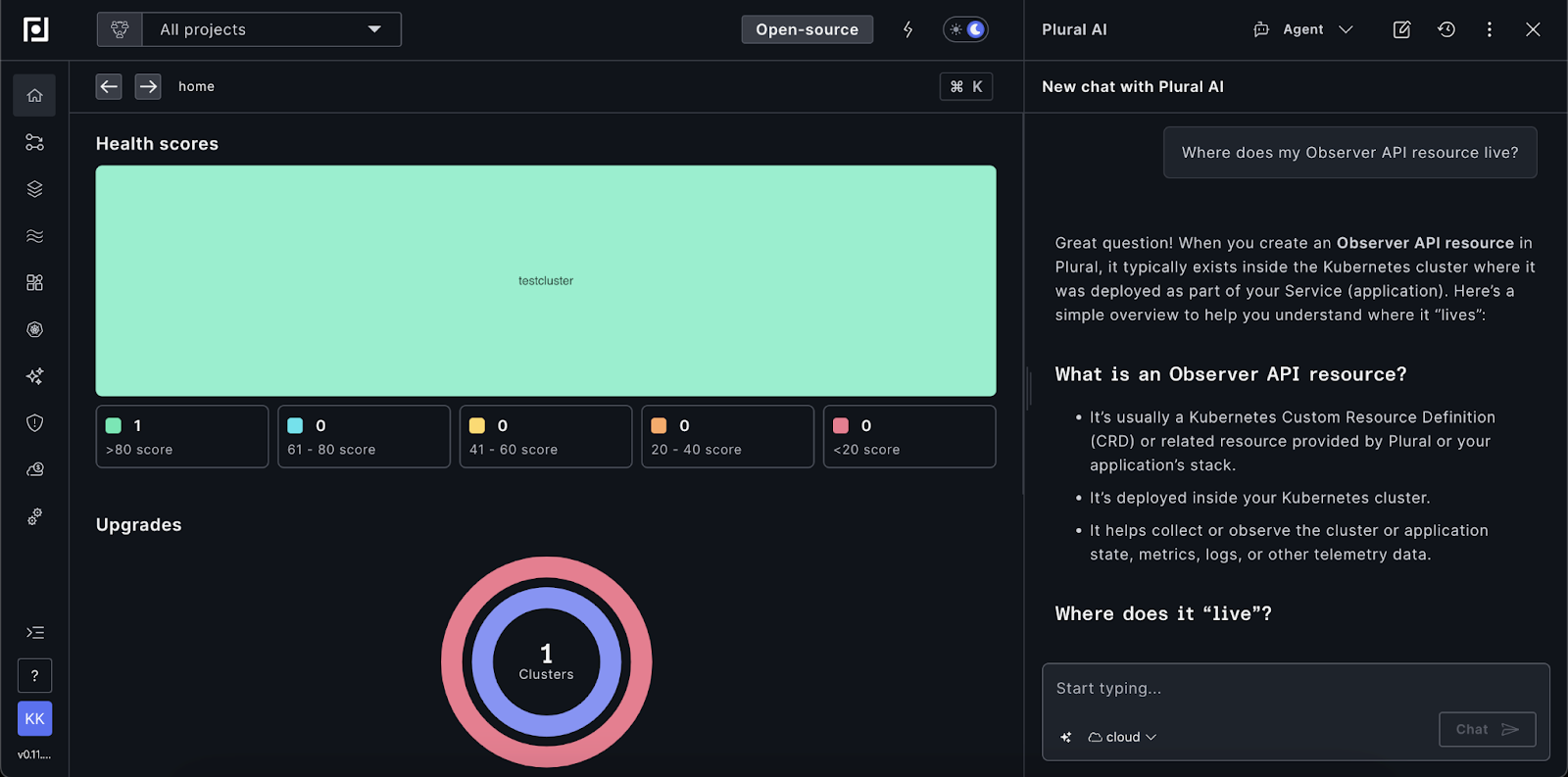

Real-time Infrastructure Intelligence

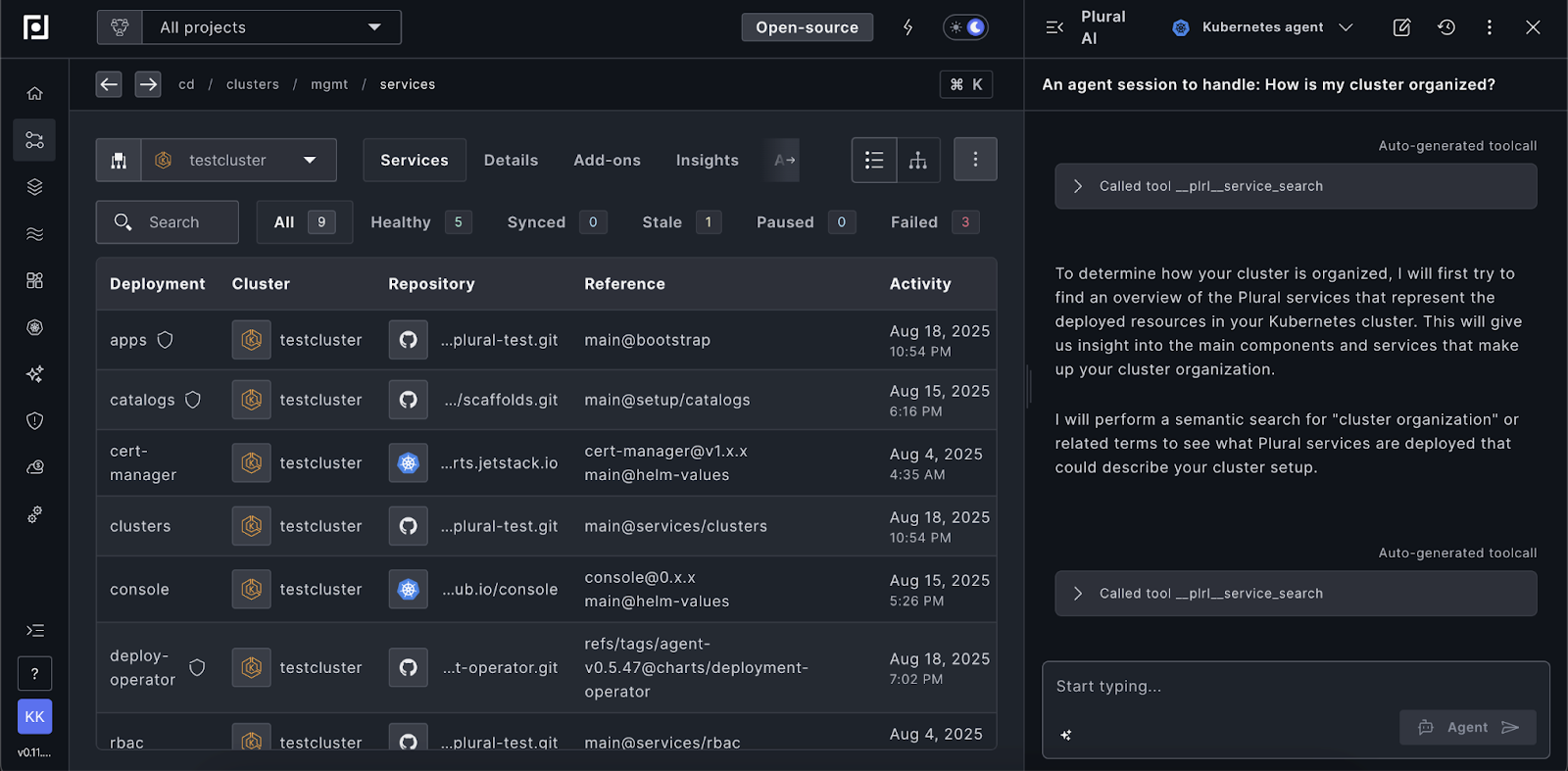

The foundation of Plural's AI upgrade is semantic vector indexing of your infrastructure's source data. Every Terraform state graph gets indexed, along with Plural service information and the complete hierarchy of objects you're instantiating. Instead of just matching keywords, the AI develops a contextual understanding of how your infrastructure components relate to each other.

When you ask, "Where does my Observer API resource live?" Plural AI doesn't just search file names. It understands the relationships between your resources, queries live Kubernetes clusters when you have the appropriate RBAC permissions, and can fetch and describe actual configurations in real-time.

This permission-aware approach ensures you only see information you're authorized to access, while the live querying capability means you're always working with current data. No more second-guessing whether that configuration file reflects what's actually running in production.
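To make the idea concrete, here is a minimal sketch of a permission-aware semantic lookup. It is not Plural's implementation: the `IndexedResource` type, the sample paths, and the cluster names are all hypothetical, and a bag-of-words count stands in for a real embedding model. What it does show is the shape of the flow: filter by what the caller is allowed to see, then rank by similarity to the question.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class IndexedResource:
    path: str         # hypothetical location of the resource definition
    description: str  # text that a real system would embed
    cluster: str      # cluster the resource is deployed to


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term count."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(query, index, allowed_clusters, top_k=3):
    """Drop resources the caller cannot see, then rank the rest by similarity."""
    q = embed(query)
    visible = [r for r in index if r.cluster in allowed_clusters]
    ranked = sorted(
        visible, key=lambda r: cosine(q, embed(r.description)), reverse=True
    )
    return ranked[:top_k]


# Hypothetical index entries, for illustration only.
INDEX = [
    IndexedResource("stacks/observer/main.tf", "observer api service deployment", "prod"),
    IndexedResource("stacks/es/main.tf", "elasticsearch cluster nodes", "staging"),
    IndexedResource("apps/observer/values.yaml", "observer api helm values", "staging"),
]

for hit in search("observer api", INDEX, allowed_clusters={"staging"}):
    print(hit.path)
```

Filtering before ranking means a resource the caller has no access to can never appear in the results, however well it matches the query.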

Three Modes of Intelligent Assistance

Plural's AI operates in three distinct modes, each optimized for different DevOps workflows.

Search Mode: Find Anything, Anywhere

Beyond simple resource location, search mode transforms how you explore your infrastructure. Ask the AI to find specific Terraform stacks, and it will return file paths along with descriptions of how resources are configured, their security group rules, and contextual information about dependencies. The AI can search across repository boundaries and even query live Kubernetes clusters to fetch real-time resource states.

Provisioning Mode: Self-service Infrastructure Deployment

When you need to deploy a new service, provisioning mode acts as an intelligent guide through your organization's service catalogs and PR automation workflows. Tell the AI you need Dagster for data orchestration, and it will search through available catalogs, present deployment options, and walk you through the entire provisioning process—from selecting the right cluster to configuring storage buckets with unique naming conventions.

This whole process works seamlessly with your existing service catalogs. The AI uses native tool calls integrated with Plural's platform, so any user with appropriate permissions can trigger the full self-service workflow.

Manifest Generation: Accurate Configs for Custom Operators

How do you efficiently verify the correct YAML structure for a custom resource definition that was built internally and isn't documented anywhere online? Traditionally, this would involve hunting through source code or poorly maintained documentation sites.

Manifest generation mode solves this by directly querying the Kubernetes API discovery endpoint to find the actual custom resource definition being used, then generating accurate configurations based on that live API specification. This is particularly powerful for internal operators that wouldn't be in any large language model's training data, ensuring you always get current, accurate manifests.
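As a rough illustration of why the live definition is enough to produce a valid starting point, the sketch below builds a skeleton manifest from a CustomResourceDefinition object (for example, the JSON you would get from `kubectl get crd <name> -o json`). It is an assumption-laden sketch, not Plural's code: the `Widget` CRD, its group, and its fields are hypothetical, and only required fields are filled in.

```python
from typing import Any

# Placeholder values for scalar types when the schema offers no default or enum.
PLACEHOLDERS = {"string": "", "integer": 0, "number": 0.0, "boolean": False}


def skeleton(schema: dict) -> Any:
    """Build a minimal value that satisfies an OpenAPI v3 schema fragment."""
    if "default" in schema:
        return schema["default"]
    if schema.get("enum"):
        return schema["enum"][0]
    kind = schema.get("type")
    if kind == "object":
        props = schema.get("properties", {})
        # Only required fields are emitted, to keep the manifest minimal.
        return {
            name: skeleton(props[name])
            for name in schema.get("required", [])
            if name in props
        }
    if kind == "array":
        return [skeleton(schema.get("items", {}))]
    return PLACEHOLDERS.get(kind)


def manifest_from_crd(crd: dict, name: str) -> dict:
    """Generate a skeleton manifest for the CRD's storage version."""
    spec = crd["spec"]
    version = next(v for v in spec["versions"] if v.get("storage"))
    root = version["schema"]["openAPIV3Schema"]
    spec_schema = root.get("properties", {}).get("spec", {"type": "object"})
    return {
        "apiVersion": f"{spec['group']}/{version['name']}",
        "kind": spec["names"]["kind"],
        "metadata": {"name": name},
        "spec": skeleton(spec_schema),
    }


# Hypothetical internal CRD, trimmed to the fields this sketch reads.
WIDGET_CRD = {
    "spec": {
        "group": "example.internal",
        "names": {"kind": "Widget"},
        "versions": [{
            "name": "v1alpha1",
            "served": True,
            "storage": True,
            "schema": {"openAPIV3Schema": {
                "type": "object",
                "properties": {"spec": {
                    "type": "object",
                    "required": ["size", "replicas"],
                    "properties": {
                        "size": {"type": "string", "enum": ["small", "large"]},
                        "replicas": {"type": "integer", "default": 1},
                        "notes": {"type": "string"},
                    },
                }},
            }},
        }],
    }
}

print(manifest_from_crd(WIDGET_CRD, "demo"))
```

Because every field name and type comes from the schema the cluster is actually serving, nothing in the output depends on what a language model happened to see during training.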

Real-world Example: Brand-new APIs

Consider Plural's recently released Federated Credentials resource, an API that's only been available for a week or two. This resource definition doesn't exist in the training data of any large language model, making it impossible for generic AI tools to provide accurate configuration guidance.

When you select a cluster and ask Plural's AI to generate a Federated Credentials manifest, it doesn't guess or hallucinate. Instead, it queries that specific cluster's Kubernetes API discovery endpoint to retrieve the live custom resource definition, then generates the YAML based on the actual API specification running in your environment.

This approach eliminates the common frustration of bouncing between outdated documentation sites and source code repositories, trying to understand what fields are required, what the expected data types are, or how the resource actually behaves in practice. The AI provides immediate, accurate guidance based on the truth of what's actually deployed in your infrastructure.

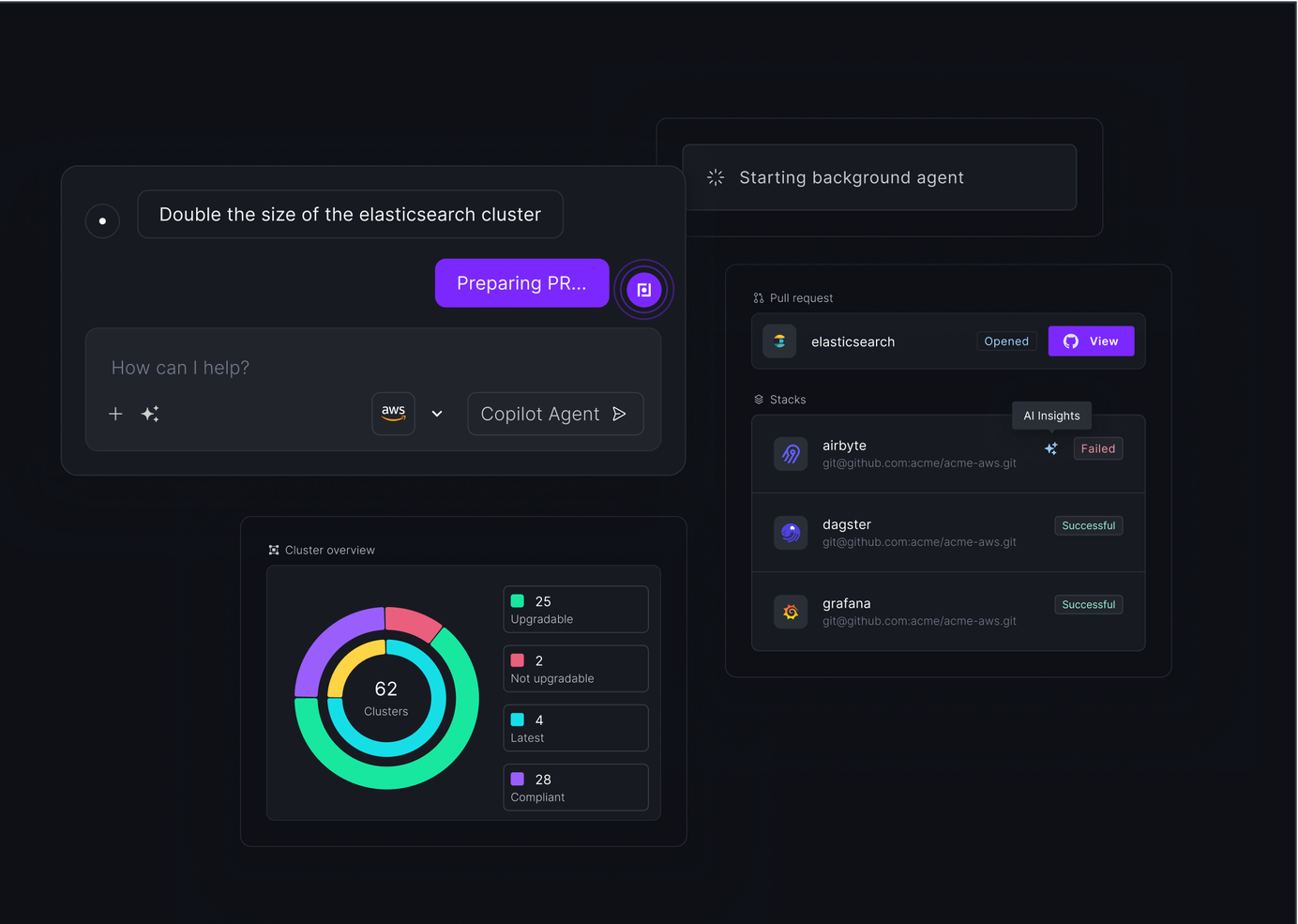

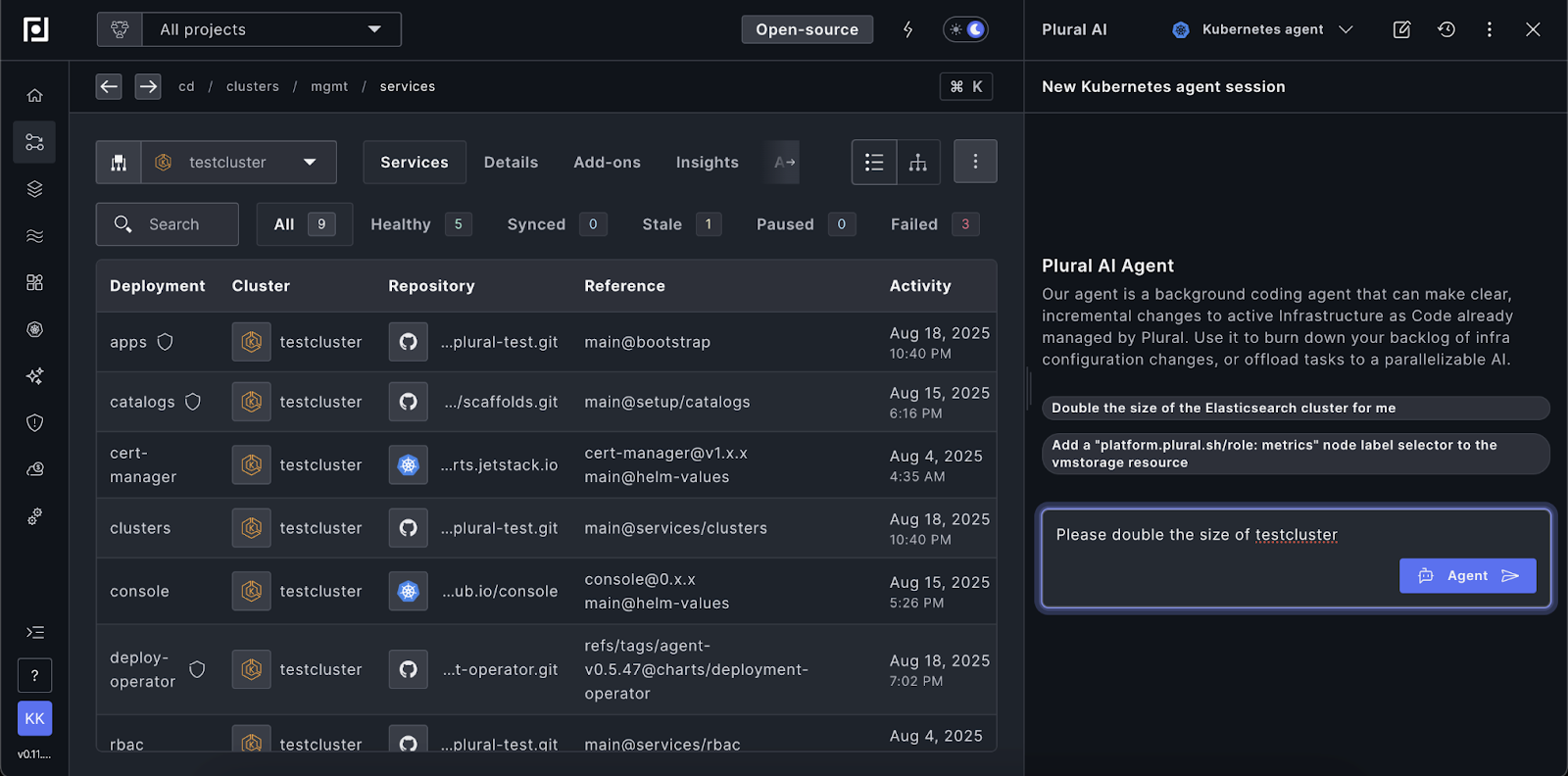

Infrastructure Coding Agents: From Chat to Pull Request

Beyond answering questions, Plural's AI can modify your infrastructure code. The platform includes dedicated agents for both Terraform and Kubernetes that can make real changes and create pull requests autonomously.

When you ask the Terraform agent to "double the size of the Elasticsearch cluster," it doesn't just tell you what to change. It uses semantic search to find the relevant stacks, plans out the necessary modifications, creates the actual code changes, and generates a pull request. What makes this particularly powerful is the self-reinforcing workflow: the agent reviews the Terraform plan output to verify that its changes will produce the intended result, and corrects them if needed.

If the plan reveals an error in the generated code, the agent learns from that feedback and issues additional commits to fix the problem. This creates a reliability layer that goes far beyond what you'd get from a generic code generation tool.
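The plan-review step can be pictured with a small sketch. This is an illustration under stated assumptions, not the agent's actual logic: it reads the JSON plan representation that `terraform show -json <planfile>` produces (the `resource_changes` list and each entry's `change.actions` and `change.after`), while the resource address `example_search_cluster.main` and the `node_count` attribute are hypothetical names chosen for the example.

```python
def review_plan(plan: dict, address: str, attribute: str, expected) -> list[str]:
    """Return a list of problems found in a Terraform JSON plan.

    An empty list means the plan changes `attribute` on `address` to
    `expected` and destroys nothing along the way.
    """
    problems = []
    target_seen = False
    for rc in plan.get("resource_changes", []):
        change = rc["change"]
        # A "delete" action covers both plain destroys and replacements.
        if "delete" in change["actions"]:
            problems.append(f"{rc['address']} would be destroyed or replaced")
        if rc["address"] == address:
            target_seen = True
            after = change.get("after") or {}
            if after.get(attribute) != expected:
                problems.append(
                    f"{address}.{attribute} is {after.get(attribute)!r}, "
                    f"expected {expected!r}"
                )
    if not target_seen:
        problems.append(f"{address} does not appear in the plan")
    return problems


# Hypothetical plan excerpt: the cluster grows from 3 nodes to 6.
SAMPLE_PLAN = {
    "resource_changes": [
        {
            "address": "example_search_cluster.main",
            "change": {
                "actions": ["update"],
                "before": {"node_count": 3},
                "after": {"node_count": 6},
            },
        }
    ]
}

print(review_plan(SAMPLE_PLAN, "example_search_cluster.main", "node_count", 6))
```

A non-empty result is the kind of feedback that could drive a follow-up commit: the agent adjusts the code, the plan is regenerated, and the check runs again until the list comes back empty.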

The Kubernetes agent follows a similar pattern, locating the relevant services and making the appropriate changes to manifests or Helm charts. While it doesn't have the same plan-based reinforcement loop as Terraform, it uses the same semantic understanding of your infrastructure to ensure changes are made in the right context.

These agents can be triggered via API, opening up possibilities for integration with project management tools like Jira or Linear. Imagine tagging a ticket with "plural-ai" and having infrastructure changes automatically generated and submitted for review. For bandwidth-constrained DevOps teams, this kind of automation is a big deal.

The Vision: Cursor for DevOps Infrastructure

What we've built is the closest thing to Cursor for DevOps workflows. The combination of semantic understanding, live system access, and autonomous code generation creates an experience that goes far beyond traditional infrastructure-as-code tools.

And this is just the beginning. We're already working on additional capabilities that will extend the AI's reach to direct cloud account querying through SQL interfaces, enabling questions like "list all VPCs in my AWS account and their CIDR ranges" or "show me all EKS clusters and their associated security groups."

The traditional approach to infrastructure management doesn't scale with the complexity of modern cloud-native systems. Plural's AI capabilities eliminate these friction points while integrating seamlessly with your existing tools and permission structures.

Ready to experience infrastructure AI that actually understands your stack? Get started with Plural and see the difference context makes.
