Continuous Deployment: Streamlining Your DevOps Workflow

Understand continuous deployment and how it automates software releases, ensuring faster delivery and improved collaboration in your development process.

Imagine this scenario: It is Monday morning and your team uncovers a critical security vulnerability in your flagship product. Without hesitation, you cancel the rest of the day's meetings, and a sense of urgency fills the air as your team races to rectify the bug before it jeopardizes your business. Swift mobilization ensues, but unfortunately, your team's conventional deployment process proves to be a hindrance due to its sluggishness and complexity.

Days turn into weeks, and by the time your team has a patch ready, news of the vulnerability has spread, causing widespread concern among your user base. Trust in the product erodes, and customers grow frustrated with the delay.

Your company's reputation suffers, and it faces an uphill battle to regain lost ground. Morale among the team starts to wane as they realize the opportunities they missed and the toll the incident has taken on a once-thriving company.

You shake your head at your computer and realize this isn’t the first time this has happened. Your engineering team's approach to deploying software isn’t cutting it anymore; it’s far too manual and bugs are constantly being pushed to production.

This nightmare scenario can be avoided through the use of Continuous Deployment.

So what does an ideal workflow look like for your engineering organization?

Developers push their code changes to a version control platform such as GitHub, GitLab, or Bitbucket. From there, an integration server such as Jenkins or TeamCity runs the automated tests. If all tests pass, the changes are pushed to production with no manual intervention from IT staff or other personnel, so changes to the codebase are released quickly without waiting on anyone's availability.

Once in production, the application can be monitored closely using tools such as New Relic or AppDynamics, allowing the organization to keep track of how the application is performing to spot any issues quickly and resolve them before they become major problems.

By reducing manual actions and automating processes like testing and deployment, organizations can benefit from faster release cycles while maintaining stability within their software applications.

Modern engineering teams are adopting continuous deployment to ship code faster and more reliably. This post explains continuous deployment, contrasts it with continuous delivery, and offers five best practices for implementing it smoothly. We'll also cover practical tips for managing your codebase and building effective deployment pipelines.

What is Continuous Deployment?

Continuous deployment is a modern and efficient software development practice that enables the automatic release of code changes into the production environment. It ensures that features are swiftly available to users as soon as they are ready. This approach not only reduces the time required for feature availability but also empowers developers to prioritize delivering value more rapidly.

In our scenario earlier, the lack of Continuous Deployment hindered the team’s ability to respond to user feedback effectively. Valuable insights and feature requests were piling up, leading to a growing disconnect between the team and their users. Meanwhile, competitors who had embraced Continuous Deployment were swiftly releasing updates, earning trust and loyalty from their customers.

Key Takeaways

- Continuous Deployment pipelines enable faster time to market and continuous improvement: Automating deployments allows you to release features quickly, gather user feedback, and iterate rapidly. This responsiveness is crucial for staying competitive.

- Managing Kubernetes deployments at scale requires a robust platform: Handling YAML manifests, upgrades, and multi-cluster deployments can be complex. Plural CD simplifies these tasks, allowing your team to focus on building and delivering value.

- Successful Continuous Deployment relies on automation and best practices: Implementing comprehensive automated testing, streamlined codebase management, and well-defined rollback procedures are essential for reliable and efficient CD. Plural CD provides the tools and features to support these best practices.

Defining Continuous Deployment

Continuous Deployment (CD) automates the release of software updates and features to users. Think of it as a pipeline that carries code changes from a developer’s keyboard to your users’ devices automatically. This automation eliminates the manual steps and delays that traditionally bogged down software releases, allowing teams to ship updates frequently and reliably. CD is about getting your work into the hands of your users as quickly and efficiently as possible. For a deeper dive into the principles of CD, check out Wikipedia's definition of continuous deployment.

How Continuous Deployment Works

Continuous deployment is the natural extension of continuous integration (CI). It takes the tested and validated code from CI and automatically deploys it to your users. This automation eliminates delays between coding and release, enabling faster feedback and quicker iteration. Automated tests are crucial in this process, ensuring that only thoroughly vetted code makes it to production. This rapid release cycle allows you to respond to user needs and market changes with agility. IBM's resource on continuous deployment offers further insights into this process.

Step-by-Step Breakdown of the CD Process

The CD process begins with developers merging code changes into a central Git repository. Each merge triggers automated builds and tests, catching issues early. If these tests pass, the code is automatically deployed to a staging environment for further testing. Finally, if all goes well in staging, the code is automatically released to production. This iterative process preserves both quality and speed.
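The flow above can be sketched as a runner that executes stages in order and halts at the first failure. This is a minimal Python sketch, not any particular CI server's API; the stage names and the lambdas standing in for real build, test, and deploy jobs are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# A stage is a name plus a callable that returns True on success.
Stage = tuple[str, Callable[[], bool]]


@dataclass
class PipelineResult:
    completed: list[str] = field(default_factory=list)
    failed_at: str | None = None


def run_pipeline(stages: list[Stage]) -> PipelineResult:
    """Run stages in order and stop at the first one that fails."""
    result = PipelineResult()
    for name, step in stages:
        if not step():
            result.failed_at = name
            return result  # later stages, including production, never run
        result.completed.append(name)
    return result


# Hypothetical stages; each lambda stands in for a real build, test, or deploy job.
stages: list[Stage] = [
    ("build", lambda: True),
    ("unit-and-integration-tests", lambda: True),
    ("deploy-staging", lambda: True),
    ("staging-tests", lambda: False),  # simulate a failure found in staging
    ("deploy-production", lambda: True),
]

outcome = run_pipeline(stages)
print(outcome.completed)  # ['build', 'unit-and-integration-tests', 'deploy-staging']
print(outcome.failed_at)  # staging-tests
```

Because production is simply the last stage, it only runs when every earlier gate has passed.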

Artifact Management in Continuous Deployment

Managing your built software (artifacts) effectively is crucial for CD. This involves versioning (using semantic versioning is recommended), establishing clear retention policies, and securing access with methods like Personal Access Tokens. Proper artifact management ensures you can track, manage, and deploy the right versions of your software reliably. Consider tools like JFrog Artifactory or Sonatype Nexus to help manage your artifacts effectively.
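To make versioning and retention concrete, here is a minimal sketch that bumps MAJOR.MINOR.PATCH versions and applies a keep-the-newest-N retention policy. It assumes plain numeric versions (full semantic versioning also allows pre-release and build metadata, which this ignores), and the `keep` value is an arbitrary example; artifact repositories such as Artifactory or Nexus provide their own cleanup features.

```python
import re

SEMVER = re.compile(r"^(\d+)\.(\d+)\.(\d+)$")


def parse(version: str) -> tuple[int, int, int]:
    """Split 'MAJOR.MINOR.PATCH' into integers so versions sort numerically."""
    match = SEMVER.match(version)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {version!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def bump(version: str, part: str) -> str:
    """Return the next version; bumping a part resets the parts after it."""
    major, minor, patch = parse(version)
    if part == "major":
        return f"{major + 1}.0.0"
    if part == "minor":
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown version part: {part!r}")


def apply_retention(versions: list[str], keep: int) -> tuple[list[str], list[str]]:
    """Split artifacts into (kept, deletable), keeping the `keep` newest versions."""
    ordered = sorted(versions, key=parse, reverse=True)
    return ordered[:keep], ordered[keep:]


print(bump("1.4.2", "minor"))  # 1.5.0
kept, deletable = apply_retention(["1.9.0", "1.10.0", "1.2.3", "2.0.0"], keep=2)
print(kept)       # ['2.0.0', '1.10.0']
print(deletable)  # ['1.9.0', '1.2.3']
```

Sorting on the parsed integers matters here: a plain string sort would treat 1.9.0 as newer than 1.10.0.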

Automated Testing Strategies for Continuous Deployment

Automated testing is the backbone of continuous deployment. A robust suite of tests, including unit, integration, and end-to-end tests, is essential to catch bugs before they reach production. These tests should be integrated into your CD pipeline, automatically running with every code change. Explore testing frameworks like pytest, Mocha, or Jest to build a comprehensive testing strategy.
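As an illustration of the unit-test layer, here is a small pytest-style test module. The `apply_discount` function is hypothetical. pytest collects functions whose names start with `test_`; because these tests use plain `assert` statements, they also run without pytest installed. In a real suite you would more likely use `pytest.raises` for the error case.

```python
# A hypothetical function under test.
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to whole cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


# pytest collects functions whose names start with test_.
def test_applies_discount():
    assert apply_discount(1000, 25) == 750


def test_zero_discount_is_identity():
    assert apply_discount(999, 0) == 999


def test_rejects_out_of_range_percent():
    try:
        apply_discount(1000, 150)
    except ValueError:
        return  # the expected outcome
    raise AssertionError("expected ValueError for percent > 100")
```

Running `pytest` in CI on every change is what turns tests like these into a deployment gate.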

Microservice Deployment Example

Microservices (small, independent components of a larger application) are well-suited for continuous deployment. Each microservice can be deployed independently, allowing for faster updates and reduced risk. If a problem arises with one microservice, it can be rolled back without affecting the entire application. This architectural pattern enhances the agility and resilience of your software.
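The independence described above can be modeled with a per-service version history, where rolling back one service leaves every other service untouched. This in-memory registry is a hypothetical sketch for illustration; in practice the deployment tool or the cluster (for example, Kubernetes Deployment revision history) holds this state.

```python
from __future__ import annotations

from collections import defaultdict


class ServiceRegistry:
    """Track each microservice's deployed versions independently (hypothetical)."""

    def __init__(self) -> None:
        self._history: dict[str, list[str]] = defaultdict(list)

    def deploy(self, service: str, version: str) -> None:
        self._history[service].append(version)

    def current(self, service: str) -> str | None:
        history = self._history.get(service)
        return history[-1] if history else None

    def rollback(self, service: str) -> str:
        """Revert one service to its previous version; other services are not touched."""
        history = self._history.get(service, [])
        if len(history) < 2:
            raise RuntimeError(f"{service} has no previous version to roll back to")
        history.pop()
        return history[-1]


registry = ServiceRegistry()
registry.deploy("checkout", "1.4.0")
registry.deploy("checkout", "1.5.0")  # suppose this release misbehaves
registry.deploy("search", "2.1.0")

print(registry.rollback("checkout"))  # 1.4.0
print(registry.current("search"))     # 2.1.0
```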

Planning Your Continuous Deployment Workflow

Careful planning is essential for successful continuous deployment. Before implementing CD, map out your entire workflow. Consider factors like artifact management, your staging and production environments, deployment targets, and rollback procedures. A well-defined plan will ensure a smooth and efficient CD process. Plural’s agent-based architecture can simplify management of deployments across multiple clusters.

Benefits of Continuous Deployment

Continuous deployment offers numerous advantages, including faster time to market, reduced risk, and improved collaboration. By automating the release process, teams can focus on building and delivering value to users more quickly. This translates to a more responsive and customer-centric development process.

Faster Feedback and Reduced Cycle Time

CD shortens the feedback loop, allowing you to get user feedback on new features quickly. This rapid iteration cycle enables continuous improvement and faster product evolution. This responsiveness is key to staying competitive in today’s fast-paced market.

Increased Business Focus and Responsiveness

Continuous deployment frees up developers from manual tasks, allowing them to focus on building valuable features. This increased focus translates to greater business responsiveness and faster innovation. By automating repetitive tasks, you empower your team to concentrate on what matters most: delivering value to your users.

Improved Quality and Reduced Risk

By releasing smaller changes more frequently, CD reduces the risk associated with large, infrequent releases. Automated testing catches bugs early, further improving the quality of your software. This approach minimizes the impact of potential issues and allows for quicker remediation.

Enhanced Collaboration and Customer Experience

CD fosters better collaboration between development and operations teams. Frequent updates and faster feedback cycles lead to a continuously improving product, enhancing the overall customer experience. This collaborative approach ensures everyone is aligned on delivering the best possible product to your users.

Continuous Deployment vs. Continuous Delivery vs. Continuous Integration

Understanding the differences between CI, CD (Continuous Delivery), and CD (Continuous Deployment) is crucial. While related, they represent distinct stages in the software delivery process. Knowing these distinctions will help you choose the right approach for your team and organization.

Key Differences and Relationships

Continuous Integration (CI) focuses on automating the build and test process. Continuous Delivery extends CI by automating the release process up to a pre-production environment. Continuous Deployment takes it a step further, automating the release to production. These practices build upon each other, creating a streamlined and efficient software delivery pipeline.

Continuous Delivery requires a manual approval before releasing to production, while continuous deployment automatically releases to production after successful testing. This key difference highlights the level of automation and speed associated with each approach.
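The difference between the two practices comes down to a single release gate. This simplified sketch assumes the `tests_passed` and `approved` flags stand in for whatever your pipeline and approval process actually report.

```python
from enum import Enum


class Mode(Enum):
    CONTINUOUS_DELIVERY = "delivery"
    CONTINUOUS_DEPLOYMENT = "deployment"


def should_release(tests_passed: bool, mode: Mode, approved: bool = False) -> bool:
    """Decide whether a build that reached the end of the pipeline goes to production."""
    if not tests_passed:
        return False  # neither practice ships a build that failed its tests
    if mode is Mode.CONTINUOUS_DEPLOYMENT:
        return True  # no human gate: passing the tests is enough
    return approved  # continuous delivery waits for a manual approval


print(should_release(True, Mode.CONTINUOUS_DELIVERY))                 # False
print(should_release(True, Mode.CONTINUOUS_DELIVERY, approved=True))  # True
print(should_release(True, Mode.CONTINUOUS_DEPLOYMENT))               # True
```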

Benefits of Each Approach

CI improves code quality and reduces integration problems. Continuous Delivery enables faster releases and reduces risk. Continuous Deployment maximizes speed and agility, getting features to users as quickly as possible. Choosing the right approach depends on your team's maturity and business needs.

Related Deployment Strategies: Canary Releases and Blue-Green Deployments

Canary releases involve gradually rolling out updates to a small subset of users before a full release. Blue-green deployments involve switching traffic between two identical environments, minimizing downtime during deployments. These strategies can be used in conjunction with continuous deployment to further enhance the reliability and stability of your releases. Check out Plural's guide on canary deployments for a practical example.
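Both strategies boil down to a routing decision. Under simplifying assumptions, the sketch below hashes each user ID into one of 100 stable buckets so a canary reaches roughly the requested share of users, and it models blue-green as a router that flips between two environments. Real systems make these decisions in a load balancer, service mesh, or ingress controller; the function and class names here are hypothetical.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Place a user in the canary group for roughly `percent`% of all users.

    Hashing keeps the choice stable: the same user always lands in the same bucket,
    and raising `percent` only adds users; it never removes existing ones.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < percent


class BlueGreenRouter:
    """Send all traffic to one of two identical environments and switch in one step."""

    def __init__(self) -> None:
        self.live = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def switch(self) -> str:
        """Point traffic at the idle environment once the new release there is verified."""
        self.live = self.idle
        return self.live


users = [f"user-{n}" for n in range(10_000)]
share = sum(in_canary(u, 10) for u in users) / len(users)
print(f"{share:.1%} of users in a 10% canary")  # close to 10%; exact value depends on the hash

router = BlueGreenRouter()
print(router.switch())  # green
print(router.idle)      # blue, kept around for an instant rollback
```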

Tools and Technologies for Continuous Deployment

A variety of tools support continuous deployment, from CI/CD platforms to version control systems. Choosing the right tools for your team and workflow is essential for successful CD implementation.

Jenkins and TeamCity

Jenkins is a popular open-source automation server that can orchestrate your entire CD pipeline. TeamCity is another powerful CI/CD platform with robust features for building, testing, and deploying software. Both tools offer extensive plugin ecosystems to integrate with various other tools and services.

Cloud-Based CI/CD Solutions (AWS, Azure, GitLab)

Cloud providers and hosted DevOps platforms offer integrated CI/CD solutions. AWS CodePipeline and CodeBuild, Azure DevOps, and GitLab CI/CD provide managed services for building, testing, and deploying applications in the cloud. These services offer scalability, reliability, and tight integration with other cloud services.

Version Control and Code Review

Git is the most widely used version control system, essential for tracking code changes and collaborating on software projects. Code review tools, often integrated with Git platforms like GitHub, GitLab, and Bitbucket, facilitate peer review and ensure code quality. These practices are fundamental to a healthy and efficient development workflow.

Configuration Management and Release Automation

Configuration management tools like Ansible, Chef, and Puppet automate infrastructure provisioning and configuration, ensuring consistency across environments. Release automation tools like Spinnaker orchestrate complex deployment workflows. These tools help manage the complexity of modern infrastructure and deployments.

Infrastructure Monitoring and Rollback Capabilities

Monitoring tools like Datadog, Prometheus, and Grafana provide visibility into application performance and infrastructure health. Rollback capabilities are essential for quickly reverting to a previous version if a deployment causes problems. Effective monitoring and rollback procedures are crucial for maintaining the stability and reliability of your applications.
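Monitoring and rollback meet in a simple decision rule: compare the new release's error rate with a baseline and roll back if it is clearly worse. The thresholds below (`max_increase`, `min_requests`) are hypothetical examples; in practice the samples would come from a monitoring system such as Prometheus or Datadog rather than being hard-coded.

```python
from dataclasses import dataclass


@dataclass
class HealthSample:
    requests: int
    errors: int


def error_rate(samples: list[HealthSample]) -> float:
    total = sum(s.requests for s in samples)
    return sum(s.errors for s in samples) / total if total else 0.0


def decide(
    samples: list[HealthSample],
    baseline_rate: float,
    max_increase: float = 0.01,
    min_requests: int = 500,
) -> str:
    """Return 'wait', 'rollback', or 'keep' for a freshly deployed release."""
    if sum(s.requests for s in samples) < min_requests:
        return "wait"  # too little traffic to judge the release yet
    if error_rate(samples) > baseline_rate + max_increase:
        return "rollback"
    return "keep"


baseline = 0.01  # the previous release failed about 1% of requests
print(decide([HealthSample(100, 50)], baseline))                        # wait
print(decide([HealthSample(300, 3), HealthSample(300, 21)], baseline))  # rollback
print(decide([HealthSample(600, 6)], baseline))                         # keep
```

Requiring a minimum amount of traffic before deciding avoids rolling back on the noise of a handful of early requests.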

Metrics and Reporting

Tracking metrics like deployment frequency, lead time, and change failure rate provides insights into your CD process and helps identify areas for improvement. Tools like Plural provide dashboards and reporting features to visualize these metrics, giving you a clear picture of your CD performance. By monitoring these metrics, you can continuously optimize your CD pipeline for maximum efficiency.
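These three metrics are straightforward to compute once each deployment is recorded. Here is a minimal sketch over a hypothetical list of deployment records; what counts as a "failure" (a rollback, a hotfix, an incident) is a definition your team has to choose.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when it reached production
    caused_failure: bool    # did it need a rollback or hotfix?


def deployment_frequency(deploys: list[Deployment], days: int) -> float:
    """Average production deployments per day over the period."""
    return len(deploys) / days


def median_lead_time(deploys: list[Deployment]) -> timedelta:
    """Median time from commit to production (raises on an empty list)."""
    return median(d.deployed_at - d.committed_at for d in deploys)


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Share of deployments that caused a failure in production."""
    if not deploys:
        return 0.0
    return sum(d.caused_failure for d in deploys) / len(deploys)


start = datetime(2024, 1, 1, 9, 0)  # hypothetical sample data
history = [
    Deployment(start, start + timedelta(hours=2), False),
    Deployment(start, start + timedelta(hours=5), True),
    Deployment(start, start + timedelta(hours=26), False),
]

print(deployment_frequency(history, days=7))  # roughly 0.43 deployments per day
print(median_lead_time(history))              # 5:00:00
print(change_failure_rate(history))           # one failure in three deployments
```

The median is used for lead time so a single slow change does not dominate the number.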

Prerequisites and Requirements for Continuous Deployment

Successful continuous deployment requires a strong testing culture, robust automation, and close collaboration between development and operations teams. A well-defined workflow and clear communication are also essential. These prerequisites ensure that your team is prepared for the demands of continuous deployment.

Costs of Implementing Continuous Deployment

Implementing continuous deployment requires investment in tools and infrastructure, as well as time and effort to automate processes and build a strong testing culture. However, the long-term benefits of faster releases, reduced risk, and increased agility often outweigh the initial costs. Plural can help streamline this process and reduce the overhead associated with managing Kubernetes deployments, making CD more accessible and cost-effective.

Continuous Deployment on Kubernetes

Continuous delivery and continuous deployment both aim to get your changes into production safely and seamlessly. Kubernetes can deploy very quickly, particularly with the Recreate strategy, which replaces all Pods at once rather than incrementally, but that speed can come at the cost of downtime.

That is a problem for the many teams whose workloads must stay available. When continuous delivery is properly implemented, developers always have a deployment-ready build artifact that has passed through a standardized testing process.
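To avoid the downtime of the Recreate strategy, a Deployment can declare a rolling update instead. The sketch below builds an `apps/v1` Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The name, image, and surge settings are hypothetical examples, and a rolling update only avoids downtime if new Pods report readiness accurately, typically through readiness probes.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, zero_downtime: bool = True) -> dict:
    """Build an apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    if zero_downtime:
        # Start at most one extra Pod at a time and never drop below `replicas` available Pods.
        strategy = {"type": "RollingUpdate", "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0}}
    else:
        # Recreate stops every old Pod before starting new ones: fast, but with downtime.
        strategy = {"type": "Recreate"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": strategy,
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical service name and image; pipe the output to `kubectl apply -f -`.
print(json.dumps(deployment_manifest("web", "registry.example.com/web:1.5.0", replicas=3), indent=2))
```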

The trust-building procedures that applications went through before Kubernetes arrived, such as standardized testing, remain just as relevant.

In particular, we have seen organizations face the following challenges when deploying applications on Kubernetes manually.

1. Streamlining Kubernetes Deployments

Even in a world with services like EKS, GKE, and AKS providing “fully” managed Kubernetes clusters, maintaining a self-service Kubernetes provisioning system is still challenging.

Toolchains like Terraform and Pulumi can create a small fleet of clusters, but they don’t provide a repeatable API for provisioning Kubernetes at scale, and minor changes that slip through review can cascade into disruptive updates.

Additionally, the upgrade flow around Kubernetes is fraught with dragons, in particular:

- Upgrading to a new Kubernetes version typically means restarting or replacing every worker node, a delicate process that can break a cluster.

- Deprecated and removed APIs can cause significant downtime; upgrades have even triggered sitewide outages, like the one Reddit experienced during a Kubernetes upgrade in 2023.
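A first line of defense is scanning manifests for API versions that the target Kubernetes version no longer serves. The lookup table below is deliberately small (a handful of well-known removals), and the manifests are assumed to be already parsed into dictionaries, for example by a YAML library. The official Kubernetes deprecated API migration guide has the complete list.

```python
# (apiVersion, kind) -> Kubernetes (major, minor) release that stopped serving it.
# Deliberately incomplete; see the Kubernetes deprecated API migration guide.
REMOVED_IN = {
    ("extensions/v1beta1", "Deployment"): (1, 16),
    ("apps/v1beta2", "Deployment"): (1, 16),
    ("extensions/v1beta1", "Ingress"): (1, 22),
    ("networking.k8s.io/v1beta1", "Ingress"): (1, 22),
    ("batch/v1beta1", "CronJob"): (1, 25),
    ("policy/v1beta1", "PodSecurityPolicy"): (1, 25),
}


def find_removed_apis(manifests: list[dict], target: tuple[int, int]) -> list[str]:
    """Report manifests whose apiVersion/kind is no longer served by `target`."""
    problems = []
    for manifest in manifests:
        api_version = manifest.get("apiVersion")
        kind = manifest.get("kind")
        removed = REMOVED_IN.get((api_version, kind))
        if removed is not None and target >= removed:  # tuples compare version-wise
            name = manifest.get("metadata", {}).get("name", "<unnamed>")
            problems.append(f"{kind}/{name} uses {api_version}, removed in {removed[0]}.{removed[1]}")
    return problems


manifests = [
    {"apiVersion": "batch/v1beta1", "kind": "CronJob", "metadata": {"name": "nightly-report"}},
    {"apiVersion": "apps/v1", "kind": "Deployment", "metadata": {"name": "web"}},
]
print(find_removed_apis(manifests, target=(1, 24)))  # []
print(find_removed_apis(manifests, target=(1, 25)))
# ['CronJob/nightly-report uses batch/v1beta1, removed in 1.25']
```

Run as a CI step before an upgrade, a check like this fails the pipeline instead of the cluster.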

Companies with larger deployments end up dedicating a team to manage rollbacks and keep track of old and new deployments. This process becomes more challenging and ultimately riskier when dealing with a large team and a complex application.

2. Managing Kubernetes Codebases

Kubernetes exposes a rich, extensive REST API for all its functionality, and the objects you send to it are usually declared in YAML. While YAML is relatively user-friendly, the manifests for larger applications can frequently balloon into thousands of lines.

There have been numerous attempts to moderate this bloat, using templating with tools like Helm or overlays with Kustomize. We believe all of these have significant drawbacks, which impair an organization's ability to adopt Kubernetes, in particular:

- No ability to reuse code naturally (there is no package manager for YAML)

- No ability to test your YAML codebases locally or in CI (preventing common engineering practices for quickly detecting regressions)

Common software engineering patterns, like crafting internal SDKs or “shifting left,” are impossible with YAML as your standard. The net effect is more bugs reaching clusters, slower developer ramp-up, and general grumbling across the engineering organization about clunky codebases.
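Generating manifests from a general-purpose language is one way to get the reuse and local testability described above; tools such as cdk8s take that approach. The sketch below does not use cdk8s. It uses plain Python dictionaries to show the idea: a hypothetical internal helper applies organization-wide defaults, and ordinary unit tests check them without a cluster. The default resource values are placeholders, not recommendations.

```python
from __future__ import annotations

import copy

# Hypothetical organization-wide defaults enforced by an internal helper.
DEFAULT_RESOURCES = {
    "requests": {"cpu": "100m", "memory": "128Mi"},
    "limits": {"memory": "256Mi"},
}


def container(name: str, image: str, port: int | None = None) -> dict:
    """Build a container spec with the shared defaults applied."""
    spec = {
        "name": name,
        "image": image,
        # Deep copy so one service's tweaks never leak into another's spec.
        "resources": copy.deepcopy(DEFAULT_RESOURCES),
        "securityContext": {"runAsNonRoot": True},
    }
    if port is not None:
        spec["ports"] = [{"containerPort": port}]
    return spec


# Unit tests that run locally or in CI, with no cluster required.
def test_every_container_gets_a_memory_limit():
    assert container("api", "example/api:1.0")["resources"]["limits"]["memory"] == "256Mi"


def test_defaults_are_not_shared_between_containers():
    first = container("a", "example/a:1.0")
    first["resources"]["limits"]["memory"] = "1Gi"
    assert container("b", "example/b:1.0")["resources"]["limits"]["memory"] == "256Mi"


def test_port_is_optional():
    assert "ports" not in container("worker", "example/worker:1.0")
    assert container("web", "example/web:1.0", port=8080)["ports"] == [{"containerPort": 8080}]
```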

3. Building Effective Deployment Pipelines on Kubernetes

Kubernetes has a rich ecosystem of CD tooling, such as Flux and Argo CD, but most of it is built primarily for simple, single-cluster deployments from a single Git repository. To use any of these tools, you still need lower-level Kubernetes expertise to provision and administer the clusters they deploy to.

In particular, we’ve noticed that Kubernetes novices are quite intimidated by the details of authenticating to the Kubernetes API, especially with managed Kubernetes, which typically goes through three layers: kubeconfig → IAM authenticator → bearer token auth + TLS at the control plane.
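The first of those layers is just data. The sketch below resolves a kubeconfig, already parsed into a dictionary, from `current-context` to its cluster and user entries, roughly the way kubectl does, though it ignores details such as merging multiple files and command-line overrides. The cluster name, server URL, and EKS-style `aws eks get-token` exec entry are illustrative values.

```python
def resolve_context(kubeconfig: dict) -> dict:
    """Follow current-context -> context -> cluster and user entries."""

    def by_name(section: str, name: str) -> dict:
        for entry in kubeconfig.get(section, []):
            if entry["name"] == name:
                return entry
        raise KeyError(f"no {section} entry named {name!r}")

    context = by_name("contexts", kubeconfig["current-context"])["context"]
    cluster = by_name("clusters", context["cluster"])["cluster"]
    user = by_name("users", context["user"])["user"]

    if "exec" in user:
        # An exec plugin (such as an IAM authenticator) is run to obtain a bearer token.
        exec_cfg = user["exec"]
        auth = "exec: " + " ".join([exec_cfg["command"], *exec_cfg.get("args", [])])
    elif "token" in user:
        auth = "static bearer token"
    else:
        auth = "other (for example, a client certificate)"

    return {
        "server": cluster["server"],
        "auth": auth,
        # Whether a CA bundle is embedded for verifying the API server's TLS certificate.
        "has_ca_data": "certificate-authority-data" in cluster,
    }


kubeconfig = {
    "current-context": "prod",
    "contexts": [{"name": "prod", "context": {"cluster": "prod-cluster", "user": "prod-user"}}],
    "clusters": [{"name": "prod-cluster", "cluster": {
        "server": "https://prod.example.com",
        "certificate-authority-data": "<base64-encoded CA bundle>",
    }}],
    "users": [{"name": "prod-user", "user": {"exec": {
        "apiVersion": "client.authentication.k8s.io/v1beta1",
        "command": "aws",
        "args": ["eks", "get-token", "--cluster-name", "prod"],
    }}}],
}
print(resolve_context(kubeconfig))
```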

To get a CD system working, you need enough expertise to deploy something like Argo CD and manage the Kubernetes auth layer yourself so the systems are integrated and hardened. While this is not an insuperable task, it is probably enough friction to make people reconsider and settle for an inferior tool like ECS for their containerized workloads.

And even if you get all that set up, you still need to create a staged deployment pipeline from dev → staging → prod so your code gets appropriate integration testing before it reaches users. That often requires hand-rolling a tedious, complex Git-based release process, one manual enough that you can’t offer it to other teams as self-service.
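The core rule of a staged pipeline (a version may only enter an environment after passing the one before it) is small enough to sketch directly. This in-memory ledger is hypothetical; a real system would persist this state, for example in Git or a deployment platform, and record passes from actual integration test results.

```python
from __future__ import annotations

ENVIRONMENTS = ("dev", "staging", "prod")


class PromotionLedger:
    """Enforce dev -> staging -> prod ordering for each version (hypothetical)."""

    def __init__(self) -> None:
        self.deployed: dict[str, str | None] = {env: None for env in ENVIRONMENTS}
        self.passed: dict[str, set[str]] = {env: set() for env in ENVIRONMENTS}

    def can_promote(self, version: str, target: str) -> bool:
        index = ENVIRONMENTS.index(target)
        if index == 0:
            return True  # any build may enter the first environment
        return version in self.passed[ENVIRONMENTS[index - 1]]

    def promote(self, version: str, target: str) -> None:
        if not self.can_promote(version, target):
            raise PermissionError(f"{version} has not passed the environment before {target}")
        self.deployed[target] = version

    def record_pass(self, env: str, version: str) -> None:
        """Mark a version as having passed its tests in `env`."""
        if self.deployed[env] != version:
            raise ValueError(f"{version} is not the version running in {env}")
        self.passed[env].add(version)


ledger = PromotionLedger()
ledger.promote("1.5.0", "dev")
ledger.record_pass("dev", "1.5.0")
ledger.promote("1.5.0", "staging")
print(ledger.can_promote("1.5.0", "prod"))  # False: staging tests have not passed yet
ledger.record_pass("staging", "1.5.0")
print(ledger.can_promote("1.5.0", "prod"))  # True
```

Encoding the rule as code rather than convention is what lets other teams self-serve promotions safely.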

Continuous Delivery vs. Continuous Deployment: What's the Difference?

As technology continues to evolve, the demand for faster software delivery and more frequent releases has significantly increased. In response to this demand, software development teams have adopted various methodologies and approaches to speed up the software development process. Two popular approaches are Continuous Delivery and Continuous Deployment.

Continuous Delivery and Continuous Deployment are related software delivery methodologies emphasizing agility and automation in software development. Continuous Delivery is a process of automating the software delivery process to enable frequent and efficient releases. Continuous Deployment, on the other hand, is an extension of Continuous Delivery where every successful build of the software is automatically deployed to production.

The main difference between Continuous Delivery and Continuous Deployment is the level of automation and control over the release process. With Continuous Delivery, the software is built, tested, and prepared for deployment, but releasing it to production requires a manual approval or trigger. This gives the development team more control over when and how the software is released. With Continuous Deployment, the software is automatically deployed to production after every successful build, enabling much faster releases and reducing human error.

Another significant difference between these two approaches is the level of risk involved. With Continuous Delivery, a deployment can still fail in production even after thorough testing in the development and test environments, but a person decides when each release goes out. With Continuous Deployment, every passing build reaches production automatically, so a defect that slips past the automated tests reaches users sooner, and the impact can be much greater.

One way to mitigate the risks associated with Continuous Deployment is to implement canary releases, where new features are gradually released to a subset of users so issues can be identified and fixed before the release is fully rolled out. Canary releases work with Continuous Delivery too, but they matter most with Continuous Deployment, where no manual approval stands between a passing build and production.

Continuous Deployment demands a greater degree of automation compared to Continuous Delivery. To achieve Continuous Deployment, an automated pipeline is necessary to seamlessly guide the code through various stages of development, testing, and deployment. This requires an elevated standard of code maturity, rigorous quality testing, and adherence to DevOps practices. On the other hand, Continuous Delivery allows for some manual processes, rendering it more accessible for organizations that are not fully prepared for complete automation.

Choosing between Continuous Delivery and Continuous Deployment depends on various factors, such as the development team's maturity level, risk tolerance, and the need for speed and agility. Continuous Delivery is a good starting point for organizations that are looking to automate their software delivery process and achieve more frequent releases.

On the other hand, Continuous Deployment is suitable for organizations that require maximum speed and agility in their software development process but are willing to take on more risk. Regardless of the approach, it is essential to have a solid Continuous Integration and Continuous Testing process to ensure the code quality and stability of the software. By implementing the right approach for your team, you can enjoy faster releases, improved quality, and reduced risk in your software development process.

Continuous Deployment Best Practices

Here are five crucial Continuous Deployment (CD) best practices:

1. Implement Automated Testing

- Implement a comprehensive suite of automated tests, including unit, integration, and end-to-end tests.

- Use unit and integration testing frameworks, along with automated testing services, to ensure code changes are thoroughly tested before deployment.

2. Integrate CI for Seamless Deployments

- Practice continuous integration by frequently merging code changes into a shared repository.

- Use CI tools to automatically build and test code with every integration, ensuring it remains in a deployable state.

3. Deploy Incrementally

- Deploy small, incremental changes rather than large, monolithic updates. This reduces the risk of introducing major issues and allows for quicker rollbacks if needed.

4. Plan for Rollbacks and Canary Releases

- Have well-defined rollback procedures in case a deployment introduces critical issues.

- Implement canary releases to gradually roll out changes to a small subset of users, allowing for early detection of any problems before a full release.

5. Monitor and Observe Your Deployments

- Implement robust monitoring and observability solutions to track the health and performance of your application in real-time.

- Set up alerts to notify the team of any anomalies, ensuring rapid response to potential issues.

By adhering to these best practices, development teams can establish a reliable and efficient Continuous Deployment process, enabling them to deliver high-quality software to users quickly and with confidence.

Five Benefits Of Continuous Deployment

1. Ship Faster with Continuous Deployment

Time is of the essence in software development. With Continuous Deployment, bugs can be detected and fixed quickly, and new features can be pushed out seamlessly, so your organization can deliver high-quality releases faster. With Kubernetes, you can also use built-in autoscaling to handle increased load during peak periods, which helps keep your applications available and business value flowing.

2. Improve Code Quality with Continuous Deployment

Continuous Deployment's automated testing ensures quality control that can catch problems before they reach production. Thorough testing at every stage of the deployment pipeline leads to a reduction in bugs and an improved user experience. By catching any issues early and addressing them immediately, your DevOps team can become more agile and responsive to customer needs.

3. Better Collaboration Through Continuous Deployment

Continuous Deployment encourages better collaboration between developers, testers, and operators. With a shared automated pipeline, teams learn to work together and communicate better. Collaboration ensures that the pipeline runs efficiently, from code testing to deployment. Kubernetes provides developers and operators with abstractions that make versioning and deployment easier to handle, streamlining collaboration.

4. Gain Visibility and Control

While deploying software through traditional means can be challenging, Continuous Deployment provides increased visibility and control. With Kubernetes, you can monitor and track both your application state and your deployment pipeline. The Kubernetes Dashboard, an optional web UI, lets you troubleshoot and take corrective action proactively. You can also use Kubernetes labels to organize your software, making it easier to keep track of the version of each component.

5. Reduce Costs with Continuous Deployment

Implementing Continuous Deployment removes the roadblocks that come with manual deployments. This reduces the operational and maintenance costs associated with deployment, saving money and freeing up time and resources for further innovation and development.

Continuous Deployment done right with Plural CD


Let's face it, managing Kubernetes deployments can be a real headache. Wrestling with YAML files, juggling kubeconfigs, and troubleshooting deployments across multiple clusters can quickly drain your team's time and energy. What if there was a simpler way? That's where Plural CD comes in.

Simplified Kubernetes Deployments with Plural CD

Plural CD simplifies Kubernetes deployments by abstracting away much of the underlying complexity. Our platform acts as a single pane of glass for managing your entire Kubernetes fleet, regardless of where your clusters reside—public cloud, on-prem, or even your local machine. With Plural CD, you can say goodbye to tedious manual processes and hello to streamlined, automated deployments. This means less time spent on firefighting and more time focused on building and delivering great software.

Remember those tricky Kubernetes upgrades and the dreaded API deprecations? Plural CD helps you navigate these challenges with automated upgrade processes and built-in safeguards to minimize downtime. We leverage a secure, agent-based pull architecture, meaning Plural CD doesn't require direct access to your clusters. This simplifies networking and enhances security, allowing you to manage workloads anywhere with ease. Learn more about our architecture.

Streamlined Codebase Management with Plural CD

Managing sprawling Kubernetes YAML manifests can quickly become unwieldy. Plural CD addresses this by providing a structured approach to codebase management. We support popular tools like Helm and Kustomize, allowing you to leverage existing workflows. We go further by offering a robust, GitOps-driven system that ensures your deployments are consistent and reliable. This allows your team to focus on delivering value, not YAML wrangling.

Plural CD promotes code reusability and testability, enabling you to apply software engineering best practices to your Kubernetes configurations. This means fewer bugs, faster development cycles, and a happier, more productive team. No more battling with thousands of lines of YAML—Plural CD helps you keep your codebase clean, organized, and under control. See how Plural CD can improve your continuous deployment workflow.

Building Robust Pipelines with Plural CD

Building and managing a robust Continuous Deployment pipeline on Kubernetes can be a daunting task. Plural CD simplifies this process by providing a unified platform for orchestrating your entire deployment workflow. From code integration and testing to deployment and monitoring, Plural CD streamlines every step. This gives you a clear, concise view of your entire deployment process, making it easier to identify and resolve issues quickly.

Our platform integrates seamlessly with popular CI/CD tools like Jenkins and TeamCity, allowing you to leverage your existing investments. We also provide built-in support for multi-cluster deployments, staged rollouts, and automated rollbacks, giving you the control and flexibility you need to deploy with confidence. With Plural CD, you can build robust, scalable pipelines that empower your team to deliver value faster and with less risk. Book a demo today to see how Plural CD can transform your Kubernetes deployments.

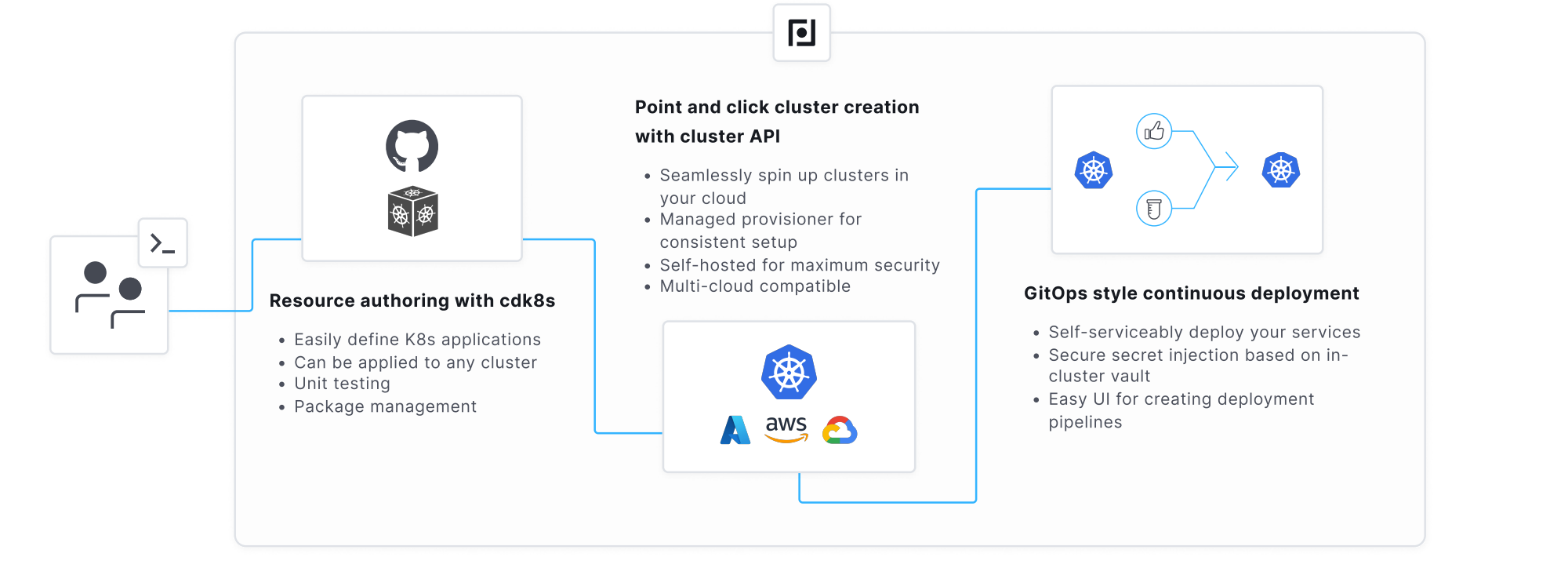

Plural is an end-to-end solution for managing Kubernetes clusters and application deployment. Plural offers users a managed Cluster API provisioner to consistently set up managed and custom Kubernetes control planes across top infrastructure providers.

Additionally, Plural provides a robust deployment pipeline system, empowering users to effortlessly deploy their services to these clusters. Plural acts as a Single Pane of Glass for managing application deployment across environments.

With Plural, you can detect deprecated Kubernetes APIs used in your code repositories and Helm releases, minimizing the impact deprecated APIs can have on your ecosystem.

Features:

- Rapidly create new Kubernetes environments across any cloud without ever having to write code

- Managed, zero-downtime upgrades with Cluster API reconciliation loops, so you don’t have to worry about sloppy, fragile Terraform rollouts

- Dynamically add and remove nodes to your cluster node topology as you like

- Use scaffolds to create functional GitOps deployments in a flash

- First-class support for cdk8s.io to provide a robust Kubernetes authoring experience with unit testability and package management

- Integrated secret management

- A single, scalable user interface where your org can deploy and monitor everything fast.

Frequently Asked Questions

How does Continuous Deployment differ from Continuous Delivery? Continuous Delivery prepares code changes for release, but requires a manual step to deploy to production. Continuous Deployment automates that final step, deploying changes automatically after successful testing. This key difference makes Continuous Deployment faster and more efficient, but also requires a higher level of confidence in your automated testing and deployment processes.

What are the key prerequisites for implementing Continuous Deployment successfully? A robust automated testing suite covering unit, integration, and end-to-end tests is essential. You'll also need a well-defined CI/CD pipeline, a strong DevOps culture emphasizing collaboration between development and operations, and clear procedures for rollbacks in case of unexpected issues. Thorough planning and preparation are crucial for a smooth transition to Continuous Deployment.

What tools and technologies can help facilitate Continuous Deployment? Popular CI/CD platforms like Jenkins, TeamCity, or cloud-based solutions like AWS CodePipeline, Azure DevOps, and GitLab CI/CD can orchestrate your pipeline. Version control systems like Git, along with code review tools, are essential for managing code changes. Configuration management tools (Ansible, Chef, Puppet) and release automation tools (Spinnaker) can further automate your infrastructure and deployments. Finally, monitoring and observability tools are crucial for tracking application health and performance post-deployment.

How can Plural CD help simplify Continuous Deployment on Kubernetes? Plural CD streamlines Kubernetes deployments by providing a unified platform for managing your entire fleet. It simplifies complex tasks like upgrades and API deprecation handling, offers a structured approach to managing Kubernetes YAML with support for Helm and Kustomize, and provides a robust GitOps-driven system for reliable deployments. Plural CD also integrates with popular CI/CD tools and supports multi-cluster deployments, staged rollouts, and automated rollbacks.

What are the cost considerations for implementing Continuous Deployment? Implementing Continuous Deployment involves investments in tools, infrastructure, and the time required to automate processes and build a strong testing culture. However, the long-term benefits of faster releases, reduced risk, and increased agility often outweigh these initial costs. Solutions like Plural CD can help streamline the process and reduce the overhead associated with managing Kubernetes deployments, making Continuous Deployment more accessible and cost-effective.
