A Comprehensive Introduction to Kubernetes

Learn Kubernetes orchestration essentials in this step-by-step tutorial, from deploying applications to managing clusters.

For teams striving to automate and streamline the lifecycle of complex applications, Kubernetes offers an unparalleled toolkit. It’s the standard for container orchestration, enabling robust and scalable systems. But becoming proficient at Kubernetes means wrapping your head around all its moving parts—starting with the basics of deploying Pods and working your way up to the more complex stuff like networking and storage setups.

This article is designed to equip you with the knowledge and practical skills you need to operate Kubernetes effectively. We'll explore its core architecture, guide you through deploying and managing your applications, and discuss best practices that ensure your deployments are both resilient and efficient.

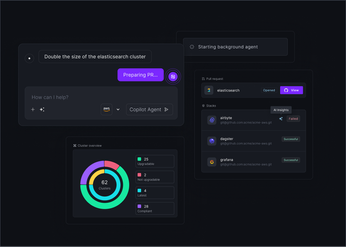

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key takeaways:

- Learn Core Kubernetes Components: Understand Pods, Services, and Deployments as the building blocks for automating your application's deployment, scaling, and operational management.

- Implement Dynamic Application Management: Utilize features like Horizontal Pod Autoscaling and rolling updates to keep your applications responsive, highly available, and simple to update.

- Adopt Comprehensive Observability and Centralized Control for Growth: As your Kubernetes usage expands, establish strong monitoring, logging, and security practices, and use tools like Plural for streamlined multi-cluster management.

What Is Kubernetes and Why Does It Matter?

If you're working with modern applications, especially in a cloud environment, you've almost certainly heard of Kubernetes. But what exactly is it, and why has it become so central to how we build and run software? Understanding Kubernetes is key for DevOps and platform engineering teams aiming to automate and streamline application lifecycle management. Let's break down its core aspects.

What Is Kubernetes?

Kubernetes (often referred to as K8s) is an open-source system that automates deploying, scaling, and managing containerized applications. Think of it as the operational brain for your software, ensuring smooth and efficient execution across multiple computers.

Kubernetes handles the full container lifecycle: it starts containers, tracks their state, and removes them when they are no longer needed. It follows a declarative model, meaning you describe the state you want and Kubernetes works to achieve and maintain that state. For example, if you ask for three instances of an application, Kubernetes keeps three replicas of that app running. If one instance fails, Kubernetes automatically restarts or replaces it.
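
The declarative model can be pictured as a control loop that compares the desired state with what is actually running. The following is a toy Python sketch of that idea, not Kubernetes code; the `reconcile` function and the `app-N` instance names are made up for illustration.

```python
import itertools

def reconcile(desired_replicas, running, name_counter):
    """Return the instance list after one reconciliation pass (toy model)."""
    running = list(running)
    while len(running) < desired_replicas:
        running.append(f"app-{next(name_counter)}")  # start a replacement
    while len(running) > desired_replicas:
        running.pop()                                # stop a surplus instance
    return running

counter = itertools.count(1)
state = reconcile(3, [], counter)     # initial rollout: three instances
state.remove("app-2")                 # simulate one instance failing
state = reconcile(3, state, counter)  # the loop notices and fills the gap
print(state)  # ['app-1', 'app-3', 'app-4']
```

Note that you never tell the loop "start one more instance"; you only state the target count, and the loop works out the difference.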

What Are the Benefits of Kubernetes?

Kubernetes excels at container orchestration, automating operational tasks for more resilient and efficient deployments. Key benefits include:

- Automatic Scaling: It dynamically adjusts application instances based on demand (e.g., CPU usage), ensuring performance during peaks and resource efficiency during lulls.

- Self-Healing: Kubernetes actively monitors containers and nodes, automatically restarting failed containers, replacing unhealthy ones, and rescheduling workloads from failed nodes.

- Rolling Updates and Rollbacks: You can roll out new application versions incrementally, with little or no downtime when health checks are configured. If problems occur, you can roll back to a previous stable revision.

- Portability: It offers a consistent platform across diverse environments, from on-premises setups to various public clouds, minimizing vendor lock-in. As an open-source project, it is hosted by the Cloud Native Computing Foundation and backed by a large community.

A Quick Look: Kubernetes Evolution and Adoption

Kubernetes originated from Google's internal system, Borg, and was open-sourced in 2014, later joining the Cloud Native Computing Foundation (CNCF). It has since become a vibrant, community-driven project.

Now the industry standard, Kubernetes is offered as managed services (EKS, AKS, GKE) by major cloud providers, simplifying individual cluster setup. However, managing a fleet of clusters across multi-cloud or hybrid setups creates significant complexity. This is where Plural provides critical value, offering a unified platform to streamline Kubernetes fleet management and ensure consistent operations across all your environments.

How Does Kubernetes Architecture Work?

Kubernetes operates with a well-defined architecture. Core components manage the cluster, while others run your applications on individual machines. Understanding these parts and their interactions is key to effectively using Kubernetes. Let's explore the main architectural elements.

The Control Plane Components

The control plane components, historically called master components, are the operational brain of your Kubernetes cluster. They make critical global decisions, like scheduling workloads, and react to cluster events. Key elements include:

- kube-apiserver, which exposes the Kubernetes API and acts as the cluster's front door.

- etcd, a consistent key-value store that holds all cluster data.

- kube-scheduler, which assigns new Pods to Nodes based on resource needs and policies.

- kube-controller-manager, which runs the controller processes that drive the cluster toward its desired state.

Grasping how these components interact is essential. Plural's unified Kubernetes dashboard offers clear visibility into their operations across your fleet.

The Node Components

Nodes are the worker machines in your Kubernetes cluster where your applications execute. Each node, managed by the control plane, runs crucial services to host Pods. These include:

- kubelet, an agent that communicates with the control plane and ensures containers run as specified.

- kube-proxy, which maintains network rules on each node, enabling communication between Pods and Services.

- A container runtime (such as containerd or CRI-O), which pulls images and runs your containers.

The control plane manages these nodes, orchestrating the work they perform for your distributed applications.

Key Objects: Pods, Services, and Deployments

Kubernetes uses objects to represent your cluster's desired state.

- Pods are the smallest deployable units. A Pod represents a single instance of a running process and can contain one or more containers that share network and storage resources.

- Services provide stable network access (a consistent IP address and DNS name) to a group of Pods, even as individual Pods change.

- Deployments manage your application's lifecycle, declaratively defining the desired state for Pods and handling automated updates and rollbacks.

Plural CD helps streamline the management of these deployments across your clusters, simplifying complex operational tasks.

Setting Up Your Kubernetes Environment

Establishing your Kubernetes environment is the foundational step to harnessing its orchestration capabilities. Whether you're taking your first steps with containerized applications or managing sophisticated, large-scale deployments, a correctly configured setup is paramount. This process involves deciding where your Kubernetes cluster will operate—be it locally on your personal machine for development and testing, or on a cloud provider for production workloads—and equipping yourself with the essential tools to interact with it.

For engineering teams responsible for an expanding array of Kubernetes clusters, potentially spanning multiple cloud platforms or on-premises data centers, a solution like Plural can provide a unified management layer, significantly streamlining operations and ensuring consistency. However, before tackling fleet management, let's focus on getting your initial environment operational.

We will cover common choices for local development, such as Minikube and Kind, then look into prominent cloud-based Kubernetes services. Finally, we'll discuss the indispensable command-line tools, kubectl and Helm, that you'll use daily. Building this solid base will enable you to deploy, manage, and scale applications with confidence. The objective here is to create a functional Kubernetes environment where you can learn, experiment, and ultimately, run your applications reliably.

Choose Your Local Setup: Minikube or Kind

When you're in the development and testing phases, running a Kubernetes cluster locally offers significant advantages in speed and convenience. Minikube is a widely adopted tool that facilitates running a single-node Kubernetes cluster directly on your laptop, typically within a virtual machine (VM) or as a container. It’s an excellent way to get hands-on experience with Kubernetes, test your application deployments, and validate behavior in a controlled setting without needing access to a cloud environment. Minikube is designed for ease of installation and use, making it a practical starting point for many developers.

Another strong contender for local Kubernetes development is Kind (Kubernetes IN Docker). Kind distinguishes itself by running Kubernetes cluster nodes as Docker containers. This approach can lead to faster startup times and a lighter resource footprint compared to traditional VM-based solutions. It's particularly well-suited for simulating multi-node cluster behaviors locally or for integration into CI/CD pipelines where ephemeral clusters are frequently created and destroyed. Both Minikube and Kind provide a complete Kubernetes environment, allowing you to explore most features effectively.

Explore Cloud Options: GKE, EKS, AKS

Once you're prepared to move beyond local experimentation or require a more resilient and scalable platform for your applications, managed Kubernetes services from major cloud providers become the logical next step. Leading offerings in this space include Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS). These services significantly reduce operational overhead by managing the Kubernetes control plane—this includes handling critical tasks such as upgrades, security patching, and ensuring high availability. This allows your team to concentrate on application development and deployment rather than the intricacies of Kubernetes infrastructure management.

Each cloud provider offers a comprehensive suite of integrated services, encompassing networking, storage, monitoring, and identity access management, tailored to their Kubernetes offering. The decision between GKE, EKS, or AKS often hinges on existing organizational cloud allegiances, specific feature set requirements, or comparative pricing analyses. Regardless of the choice, these managed services deliver production-grade Kubernetes environments. As your Kubernetes usage expands, potentially across multiple cloud providers or into hybrid scenarios with on-premises clusters, a platform like Plural can offer a consistent deployment and management experience across your entire distributed fleet.

Installing Essential Tools: kubectl and Helm

Irrespective of whether your Kubernetes cluster is hosted locally or in the cloud, specific tools are necessary for interaction and management. The primary command-line interface for Kubernetes is kubectl. This tool enables you to communicate directly with the Kubernetes API server, allowing you to deploy applications, inspect and manage cluster resources, retrieve logs, and troubleshoot operational issues. Consider kubectl your fundamental control mechanism for any Kubernetes cluster; proficiency with its commands is crucial for effective Kubernetes operations. You can install kubectl by following the official Kubernetes documentation tailored to your operating system.

Another vital tool in the Kubernetes ecosystem is Helm, often referred to as "the package manager for Kubernetes." Helm simplifies the definition, installation, and upgrading of even the most intricate Kubernetes applications through a packaging format known as "charts." A Helm chart bundles all the required Kubernetes resource definitions (such as Deployments, Services, and ConfigMaps) for an application, along with their configurations. This approach makes application deployment repeatable, versionable, and easier to manage, streamlining processes like updates and rollbacks. Many open-source projects provide official Helm charts, facilitating their straightforward deployment onto your cluster.

Deploying Your First Application on Kubernetes

With your Kubernetes environment configured and essential tools at your fingertips, you're ready for the exciting part: deploying your first application. This process involves a few key steps, from preparing your application to making it accessible. While these are the foundational steps for a single application, remember that platforms like Plural are designed to simplify and automate these workflows across entire fleets of applications and clusters, especially as your needs scale. Let's walk through the basics to get you started.

Creating an Application

Before deploying to Kubernetes, you need an application. The key is to have an application that can be containerized. Containerizing your application with Docker is a standard prerequisite, as Kubernetes excels at orchestrating containers. This involves creating a Dockerfile that specifies how to build your application—including its code and all dependencies—into a portable image. This image will then be pulled by Kubernetes to run your application in a Pod. This step ensures your application is packaged consistently, making deployments reliable across different environments, from your local machine to a production cluster.

Writing Your Kubernetes Manifests

Kubernetes operates on a declarative model. You define the desired state of your application using YAML files, commonly known as manifests. These manifests tell Kubernetes what container image to use, how many replicas to run, what ports to expose, and other vital configuration details. For instance, a Deployment manifest will manage your application's stateless instances, ensuring the desired number of replicas are running. A Service manifest will define a stable network endpoint (like an IP address and port) to access your application's Pods. The official Kubernetes website provides excellent browser-based tutorials that are invaluable for learning to write these essential configuration files and understanding core Kubernetes components.
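
To make the shape of these manifests concrete, here is a minimal sketch that builds a Deployment and a Service as Python dictionaries and prints them as JSON, which kubectl accepts alongside YAML. The application name, image reference, and port numbers are placeholders chosen for illustration, not values from a real project.

```python
import json

APP = "hello-web"                    # hypothetical application name
IMAGE = "example.com/hello-web:1.0"  # placeholder image reference
labels = {"app": APP}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP},
    "spec": {
        "replicas": 3,                        # desired number of Pods
        "selector": {"matchLabels": labels},  # must match the template labels
        "template": {
            "metadata": {"labels": labels},
            "spec": {"containers": [{
                "name": APP,
                "image": IMAGE,
                "ports": [{"containerPort": 8080}],
            }]},
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": APP},
    "spec": {
        "selector": labels,                           # Pods that receive traffic
        "ports": [{"port": 80, "targetPort": 8080}],  # Service port -> container port
    },
}

# A v1 List lets both objects live in a single file.
manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
print(json.dumps(manifest, indent=2))
```

The same structure maps one-to-one onto YAML. If you save the output to a file, you should be able to pass it to `kubectl apply -f` like any other manifest.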

Deploying Your Application

Once your application is containerized and your Kubernetes manifests are written, deploying is straightforward. You'll use the kubectl apply -f <your-manifest-filename.yaml> command, pointing to your manifest file (or a directory of files). Kubernetes then takes over, reading your manifest and working to achieve the desired state. This typically involves pulling your container image from a registry (like Docker Hub or a private registry) and scheduling Pods to run on available Nodes within your cluster. Kubernetes is designed to keep containerized applications running smoothly, automatically managing updates and ensuring availability, which is crucial for production environments. For more complex scenarios involving multiple applications or clusters, Plural's Continuous Deployment capabilities can automate this entire process.

Accessing Your Deployed Application

After Kubernetes confirms your application is running (you can check Pod status with kubectl get pods), the next step is to access it. By default, applications within Kubernetes are only accessible via their internal cluster IP addresses. To expose your application externally, you'll typically use a Kubernetes Service of type LoadBalancer or NodePort, or an Ingress resource. A LoadBalancer service, if supported by your cloud provider, provisions an external load balancer that distributes traffic to your application. An Ingress resource provides more advanced HTTP/S routing rules, allowing you to expose multiple services under a single IP address. Many Kubernetes learning modules include specific instructions on how to expose your application publicly, guiding you through configuring the necessary resources.

Manage Kubernetes Resources Effectively

Managing your Kubernetes resources effectively is fundamental to running reliable and scalable applications. It's not just about launching Pods; it’s about orchestrating a symphony of components that work together. This includes how you deploy and update your applications using Pods and Deployments, how you expose them to users or other services through Services and Ingress, and how you handle application configurations and sensitive data with ConfigMaps and Secrets. Getting this right means your applications are resilient, can scale on demand, and remain secure.

Poor resource management can lead to a host of problems, from applications that don't start, services that are unreachable, to critical security vulnerabilities due to mishandled secrets. As your Kubernetes footprint grows, manually tracking and managing these interconnected pieces across multiple clusters becomes a significant challenge. This is where understanding the core resources and leveraging tools that provide clarity and control is imperative. For instance, Plural offers a unified dashboard that simplifies visibility and control over these resources across your entire Kubernetes fleet. This centralized view is invaluable for consistent management, efficient troubleshooting, and ensuring your configurations adhere to best practices, especially as your environment scales. Let's look at how to manage these key resources.

Work with Pods and Deployments

In Kubernetes, the Pod is the smallest and simplest unit in the object model that you create or deploy. A Pod represents a single instance of a running process in your cluster and can contain one or more containers, such as Docker containers, along with shared storage and network resources, and a specification for how to run the containers. Think of a Pod as a wrapper around your application containers, providing them with a unique IP address and a shared environment.

While you can work with individual Pods, you'll more commonly use Deployments to manage them. A Deployment provides declarative updates for Pods and ReplicaSets (which ensure a specified number of Pod replicas are running). You describe a desired state in a Deployment, and the Deployment Controller changes the actual state to the desired state at a controlled rate. This allows you to roll out new versions of your application, roll back to previous versions, and scale your application up or down seamlessly. Plural’s dashboard offers clear visualization of Deployment statuses and Pod health, making it easier to track updates and diagnose issues.

Configure Services and Ingress

Once your application is running in Pods managed by a Deployment, you need a way to make it accessible. This is where Kubernetes Services come in.

A Service defines a logical set of Pods and a policy by which to access them, often referred to as a microservice. Services provide a stable IP address and DNS name, so even if Pods are created or destroyed, the Service endpoint remains constant, ensuring reliable communication. Common Service types include ClusterIP (internal access), NodePort (exposing the service on each Node’s IP at a static port), and LoadBalancer (exposing the service externally using a cloud provider's load balancer).
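
The "logical set of Pods" is defined by labels: a Service routes to every Pod whose labels contain all of the key/value pairs in the Service's selector. The sketch below models that matching rule in plain Python; the `selects` helper and the Pod names are illustrative only.

```python
def selects(selector, pod_labels):
    """True if every key/value pair in the selector appears in the Pod's labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())

# Hypothetical Pods and their labels.
pods = {
    "web-7d9f-abc": {"app": "web", "tier": "frontend"},
    "web-7d9f-def": {"app": "web", "tier": "frontend"},
    "db-0": {"app": "db"},
}
service_selector = {"app": "web"}

endpoints = sorted(name for name, labels in pods.items()
                   if selects(service_selector, labels))
print(endpoints)  # ['web-7d9f-abc', 'web-7d9f-def']
```

Because membership is computed from labels rather than from a fixed list, replacement Pods join the Service automatically as long as they carry the same labels.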

For managing external access to your HTTP/S services, you'll typically use an Ingress. An Ingress is an API object that manages external access to the services in a cluster, typically HTTP. It can provide load balancing, SSL termination, and name-based virtual hosting, allowing you to route traffic to different services based on the request host or path. Plural CD can help manage the deployment of these Service and Ingress configurations consistently across multiple clusters, ensuring your networking policies are uniformly applied.
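
As a rough illustration of name-based virtual hosting, the sketch below builds an Ingress that sends two hostnames to two different Services. The hostnames, Service names, and the `rule` helper are assumptions made for this example, and the resource only takes effect if an ingress controller is running in the cluster.

```python
import json

def rule(host, service_name, port, path="/"):
    """Build one Ingress rule that routes a host and path prefix to a Service."""
    return {
        "host": host,
        "http": {"paths": [{
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service_name,
                                    "port": {"number": port}}},
        }]},
    }

ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "example-ingress"},
    "spec": {"rules": [
        rule("shop.example.com", "shop", 80),  # hypothetical Services
        rule("blog.example.com", "blog", 80),
    ]},
}
print(json.dumps(ingress, indent=2))
```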

Use ConfigMaps and Secrets Securely

Applications often require configuration data that might vary across environments (development, staging, production). ConfigMaps allow you to decouple this configuration from your container images, making your applications more portable. You can store configuration data as key-value pairs or as entire configuration files within a ConfigMap, which can then be consumed by Pods as environment variables, command-line arguments, or as files mounted into a volume. This approach keeps your container images generic and environment-agnostic.
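
Here is a small sketch of the environment-variable route, again expressed as Python dictionaries that serialize to a JSON manifest. The ConfigMap name, keys, and image reference are placeholders.

```python
import json

config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config"},
    # ConfigMap values are always strings, even for booleans and numbers.
    "data": {"LOG_LEVEL": "info", "FEATURE_X": "false"},
}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "app"},
    "spec": {"containers": [{
        "name": "app",
        "image": "example.com/app:1.0",  # placeholder image
        # envFrom exposes every key in the ConfigMap as an environment variable.
        "envFrom": [{"configMapRef": {"name": config_map["metadata"]["name"]}}],
    }]},
}
print(json.dumps({"apiVersion": "v1", "kind": "List",
                  "items": [config_map, pod]}, indent=2))
```

Swapping in a different ConfigMap per environment changes the application's settings without rebuilding the image.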

For sensitive information like passwords, API keys, or TLS certificates, Kubernetes provides Secrets. Secrets are similar to ConfigMaps but are intended for confidential data. Their values are base64 encoded, which is an encoding rather than a security measure: by default, Secrets are not encrypted at rest unless you enable etcd encryption or use an external secrets manager. It's crucial to manage access to Secrets tightly using RBAC. Plural’s architecture, which includes an egress-only agent communication model and local credential execution, enhances the security posture when managing these sensitive configurations across your Kubernetes fleet.
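
A quick way to see why base64 encoding should not be mistaken for protection: anyone who can read the Secret object can reverse it in one line. The sketch below uses a made-up password and Secret name.

```python
import base64

password = "s3cr3t-pa55"  # made-up value for illustration
encoded = base64.b64encode(password.encode()).decode()

secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},  # hypothetical name
    "type": "Opaque",
    "data": {"password": encoded},           # values under "data" are base64
}

# Decoding needs no key, which is why access control matters.
decoded = base64.b64decode(secret["data"]["password"]).decode()
print(decoded == password)  # True
```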

Scale and Update Your Applications

Once your application is up and running on Kubernetes, the next crucial steps involve managing its lifecycle: scaling to meet demand and updating it with new features or fixes. Kubernetes excels at these tasks, providing robust mechanisms to ensure your application remains available and performant even as it evolves. Effectively managing scaling and updates is key to leveraging the full power of container orchestration. Let's explore how you can implement these essential operations to keep your applications resilient and current.

Implement Horizontal Pod Autoscaling

One of the most powerful features Kubernetes offers is Horizontal Pod Autoscaling (HPA). Imagine your application suddenly experiences a surge in traffic. Without autoscaling, your existing pods might become overwhelmed, leading to slow response times or even outages. HPA automatically adjusts the number of running pods in a deployment or replica set based on observed metrics like CPU utilization or custom metrics you define. For example, if CPU usage across your pods consistently exceeds a threshold you set, say 70%, HPA can automatically launch new pods to distribute the load. Conversely, when demand subsides, it scales down the number of pods, optimizing resource usage and costs. This dynamic scaling ensures your application maintains performance under varying loads without requiring manual intervention. Within Plural, you can monitor the behavior of your HPAs and the resulting pod scaling across your entire fleet, all from a unified dashboard, providing clear visibility into resource allocation.
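
The core of the scaling decision is a ratio: the desired replica count is the current count multiplied by the observed metric divided by the target, rounded up. The sketch below applies that formula with the 70% target from the example above. The function name and the min/max defaults are illustrative, and the real controller adds details this sketch leaves out, such as a tolerance band and stabilization windows.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the basic HPA ratio, then clamp to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))

print(desired_replicas(4, 90, 70))   # 6  (4 * 90 / 70 = 5.14, rounded up)
print(desired_replicas(4, 35, 70))   # 2  (load halved, so half the Pods)
print(desired_replicas(4, 300, 70))  # 10 (capped at max_replicas)
```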

Perform Rolling Updates and Seamless Rollbacks

Keeping your applications current with the latest features and security patches is vital, but updates can be risky if not handled correctly. Kubernetes supports rolling updates, a strategy that moves your application to a new version with little or no downtime. During a rolling update, Kubernetes incrementally replaces pods running the old version of your application with pods running the new version. It waits for new pods to become ready to serve traffic before terminating old ones, which keeps the application available throughout the update, provided your readiness checks accurately reflect when a pod can take traffic.

Should an update introduce unexpected issues, Kubernetes also supports seamless rollbacks. With a single command or a change in your declarative configuration, you can swiftly revert your application to a previously deployed, stable version. This capability is invaluable for maintaining service reliability and minimizing the impact of faulty deployments. Plural CD enhances this process by providing a GitOps-based workflow, automating deployment pipelines. This means updates and rollbacks are managed through version-controlled configuration changes, offering greater consistency and auditability across all your clusters.

Implement Robust Health Checks

Implementing robust health checks is essential to ensure your application runs reliably and that Kubernetes can effectively manage its lifecycle. The two most commonly used probe types are liveness and readiness probes; a third type, the startup probe, protects slow-starting containers. A liveness probe checks if a container is still running correctly. If a liveness probe fails, for instance because your application deadlocks or encounters an unrecoverable error, Kubernetes will automatically restart the container, attempting to recover the application.

A readiness probe, on the other hand, determines if a container is ready to start accepting traffic. If a readiness probe fails, Kubernetes won't send traffic to that pod, even if it's running, until the probe passes. This is particularly useful when an application needs time to initialize, such as loading large data sets or establishing critical database connections. By configuring effective health checks, you enable Kubernetes' powerful self-healing capabilities, ensuring traffic is only routed to healthy, fully functional pods. Plural's built-in Kubernetes dashboard provides clear visibility into the status of these probes, helping you quickly identify and troubleshoot unhealthy pods within your managed clusters.
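
Probes are declared per container. The sketch below shows what a container spec with both probe types might look like, written as a Python dictionary that serializes to the JSON form of a manifest. The `/healthz` and `/ready` paths, the port, and the timing values are assumptions; your application would need to serve whatever endpoints you configure.

```python
import json

container = {
    "name": "app",
    "image": "example.com/app:1.0",  # placeholder image
    "ports": [{"containerPort": 8080}],
    "livenessProbe": {
        "httpGet": {"path": "/healthz", "port": 8080},  # hypothetical endpoint
        "initialDelaySeconds": 10,  # wait before the first check
        "periodSeconds": 10,        # check every 10 seconds
        "failureThreshold": 3,      # restart after 3 consecutive failures
    },
    "readinessProbe": {
        "httpGet": {"path": "/ready", "port": 8080},    # hypothetical endpoint
        "periodSeconds": 5,
    },
}
print(json.dumps(container, indent=2))
```

Keeping the two endpoints separate lets the application report "alive but still warming up" without being restarted.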

Master Kubernetes Networking and Storage

Ensuring your applications communicate reliably and their data persists is crucial. Kubernetes provides robust networking and storage mechanisms, vital for scalable applications. Understanding these is key, and managing them effectively across a fleet—a task Plural simplifies—is the next step.

Understand Cluster Networking Fundamentals

At its core, Kubernetes manages containers—those small, self-contained units of software—across a cluster of machines, enabling easy scaling and high availability. For these containers, primarily running in Pods, to work together, they need to communicate. Kubernetes networking ensures every Pod gets its own IP address, allowing direct communication between Pods without needing to map host ports. Services then provide stable IP addresses and DNS names to access a group of Pods, abstracting away the dynamic nature of Pod IPs. This foundational layer allows your microservices to discover and talk to each other seamlessly within the cluster.

Configure Network Policies for Security

By default, Pods can communicate freely with each other, so securing your cluster network is critical. Kubernetes Network Policies act as a firewall for Pods, letting you define rules for ingress and egress traffic. You can specify which Pods can connect or restrict access by namespace, for example allowing frontend Pods to talk to backends on a specific port while blocking other traffic. Note that Network Policies are only enforced if your cluster's network plugin supports them. This granular control is key for isolating workloads.
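
The frontend-to-backend example can be sketched as a NetworkPolicy like the one below, built as a Python dictionary and printed as JSON. The `app: frontend` and `app: backend` labels and port 8080 are assumptions for illustration.

```python
import json

network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "allow-frontend-to-backend"},
    "spec": {
        # The policy applies to backend Pods...
        "podSelector": {"matchLabels": {"app": "backend"}},
        "policyTypes": ["Ingress"],
        "ingress": [{
            # ...and admits traffic only from frontend Pods on TCP 8080.
            "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
            "ports": [{"protocol": "TCP", "port": 8080}],
        }],
    },
}
print(json.dumps(network_policy, indent=2))
```

Once a Pod is selected by a policy of type Ingress, any inbound traffic that no rule allows is dropped, so this single rule also blocks other sources.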

Manage Persistent Storage with Volumes and Claims

Many applications, like databases, need data to persist beyond a Pod's lifecycle. Since Pods are ephemeral, data written to a container's filesystem is lost when the container restarts or the Pod is replaced. Kubernetes manages persistent storage using Volumes, PersistentVolumes (PVs), and PersistentVolumeClaims (PVCs). A Volume attaches storage (like a cloud disk) to a Pod. A PV represents a piece of storage available to the cluster, and a PVC is a user's request for storage that Kubernetes binds to a matching PV, abstracting the underlying infrastructure details. This allows developers to request storage without needing to know how it's provided, making applications more portable.
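
As a minimal sketch of how a claim and a Pod fit together, the example below requests 10Gi of storage and mounts it into a container. The names, size, image, and mount path are placeholders, and the claim assumes the cluster has a default storage class able to satisfy it.

```python
import json

pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],                # mounted read-write by one node
        "resources": {"requests": {"storage": "10Gi"}},  # requested capacity
    },
}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "db"},
    "spec": {
        "containers": [{
            "name": "db",
            "image": "example.com/db:1.0",  # placeholder image
            "volumeMounts": [{"name": "data", "mountPath": "/var/lib/data"}],
        }],
        # The Pod refers to the claim by name, not to a specific disk.
        "volumes": [{"name": "data",
                     "persistentVolumeClaim": {"claimName": pvc["metadata"]["name"]}}],
    },
}
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pvc, pod]}, indent=2))
```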

Use StatefulSets for Stateful Applications

While Deployments are great for stateless applications, some applications, such as databases or distributed key-value stores, require stable, unique network identifiers and persistent storage tied to each instance. This is where StatefulSets come in. Each Pod in a StatefulSet gets a persistent identifier (e.g., web-0, web-1) and, when volume claim templates are defined, its own dedicated PersistentVolumeClaim. This ensures that even if a Pod is rescheduled, it comes back with the same identity and storage, maintaining its state reliably for critical applications.
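
The naming scheme is predictable: Pods are numbered from zero, and each Pod's claim is named after the volume claim template followed by the Pod name. The helper below reproduces that convention; the function itself and the `data` template name are illustrative.

```python
def statefulset_identities(name, replicas, claim_template="data"):
    """Return (pod_name, pvc_name) pairs following StatefulSet naming rules."""
    return [(f"{name}-{i}", f"{claim_template}-{name}-{i}")
            for i in range(replicas)]

for pod_name, pvc_name in statefulset_identities("web", 3):
    print(pod_name, pvc_name)
# web-0 data-web-0
# web-1 data-web-1
# web-2 data-web-2
```

Because the names are derived from the ordinal rather than generated randomly, a rescheduled web-1 reattaches to data-web-1 instead of starting with empty storage.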

Monitor, Log, and Troubleshoot in Kubernetes

Effectively managing applications in Kubernetes extends far beyond initial deployment; it demands constant vigilance over their operational health. The distributed and dynamic architecture of Kubernetes, while incredibly powerful, can also obscure the root causes of issues when they surface. Operating without robust monitoring, comprehensive logging, and efficient troubleshooting strategies is like navigating a complex system in the dark. Problems can escalate rapidly, leading to service disruptions, performance bottlenecks, and ultimately, a poor user experience. This is why establishing solid observability practices from the outset isn't just advisable—it's fundamental for any team committed to running reliable services on Kubernetes.

Consider monitoring as your proactive early warning system, continuously assessing the vitality of your applications and the underlying infrastructure. Logging, then, provides the detailed chronicle, capturing the sequence of events and errors that illuminate what transpired and why. Troubleshooting is the subsequent investigative process, utilizing data from both monitoring and logging to diagnose and resolve these issues. As your Kubernetes deployment expands, potentially across multiple clusters for various environments or geographical regions, the intricacy of these tasks intensifies. It's in such scenarios that a unified platform for fleet management proves invaluable, enabling you to implement consistent observability practices and derive insights across your entire Kubernetes estate from a centralized control plane. The following sections will walk you through establishing these critical components.

Set Up Monitoring with Prometheus

For Kubernetes monitoring, Prometheus has emerged as the de facto open-source standard. It excels at collecting time-series data by periodically scraping metrics from configured endpoints, including your Kubernetes nodes, pods, and services. This allows you to track vital signs like CPU and memory utilization, pod counts, API server latency, and custom application-specific metrics. This data is indispensable for understanding performance patterns, configuring alerts for abnormal conditions, and making data-driven decisions about scaling your applications.

To make sense of these metrics, visualization tools like Grafana are commonly used alongside Prometheus. For teams aiming to streamline this setup, Plural’s Service Catalog can simplify deploying a scalable Prometheus instance, often integrated with solutions like VictoriaMetrics, ensuring robust monitoring is an integral part of your Kubernetes foundation from the start.

Implement Centralized Logging (e.g., with Fluentd)

In Kubernetes, pods are designed to be ephemeral; they can be created, terminated, and rescheduled frequently as part of normal operations or in response to failures. Relying on logs stored within individual containers is therefore unreliable for any serious diagnostic effort. This is where centralized logging becomes essential. By systematically collecting logs from all your containers, nodes, and Kubernetes system components and forwarding them to a central, durable storage location, you create a searchable and persistent audit trail of events. This aggregated view is critical for debugging application errors, performing security audits, and gaining a comprehensive understanding of system behavior over time.

A widely adopted tool for this task is Fluentd. Typically, you would deploy Fluentd as a DaemonSet, ensuring an agent runs on every node to collect local logs and forward them to a backend such as Elasticsearch, Splunk, or a cloud provider's managed logging service. Plural simplifies this by offering pre-configured logging stacks, like an Elasticsearch setup, through its Service Catalog, facilitating a consistent and effective logging strategy across all your clusters.

Resolve Common Kubernetes Issues

Despite its many advantages, Kubernetes presents a steep learning curve, and troubleshooting can often feel like a complex puzzle. Some frequent issues you'll likely encounter include pods stuck in a Pending state (often due to insufficient cluster resources or unmet scheduling constraints), ImagePullBackOff errors (signaling problems with fetching container images from a registry), or CrashLoopBackOff status (indicating a container is starting, then repeatedly crashing).

Your primary tool for initial investigation is usually kubectl. Commands such as kubectl describe pod <pod-name>, kubectl logs <pod-name> -c <container-name>, and kubectl get events --sort-by=.metadata.creationTimestamp provide crucial diagnostic information. For a container in CrashLoopBackOff, adding --previous to kubectl logs shows the output of the last crashed instance rather than the one currently restarting. However, manually sifting through extensive logs and event streams across numerous components can be time-consuming. This is an area where Plural's AI-assisted troubleshooting, a core feature of its Kubernetes fleet management platform, can expedite issue resolution by explaining errors and helping to identify the root cause.
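A first triage pass can be scripted. The sketch below walks data shaped like the output of kubectl get pods -o json and flags the three conditions described above. The sample pods and the hint text are made up for illustration; only the field paths (status.phase and status.containerStatuses[].state.waiting.reason) follow the Pod API.

```python
# Suggested next steps per condition; the wording is illustrative.
HINTS = {
    "Pending": "check scheduling events: kubectl describe pod <pod-name>",
    "ImagePullBackOff": "verify image name, tag, and registry credentials",
    "CrashLoopBackOff": "read the last crash: kubectl logs <pod-name> --previous",
}


def triage(pod_list):
    """Return (pod name, condition, hint) tuples for pods that need attention."""
    findings = []
    for pod in pod_list.get("items", []):
        name = pod["metadata"]["name"]
        status = pod.get("status", {})
        # Container-level waiting reasons are more specific than the pod phase.
        reasons = []
        for cs in status.get("containerStatuses", []):
            reason = cs.get("state", {}).get("waiting", {}).get("reason")
            if reason in HINTS:
                reasons.append(reason)
        if not reasons and status.get("phase") == "Pending":
            reasons = ["Pending"]
        findings.extend((name, r, HINTS[r]) for r in reasons)
    return findings


# Hypothetical sample shaped like `kubectl get pods -o json` output.
sample = {
    "items": [
        {"metadata": {"name": "web-1"},
         "status": {"phase": "Running", "containerStatuses": [{"state": {"running": {}}}]}},
        {"metadata": {"name": "web-2"}, "status": {"phase": "Pending"}},
        {"metadata": {"name": "api-1"},
         "status": {"phase": "Pending",
                    "containerStatuses": [{"state": {"waiting": {"reason": "ImagePullBackOff"}}}]}},
        {"metadata": {"name": "job-1"},
         "status": {"phase": "Running",
                    "containerStatuses": [{"state": {"waiting": {"reason": "CrashLoopBackOff"}}}]}},
    ]
}

for name, condition, hint in triage(sample):
    print(f"{name}: {condition} -> {hint}")
```

To run it against a real cluster, you could replace the sample with json.load(sys.stdin) and pipe kubectl's JSON output into the script.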

Leverage Kubernetes Dashboards (and Plural's Unified View for Fleet Management)

While kubectl is an indispensable command-line tool, a visual dashboard can offer a more intuitive and immediate understanding of your Kubernetes clusters' overall health and operational status. Dashboards consolidate key metrics, resource states, and critical events into an easily digestible interface, enabling quicker identification of emerging problems and a clearer view of resource allocation and consumption.

Plural builds on this with its built-in multi-cluster dashboard, which provides a single pane of glass for your entire Kubernetes fleet. The dashboard gives visibility into core resources like pods, deployments, and services, with live updates of cluster states and resource conditions across all managed environments. It connects securely to your clusters, even those residing in private networks or on-premises, through a reverse tunneling proxy. It also integrates with your existing Single Sign-On (SSO) solution for unified access control, allowing you to manage and troubleshoot all your clusters from one central location.

Explore Advanced Kubernetes Concepts and Best Practices

As you become more comfortable with Kubernetes, you'll start to encounter more sophisticated concepts and tools. They can significantly enhance your orchestration capabilities, helping you build applications that are more robust, scalable, and secure. Let's look at a few key areas that are essential for advanced Kubernetes use, including how a platform like Plural can make some of these complex practices more manageable for your team.

Understand Custom Resource Definitions (CRDs) and Operators

Custom Resource Definitions (CRDs) are a powerful feature that allows you to extend Kubernetes capabilities by adding your own, unique resource types. Think of CRDs as creating new nouns for the Kubernetes API, tailored to your specific operational needs. For instance, you might define a ScheduledBackup CRD to manage database backups in a declarative way. This means you can manage specialized applications and services using the familiar kubectl patterns, rather than being limited to standard Kubernetes objects.
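The ScheduledBackup idea could look roughly like the sketch below, which builds a CRD manifest plus one custom resource that uses it. The API group example.com, the version v1alpha1, and the schedule and database fields are assumptions invented for this example; only the overall CRD structure follows the apiextensions.k8s.io/v1 API.

```python
import json

GROUP = "example.com"  # hypothetical API group
PLURAL = "scheduledbackups"


def scheduled_backup_crd():
    """Build a CustomResourceDefinition for a hypothetical ScheduledBackup kind."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # A CRD's name must be <plural>.<group>.
        "metadata": {"name": f"{PLURAL}.{GROUP}"},
        "spec": {
            "group": GROUP,
            "scope": "Namespaced",
            "names": {"plural": PLURAL, "singular": "scheduledbackup", "kind": "ScheduledBackup"},
            "versions": [
                {
                    "name": "v1alpha1",
                    "served": True,
                    "storage": True,
                    "schema": {
                        "openAPIV3Schema": {
                            "type": "object",
                            "properties": {
                                "spec": {
                                    "type": "object",
                                    "required": ["schedule", "database"],
                                    "properties": {
                                        "schedule": {"type": "string"},  # cron expression
                                        "database": {"type": "string"},
                                    },
                                }
                            },
                        }
                    },
                }
            ],
        },
    }


crd = scheduled_backup_crd()

# One instance of the new resource type (all values are illustrative).
backup = {
    "apiVersion": f"{GROUP}/v1alpha1",
    "kind": "ScheduledBackup",
    "metadata": {"name": "nightly-orders-db", "namespace": "default"},
    "spec": {"schedule": "0 2 * * *", "database": "orders"},
}

print(json.dumps(crd, indent=2))
print(json.dumps(backup, indent=2))
```

Once a CRD like this is applied, the API server stores and validates ScheduledBackup objects, and commands such as kubectl get scheduledbackups work like they do for built-in types. Nothing acts on those objects until a controller is running.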

Operators build upon CRDs by introducing custom controllers that actively manage these custom resources. A controller watches its resources and repeatedly reconciles the cluster's actual state toward the desired state declared in them. In this way, an operator encodes human operational knowledge into software. Plural, for example, utilizes CRDs within its Stacks feature to manage infrastructure-as-code components like Terraform. This integrates them natively into the Kubernetes ecosystem, enabling API-driven automation and consistent management of complex infrastructure alongside your applications.
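The core of any operator is a reconcile step that compares desired state with observed state and decides what to change. The following Python sketch shows that comparison in isolation, as a pure function over plain dictionaries; it is a simplified model for illustration, not how Plural or any specific operator framework implements it, and the backup names are hypothetical.

```python
def reconcile(desired, observed):
    """Return the actions that move observed state toward desired state.

    Both arguments map a resource name to its spec.
    """
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in observed:
            actions.append(("create", name))
        elif observed[name] != spec:
            actions.append(("update", name))
    for name in sorted(observed):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical desired state (for example, from custom resources) and live state.
desired = {
    "nightly": {"schedule": "0 2 * * *"},
    "weekly": {"schedule": "0 3 * * 0"},
}
observed = {
    "nightly": {"schedule": "0 4 * * *"},  # drifted from the declared schedule
    "legacy": {"schedule": "0 0 1 * *"},   # no longer declared anywhere
}

print(reconcile(desired, observed))
# Once state has converged, reconciling again yields no further actions.
print(reconcile(desired, desired))
```

A real controller runs this kind of comparison whenever a watched object changes and again on a periodic resync, which is why the logic needs to be safe to repeat.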

Apply Essential Kubernetes Security Practices

Securing your Kubernetes clusters is not an afterthought; it's a continuous process. Start with the Pod Security Standards, which define three policy levels (Privileged, Baseline, and Restricted) that you can enforce per namespace through the built-in Pod Security Admission controller. A fundamental aspect is managing access control with Role-Based Access Control (RBAC). RBAC allows you to define granular permissions, specifying who can do what to which resources within your cluster. Don't overlook network policies; these act as a firewall within your cluster, controlling traffic flow between pods and namespaces, which is vital for isolating workloads and limiting the blast radius of potential breaches. Keep in mind that network policies only take effect when the cluster's network plugin supports them.
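These three controls map onto a handful of small manifests. The Python sketch below assembles one example of each for a single namespace and prints them as a JSON List that kubectl should be able to apply. The namespace team-a, the user jane@example.com, and the object names are hypothetical; the API groups, kinds, and the pod-security label key come from the Kubernetes API.

```python
import json

NAMESPACE = "team-a"  # hypothetical namespace
RBAC_GROUP = "rbac.authorization.k8s.io"

# Pod Security Standards: enforce the "restricted" level via a namespace label.
namespace = {
    "apiVersion": "v1",
    "kind": "Namespace",
    "metadata": {
        "name": NAMESPACE,
        "labels": {"pod-security.kubernetes.io/enforce": "restricted"},
    },
}

# RBAC: a read-only role for pods, bound to one (hypothetical) user.
pod_reader = {
    "apiVersion": f"{RBAC_GROUP}/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": NAMESPACE},
    "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}],
}
binding = {
    "apiVersion": f"{RBAC_GROUP}/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "read-pods", "namespace": NAMESPACE},
    "subjects": [{"kind": "User", "name": "jane@example.com", "apiGroup": RBAC_GROUP}],
    "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_GROUP},
}

# Network policy: select every pod in the namespace and allow no ingress.
default_deny = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "default-deny-ingress", "namespace": NAMESPACE},
    "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
}

manifests = [namespace, pod_reader, binding, default_deny]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Starting from a default-deny policy and then adding narrowly scoped allow rules keeps the set of permitted traffic explicit, in the same way the Role lists only the verbs a user needs.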

Plural enhances cluster security by integrating with your existing identity provider for Single Sign-On (SSO) to its embedded Kubernetes dashboard. This dashboard uses Kubernetes Impersonation, meaning RBAC policies resolve directly to your console user email and groups, simplifying secure access and auditability across your entire fleet without juggling individual kubeconfigs.

Simplify Multi-Cluster Management with Plural

As organizations scale, managing a single Kubernetes cluster often evolves into overseeing a fleet of clusters. These can span different environments—development, staging, production—or even various cloud providers and on-premises data centers. This growth introduces significant complexity in maintaining consistent deployments, managing configurations, and achieving unified observability.

"Plural is a platform that simplifies the management of multiple Kubernetes clusters, allowing you to deploy and manage applications across different environments seamlessly," as noted by Pomerium in their review of Kubernetes management tools. Plural provides a unified interface and a consistent GitOps-based workflow. Its architecture, featuring an agent-based, egress-only communication model, ensures secure and scalable operations without exposing cluster API endpoints. This allows you to manage clusters in private networks just as easily as those in the public cloud, offering a true single pane of glass for your Kubernetes operations.

Continue Your Kubernetes Journey

Mastering Kubernetes is an ongoing process, not a one-time achievement. The ecosystem is constantly evolving, with new tools, patterns, and best practices emerging regularly. As you gain more experience, you'll discover deeper layers of complexity and new ways to optimize your deployments. Committing to continuous learning and exploration is key to effectively leveraging Kubernetes for your applications. This involves seeking out robust solutions to manage complexity, actively engaging with educational resources, and staying informed about the latest developments in the Kubernetes landscape.

Find Resources for Continuous Learning

The Kubernetes community offers a wealth of resources for learners at all levels. For those starting out or looking to solidify their foundational knowledge, hands-on experience is invaluable. The official Kubernetes website hosts tutorials that cover essential concepts and provide practical exercises. Note that the browser-based Katacoda scenarios these tutorials once embedded were retired when Katacoda shut down, so plan to run the exercises against a lightweight local cluster such as Minikube or Kind, where you can experiment with kubectl commands and core objects safely.

For a more structured approach, courses like "Kubernetes for the Absolute Beginners — Hands-on" are excellent for building a strong practical understanding. As you progress, look for resources that delve into specific areas like networking, storage, or security. Engaging with community forums, attending webinars, and contributing to open-source projects related to Kubernetes can also significantly accelerate your learning and provide real-world insights. Remember, practical application is key to truly internalizing Kubernetes concepts.

Stay Current with Kubernetes Developments

Kubernetes is a dynamic and rapidly evolving technology. Its architecture, with its many interacting components like nodes, pods, services, and controllers, can be complex to fully grasp, and new features and improvements are released regularly. Keeping your clusters up to date with the latest versions is crucial for security and access to new functionality, but handling upgrades and rollbacks, especially in production, requires careful planning and execution.

Staying informed about these developments is essential. Follow the official Kubernetes blog, release notes, and community channels. Pay attention to discussions around new features, deprecations, and emerging best practices. Understanding the direction of the project and the challenges being addressed by the community will help you make informed decisions about your own Kubernetes strategy. Tools that simplify aspects like multi-cluster management and provide consistent deployment pipelines, such as Plural's GitOps-based continuous deployment, can also help mitigate the complexity associated with keeping pace with Kubernetes evolution, allowing your team to focus more on application development.

Frequently Asked Questions

I'm just starting with Kubernetes. What's one key area I should focus on to avoid early frustrations?

Understanding how Kubernetes objects like Pods, Deployments, and Services relate to each other is crucial. Initially, focus on getting a simple application deployed and accessible. Don't try to learn everything at once; instead, build a solid grasp of these core building blocks. Using local tools like Minikube or Kind can provide a safe space to experiment. As you progress, you'll find that managing these across many applications or clusters is where tools like Plural really help by providing a consistent operational view.

My application is deployed, but how do I ensure it stays healthy and performs well without constant manual checks?

This is where Kubernetes' self-healing and scaling capabilities shine. You'll want to implement health checks (liveness and readiness probes) so Kubernetes knows if your application is truly operational and can restart or reroute traffic accordingly. For performance, Horizontal Pod Autoscaling (HPA) allows your application to automatically scale based on demand. Setting up monitoring with tools like Prometheus will give you visibility into performance metrics, and Plural's unified dashboard can centralize this view, especially when you're managing multiple applications or clusters.
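For intuition about how HPA picks a replica count, here is a small Python sketch of the scaling rule described in the Kubernetes documentation: scale the current replica count by the ratio of the observed metric to its target, round up, and skip changes when the ratio is within a tolerance of 1.0. The default bounds of 1 and 10 replicas are assumptions for this example, and the real controller also handles details omitted here, such as unready pods and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA rule: ceil(current * currentMetric / targetMetric).

    min_replicas and max_replicas are illustrative defaults for this sketch.
    """
    ratio = current_metric / target_metric
    # Within the tolerance band, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas averaging 200m CPU against a 100m target: scale out.
print(desired_replicas(4, 200, 100))
# 4 replicas averaging 50m CPU against a 100m target: scale in.
print(desired_replicas(4, 50, 100))
```

The tolerance band is what keeps a workload hovering near its target from flapping between replica counts on every evaluation.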

Managing configurations and sensitive data like API keys across different environments seems complex. What's a good approach?

Kubernetes offers ConfigMaps for general configuration and Secrets for sensitive data. The key is to decouple these from your application image. For Secrets, always ensure you have strong RBAC policies controlling access. When you're dealing with multiple clusters or environments, maintaining consistency and security for these configurations can become challenging. Plural helps by integrating with your existing identity provider for secure access to manage these configurations through its dashboard, ensuring consistent RBAC is applied across your fleet.
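One detail that often surprises newcomers is that values under a Secret's data field are base64-encoded, not encrypted. The Python sketch below builds a Secret manifest to show the encoding step; the Secret name, namespace, and the placeholder key value are all hypothetical.

```python
import base64
import json


def make_secret(name, namespace, values):
    """Build an Opaque Secret manifest, base64-encoding each value."""
    data = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": data,
    }


# Placeholder value only; never hard-code real credentials like this.
secret = make_secret("api-credentials", "default", {"API_KEY": "not-a-real-key"})
print(json.dumps(secret, indent=2))

# Anyone who can read the Secret can trivially decode it.
decoded = base64.b64decode(secret["data"]["API_KEY"]).decode("utf-8")
print(decoded)
```

Because decoding is this easy, read access to Secrets is effectively access to the credentials themselves, which is why tight RBAC around them matters.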

As my Kubernetes usage grows, what's the biggest operational challenge I'm likely to face, and how can I prepare for it?

One of the most significant challenges is managing a growing fleet of Kubernetes clusters, perhaps across different cloud providers or for various development, staging, and production environments. Maintaining consistent deployments, configurations, and observability across all of them can quickly become overwhelming. Preparing for this involves adopting standardized practices early on, like Infrastructure-as-Code. Platforms like Plural are designed specifically for this fleet management problem, offering a single pane of glass and consistent workflows to simplify operations at scale.

I've heard about 'GitOps' in the context of Kubernetes. Why is it important, and how does it help?

GitOps is a powerful operational model where your Git repository serves as the single source of truth for your Kubernetes cluster configurations and application deployments. All changes are made via pull requests to Git, providing an auditable, version-controlled history. This approach makes deployments more reliable, repeatable, and easier to roll back. Plural’s Continuous Deployment (CD) capabilities are built around GitOps principles, automating the synchronization of your desired state from Git to your target clusters, which greatly simplifies managing updates across your infrastructure.
