Guide to Kubernetes Deployments: Basics & Best Practices

Learn the essentials of Kubernetes deployments, including key components, strategies, and best practices for managing and optimizing your applications.

Kubernetes has become the de facto standard for container orchestration, but managing applications within a Kubernetes cluster can be complex. This is where Kubernetes Deployments come in. They provide a declarative, automated way to manage stateless applications, ensuring high availability and simplifying updates.

This guide explores the core concepts of Kubernetes Deployments, from their basic definition and purpose to advanced configuration options and best practices. Whether you're new to Kubernetes or looking to refine your deployment strategies, this comprehensive guide will provide the knowledge and practical examples you need to manage your applications effectively. We'll also show you how Plural simplifies Kubernetes deployments, making managing multiple clusters easier than ever.

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key Takeaways

- Deployments automate application management: Use Deployments to manage stateless applications, automate rollouts and rollbacks, and ensure high availability through self-healing and scaling. Configure resource limits and health checks for optimal performance.

- Effective management requires understanding the Kubernetes ecosystem: Learn how Deployments, ReplicaSets, and Pods work together. Choose the right deployment strategy (rolling, recreate, canary, blue/green) and monitor application health and resource usage.

- Plural streamlines Kubernetes deployments: Simplify multi-cluster management, automate deployments with GitOps, and gain enhanced visibility into your applications through Plural's unified dashboard.

What are Kubernetes Deployments?

Kubernetes Deployments offer a declarative approach to managing stateless applications within a Kubernetes cluster. They ensure that a specified number of application instances, running as containers inside Pods, remain available. A Deployment acts as a supervisor, automatically creating and updating these instances. If a Pod is lost, for example because its node fails or the Pod is evicted or deleted, the Deployment (through the ReplicaSet it manages) creates a replacement, maintaining the desired state and keeping the application available. This self-healing capability is fundamental to Deployments and a key reason they are used in production. Deployments also simplify updates through rolling updates and rollbacks, minimizing downtime and risk.

Key Components

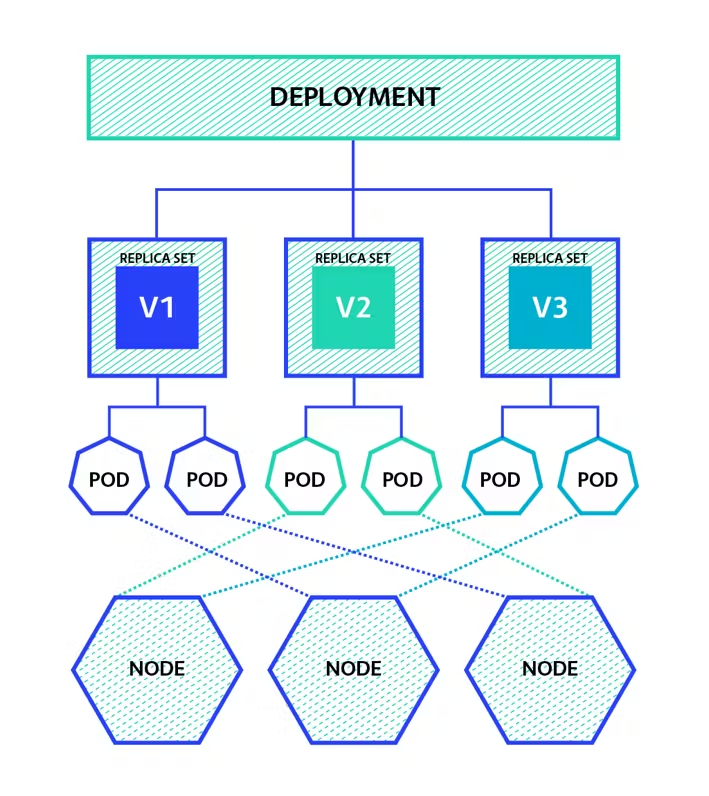

A Deployment manages ReplicaSets, which in turn manage Pods. Pods are the smallest deployable units in Kubernetes and contain one or more containers. A ReplicaSet ensures that a specified number of identical Pods are running. The Deployment sits above the ReplicaSet and defines the desired state: the number of Pods, the container image, and other configuration details. It uses a ReplicaSet to maintain this state, creating or deleting Pods so that the running count matches the desired replica count. Changing the Deployment's Pod template (for example, its container image) creates a new ReplicaSet, and the Deployment shifts Pods from the old ReplicaSet to the new one according to its update strategy, ensuring a smooth transition and minimizing disruption. This layered approach provides a robust and flexible mechanism for managing application workloads in Kubernetes.
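To make the ownership chain concrete, here is an abridged sketch of the kind of ReplicaSet the Deployment controller creates for the `my-deployment` example used later in this guide. The `<pod-template-hash>` values are placeholders for a hash the controller generates, and most fields (such as `uid` and `status`) are trimmed, so treat this as an illustration rather than exact `kubectl get replicaset -o yaml` output.

```yaml
# Abridged, illustrative ReplicaSet owned by the my-deployment Deployment.
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: my-deployment-<pod-template-hash>    # placeholder: generated by the controller
  labels:
    app: my-app
    pod-template-hash: <pod-template-hash>   # placeholder
  ownerReferences:                           # links this ReplicaSet back to its Deployment
  - apiVersion: apps/v1
    kind: Deployment
    name: my-deployment
    controller: true
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
      pod-template-hash: <pod-template-hash> # keeps each ReplicaSet's Pods separate
  template:
    metadata:
      labels:
        app: my-app
        pod-template-hash: <pod-template-hash>
    spec:
      containers:
      - name: my-container
        image: my-image:latest
```

The extra `pod-template-hash` label is what lets old and new ReplicaSets of the same Deployment coexist during an update without claiming each other's Pods.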

Kubernetes Deployment Core Features and Benefits

Kubernetes Deployments offer several key features that simplify application management and improve reliability.

Declarative Management

Kubernetes embraces a declarative approach to managing applications. Instead of writing a sequence of imperative commands, you declare the desired state of your application in a YAML file. This model simplifies configuration and makes complex deployments easier to manage. You describe how many replicas your application should run, which container image to use, and what resources it requires. Kubernetes then continuously works to make the actual state of your application match that configuration. For example, if a node fails, the Deployment's ReplicaSet creates replacement Pods, which the scheduler places on healthy nodes, to restore the desired number of replicas.

Rolling Updates and Rollbacks

Updating applications in a live environment can be risky. Kubernetes Deployments mitigate this risk by providing built-in support for rolling updates. A rolling update gradually replaces older application instances with newer ones, minimizing downtime and ensuring a smooth transition. If an update introduces unexpected issues, Kubernetes offers a simple rollback mechanism. With a single command, you can revert to a previous version of your application, quickly restoring service and minimizing the impact of faulty deployments.
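Rollbacks work because a Deployment keeps its old ReplicaSets, scaled to zero, as revision history. `kubectl rollout history deployment/<deployment-name>` lists the revisions, and `kubectl rollout undo deployment/<deployment-name>` reapplies a previous revision's Pod template, which scales its old ReplicaSet back up. The fragment below sketches the two settings that shape that history, assuming the `my-deployment` example from this guide; the change-cause message is a hypothetical value.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deployment
  annotations:
    # Recorded with each revision and shown by `kubectl rollout history`
    # (hypothetical message; set it to describe each change you apply).
    kubernetes.io/change-cause: "update my-container image to my-image:1.1.0"
spec:
  # Number of old ReplicaSets retained for rollback (the default is 10).
  revisionHistoryLimit: 5
  # ...replicas, selector, and template as in the basic example...
```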

Self-Healing and High Availability

Kubernetes Deployments are designed to be self-healing and highly available. If a Pod is deleted, evicted, or lost along with its node, the Deployment's ReplicaSet automatically creates a replacement, and the kubelet restarts containers that crash inside a running Pod. This automated recovery keeps your application available in the face of unexpected failures. Running multiple replicas spread across different nodes further improves availability: if one node becomes unavailable, the replicas on the other nodes continue to serve traffic.
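Health checks and node spreading are usually made explicit in the Pod template. The fragment below is a minimal sketch, assuming the container serves HTTP on port 8080 with health endpoints at the hypothetical paths `/healthz` and `/ready`: the liveness probe lets the kubelet restart a hung container, the readiness probe keeps a Pod out of Service endpoints until it can serve, and the topology spread constraint asks the scheduler to balance replicas across nodes.

```yaml
spec:
  template:
    metadata:
      labels:
        app: my-app
    spec:
      # Prefer an even spread of my-app Pods across nodes (soft constraint).
      topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: kubernetes.io/hostname
        whenUnsatisfiable: ScheduleAnyway
        labelSelector:
          matchLabels:
            app: my-app
      containers:
      - name: my-container
        image: my-image:latest
        ports:
        - containerPort: 8080        # assumed application port
        livenessProbe:               # restart the container if this check keeps failing
          httpGet:
            path: /healthz           # hypothetical endpoint
            port: 8080
          initialDelaySeconds: 10
          periodSeconds: 10
        readinessProbe:              # route traffic only once this check passes
          httpGet:
            path: /ready             # hypothetical endpoint
            port: 8080
          periodSeconds: 5
```

Using `whenUnsatisfiable: ScheduleAnyway` keeps Pods schedulable on a small cluster; `DoNotSchedule` would make the spread a hard requirement.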

Create and Manage Kubernetes Deployments

This section covers the practical aspects of creating, managing, and updating Deployments in Kubernetes.

YAML Essentials

Creating a Kubernetes Deployment starts with a YAML file describing your application's desired state: the number of replicas, the container image to use, and other configuration. A Deployment manifest has four top-level fields: apiVersion, kind, metadata, and spec. The spec holds the replica count, the label selector, and the Pod template, which defines the containers each replica runs.

A basic Deployment YAML looks like this:

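```yaml
apiVersion: apps/v1          # API group/version for Deployments
kind: Deployment
metadata:
  name: my-deployment
spec:
  replicas: 3                # desired number of Pods
  selector:
    matchLabels:
      app: my-app            # the Deployment manages Pods carrying this label
  template:                  # Pod template used to create each replica
    metadata:
      labels:
        app: my-app          # must match spec.selector.matchLabels
    spec:
      containers:
      - name: my-container
        image: my-image:latest
```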

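In this example, we define a Deployment named my-deployment that runs three Pod replicas using the my-image:latest image. The selector's matchLabels tell the Deployment to manage Pods labeled app: my-app, and the Pod template applies that same label to every Pod it creates. The two must agree: the API server rejects a Deployment whose selector does not match its template labels. You can find more details on Deployment YAML structure in the Kubernetes documentation.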

Deploy from the Command Line

Once you have your YAML file, apply it to your cluster with `kubectl apply -f <deployment-file>.yaml`. Make sure you have a running cluster and that kubectl is installed and configured to talk to it. You can also create a Deployment imperatively, without writing a YAML file:

```shell
kubectl create deployment <deployment-name> --image=<image-location>
```

For a practical example, let's deploy a simple application:

```shell
kubectl create deployment my-app --image=nginx:latest
```

This command creates a Deployment named my-app running the nginx:latest container image. For a more detailed walkthrough of deploying an application, check out the Kubernetes Basics tutorial.
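The imperative command and the YAML approach produce the same kind of object. Adding `--dry-run=client -o yaml` to the `kubectl create deployment` command prints the manifest instead of creating it. The output looks roughly like the trimmed sketch below (exact fields vary by kubectl version), and saving it to a file is a convenient way to move to `kubectl apply`.

```yaml
# Roughly what `kubectl create deployment my-app --image=nginx:latest
# --dry-run=client -o yaml` prints, with empty fields trimmed.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
  labels:
    app: my-app            # label derived from the Deployment name
spec:
  replicas: 1              # default when --replicas is not given
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
      - name: nginx        # container name derived from the image name
        image: nginx:latest
```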

Scale and Update Deployments

After deploying your application, you might need to scale it up or down based on demand or update it with new code or configuration changes. Scaling a Deployment involves changing the number of replicas. You can do this easily with kubectl:

```shell
kubectl scale deployment/<deployment-name> --replicas=<desired-number>
```

For example, to scale the my-app Deployment to five replicas:

```shell
kubectl scale deployment/my-app --replicas=5
```

Kubernetes also supports Horizontal Pod Autoscaling (HPA) to automatically adjust the number of replicas based on metrics like CPU utilization.
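As a sketch of HPA, the manifest below (using the `autoscaling/v2` API) keeps the `my-app` Deployment between 2 and 10 replicas, targeting an average CPU utilization of 70% of the Pods' CPU requests. The bounds and target are illustrative values. The HPA needs a metrics source such as metrics-server in the cluster, and CPU requests must be set on the containers for a utilization target to work.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app
spec:
  scaleTargetRef:            # the workload this HPA scales
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2             # illustrative lower bound
  maxReplicas: 10            # illustrative upper bound
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # percent of requested CPU, averaged across Pods
```

Once an HPA manages a Deployment, it owns the replica count, so a value set with `kubectl scale` or re-applied from your Deployment YAML can be overridden on the next autoscaling pass.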

Updating a Deployment is equally straightforward. Modify your Deployment YAML file with the desired changes, such as a new container image or updated resource limits, and then apply the changes using kubectl:

```shell
kubectl apply -f <yaml-filename>
```

The Deployment controller then manages the update process, rolling out the changes to your Pods with minimal disruption to your application.

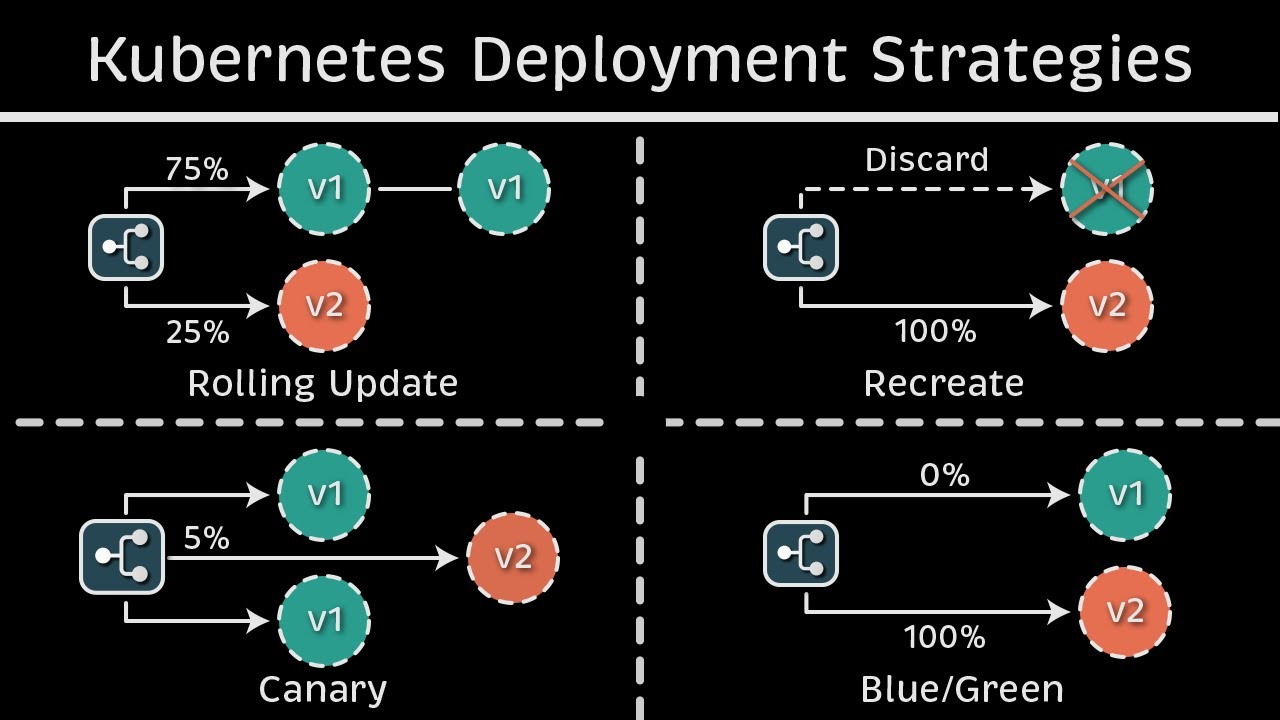

Kubernetes Deployment Strategies

Kubernetes offers several deployment strategies designed for different needs and risk tolerances. Choosing the right strategy minimizes downtime and ensures a smooth update process.

Rolling Updates

Rolling updates are the default deployment strategy in Kubernetes and a good choice for most production environments. This strategy gradually replaces existing Pods with new ones, one at a time or in batches, so the application remains available during the update. Kubernetes keeps at least the desired replica count minus maxUnavailable Pods available, removing old Pods as new ones become ready. You control the pace with two parameters: maxSurge (the maximum number of Pods that can be created above the desired replica count) and maxUnavailable (the maximum number of Pods that can be unavailable during the update). Both accept an absolute number or a percentage, and both default to 25%.
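Percentages are converted to Pod counts, with maxSurge rounded up and maxUnavailable rounded down: with 10 replicas, the 25% defaults allow 3 extra Pods (2.5 rounded up) and 2 unavailable Pods (2.5 rounded down). The sketch below instead sets explicit values for a hypothetical 4-replica Deployment so that serving capacity never drops during a rollout: at most 5 Pods exist at once, and an old Pod is removed only after a new one is ready.

```yaml
spec:
  replicas: 4
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # up to 4 + 1 = 5 Pods may exist during the rollout
      maxUnavailable: 0    # at least 4 Pods stay available throughout
```

maxSurge and maxUnavailable cannot both be 0, since the rollout would then have no room to make progress.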

Recreate Strategy

The recreate strategy is a simpler approach: all existing Pods are terminated before any new ones are created. While straightforward, it always causes downtime, because the application is completely unavailable between the old Pods stopping and the new ones becoming ready. That makes it a poor fit for production services handling live traffic. It is useful in development or testing, where downtime is acceptable and a clean break between versions is desired, and whenever two versions of an application must never run at the same time.
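Selecting this strategy is a small change in the Deployment spec, sketched below. If the manifest previously set `rollingUpdate` parameters, remove them, because they may not be specified when the strategy type is Recreate.

```yaml
spec:
  strategy:
    type: Recreate   # terminate all old Pods before creating any new ones
```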

Canary and Blue-Green

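Canary and blue-green deployments are more sophisticated strategies that offer greater control and risk mitigation, which is especially valuable in production. Unlike rolling updates and recreate, they are not built-in Deployment strategy types; you implement them by combining Deployments, labels, and Services, or with additional tooling.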

Canary deployments involve rolling out a new version to a small subset of users. This allows you to test the new version in a real-world environment with minimal impact. A common native method for basic canary deployment in Kubernetes is to create two separate Deployments - one for the stable version and one for the canary version - with both sharing the same Service selector. For example:

- Deploy your stable app with several replicas (e.g., three Pods of `myapp:1.0.0`).
- Deploy the canary version with a single replica (e.g., one Pod of `myapp:2.0.0-canary`), using the same `app: myapp` label.
- The Service routes traffic to all Pods matching the label, so roughly 25% of requests go to the canary Pod and 75% to the stable Pods (proportional to replica count).
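The two-Deployment pattern above can be sketched as follows. The `track` label is an assumption added for this example: it keeps each Deployment's selector limited to its own Pods (Deployments with overlapping selectors can interfere with each other), while the Service selects on `app: myapp` alone and therefore spreads traffic across all four Pods. The port numbers are placeholders.

```yaml
# Stable track: three replicas of the current version.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-stable
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
      track: stable          # assumed label; keeps this selector distinct
  template:
    metadata:
      labels:
        app: myapp
        track: stable
    spec:
      containers:
      - name: myapp
        image: myapp:1.0.0
---
# Canary track: one replica of the new version with the same app label.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-canary
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myapp
      track: canary
  template:
    metadata:
      labels:
        app: myapp
        track: canary
    spec:
      containers:
      - name: myapp
        image: myapp:2.0.0-canary
---
# The Service ignores the track label, so it balances across all four Pods.
apiVersion: v1
kind: Service
metadata:
  name: myapp
spec:
  selector:
    app: myapp
  ports:
  - port: 80
    targetPort: 8080         # assumed container port
```

Changing the replica counts shifts the split; deleting `myapp-canary` ends the experiment and returns all traffic to the stable Pods.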

Implementing advanced canary deployments often requires a service mesh or API gateway to manage traffic routing between different versions.

Blue/Green deployments involve maintaining two identical environments: "blue" (live) and "green" (staging). The new version is deployed to the green environment, and once validated, traffic is switched from blue to green. This allows for near-zero downtime and easy rollback if issues occur. The main drawback is the increased resource cost of maintaining two separate environments.
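A minimal native sketch of the switch, assuming two Deployments whose Pods carry `app: myapp` plus either `version: blue` or `version: green` (hypothetical label names): the Service selects one color at a time, so cutting over, or rolling back, is a change to a single selector value followed by `kubectl apply`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp
spec:
  selector:
    app: myapp
    version: blue      # change to "green" to switch traffic; back to "blue" to roll back
  ports:
  - port: 80
    targetPort: 8080   # assumed container port
```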

Monitor and Troubleshoot Kubernetes Deployments

This section covers key aspects of monitoring and troubleshooting to ensure your deployments run smoothly.

Deployment Status and Events

Kubernetes offers built-in mechanisms to check the status of your deployments and related events. The kubectl get deployments command shows each Deployment's current state: how many replicas are ready out of the desired count, how many are up to date with the latest Pod template, and how many are available. This gives a quick overview of whether a rollout is progressing.

For more granular details, kubectl describe deployment <deployment-name> displays events related to the deployment, such as pod creation, image pulls, and any errors encountered. Monitoring these events helps you understand the lifecycle of your deployment and identify potential problems early on.
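To catch stalled rollouts automatically, `kubectl rollout status deployment/<deployment-name>` waits for a rollout to finish and exits with a non-zero code if the Deployment exceeds its progress deadline, which makes it handy in CI/CD scripts. The deadline is set in the Deployment spec; the sketch below shows the field with its default value.

```yaml
spec:
  # If the rollout makes no progress for this many seconds, the Deployment's
  # Progressing condition becomes False with reason ProgressDeadlineExceeded.
  progressDeadlineSeconds: 600
```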

Common Issues and Solutions

When working with Deployments, you might encounter common issues like `CrashLoopBackOff`, `ImagePullBackOff`, or insufficient resources.

- `CrashLoopBackOff` means a container keeps exiting shortly after it starts. The cause is often an application error or misconfiguration, so examine the container logs (`kubectl logs <pod-name> --previous` shows the output of the last crashed instance).
- `ImagePullBackOff` indicates that the node cannot pull the container image, often due to an incorrect image name or tag, or missing registry credentials.
- Insufficient resource allocation can lead to performance degradation or Pod failures. Define resource requests and limits in your Deployment YAML to ensure your Pods have the CPU and memory they need.
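Both the credential fix and the resource fix live in the Pod template. The fragment below is a sketch, not a recommendation for specific values: `regcred` is a hypothetical Secret of type `kubernetes.io/dockerconfigjson` holding registry credentials, `registry.example.com` is a placeholder registry, and the CPU and memory figures are illustrative.

```yaml
spec:
  template:
    spec:
      imagePullSecrets:
      - name: regcred            # hypothetical registry-credentials Secret
      containers:
      - name: my-container
        image: registry.example.com/my-image:1.0.0   # placeholder image with a pinned tag
        resources:
          requests:              # what the scheduler reserves when placing the Pod
            cpu: 250m
            memory: 256Mi
          limits:                # CPU above this is throttled; exceeding memory gets the container OOM-killed
            cpu: 500m
            memory: 512Mi
```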


Logging and Metrics

Effective monitoring relies on collecting logs and metrics from your deployments. Centralized logging solutions, such as Elasticsearch, Fluentd, and Kibana (EFK) or Loki, aggregate logs from various pods and nodes, providing a unified view of your cluster's activity. Prometheus is a popular choice for gathering metrics, offering insights into resource usage, application performance, and other key indicators. You can then visualize these metrics using tools like Grafana, creating dashboards to track the health and performance of your deployments. Combining these tools provides a robust monitoring framework.


Kubernetes Deployments Best Practices

Best practices for Kubernetes deployments cover version control, CI/CD, security, and performance optimization. Implementing these practices ensures efficient, reliable, and secure application deployments.

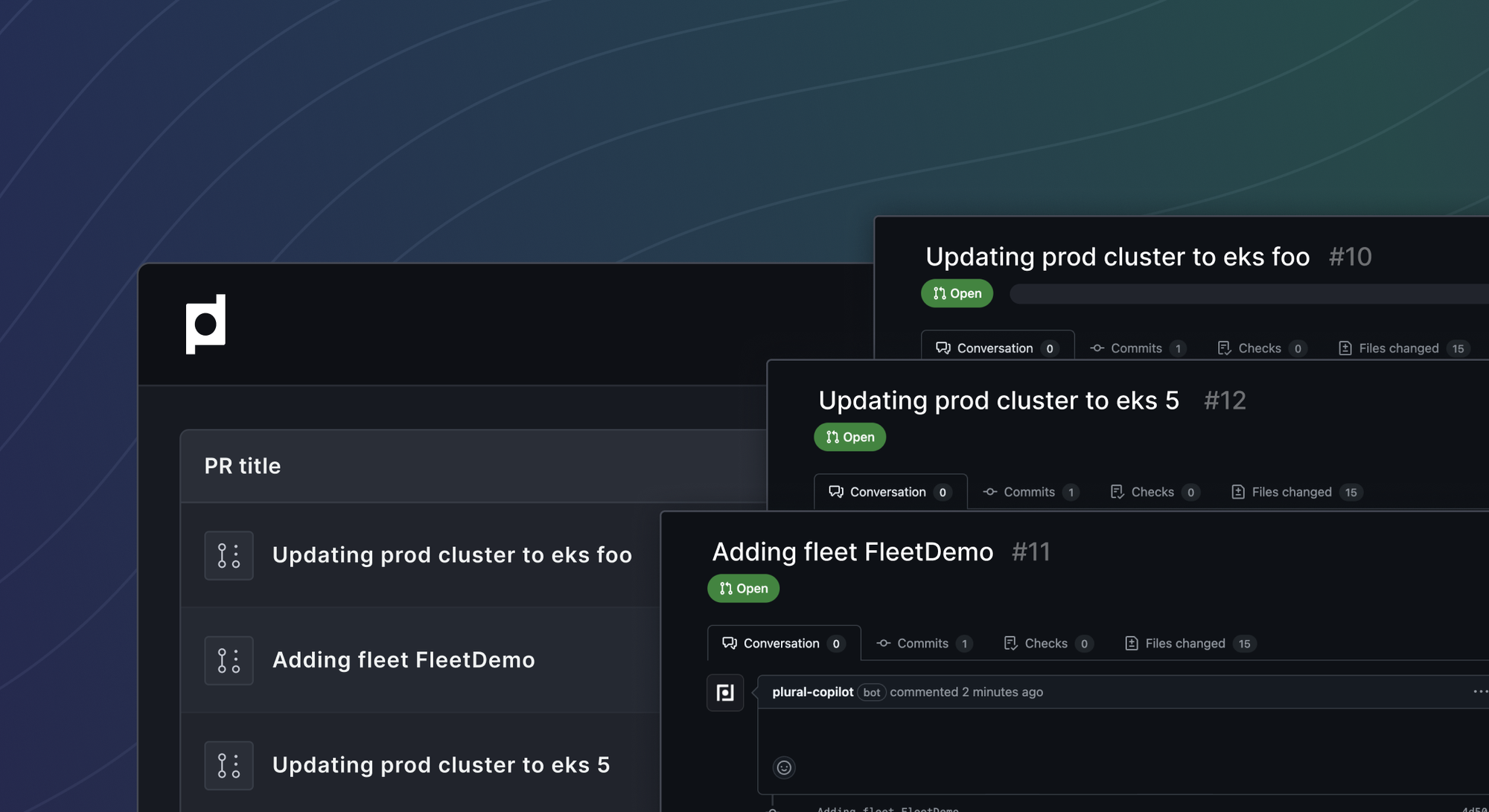

Version Control and CI/CD

Treat your Kubernetes configurations like any other code: store them in a version control system like Git. This enables change tracking, collaboration, and rollback capabilities. Integrate these configurations into your CI/CD pipelines for automated deployments and testing. This streamlines deployments and helps catch configuration errors early. Incorporating monitoring into your CI/CD pipeline ensures that deployments maintain performance and reliability. Track key metrics like resource utilization, container restarts, and API server latency to identify and address potential issues immediately after deployment. Plural's CI/CD integration simplifies this process, providing automated deployments and real-time feedback.

Security

Security in Kubernetes requires a proactive approach. Apply the principle of least privilege by using role-based access control (RBAC) to restrict access to Kubernetes resources. Regularly scan images for vulnerabilities and keep your clusters up to date with the latest security patches. Plan for security from the outset: securing a Kubernetes environment is complex and can be costly, and overlooking best practices can lead to vulnerabilities and expensive remediation.
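As an example of least privilege, the sketch below grants a single user read-only access to Deployments, ReplicaSets, and Pods in one namespace. The namespace `my-namespace`, the user `jane`, and the object names are hypothetical; binding to a group or ServiceAccount may suit your setup better.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: deployment-viewer
  namespace: my-namespace                   # hypothetical namespace
rules:
- apiGroups: ["apps"]                       # Deployments and ReplicaSets
  resources: ["deployments", "replicasets"]
  verbs: ["get", "list", "watch"]           # read-only verbs
- apiGroups: [""]                           # core API group (Pods)
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: deployment-viewer-binding
  namespace: my-namespace
subjects:
- kind: User
  name: jane                                # hypothetical user
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: deployment-viewer
  apiGroup: rbac.authorization.k8s.io
```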

Performance Optimization

Optimizing performance involves continuous monitoring and analysis. Utilize a combination of tools like Prometheus for metrics collection, Grafana for visualization, and centralized logging solutions such as the ELK stack or Loki. This comprehensive approach provides insights into resource usage, application performance, and potential bottlenecks. Effective Kubernetes monitoring involves tracking resource utilization (CPU, memory, storage, network), container-level metrics, Kubernetes-specific metrics (such as container restarts, pod evictions, and API server latency), and application-specific metrics. This allows you to identify areas for improvement and maintain optimal performance.

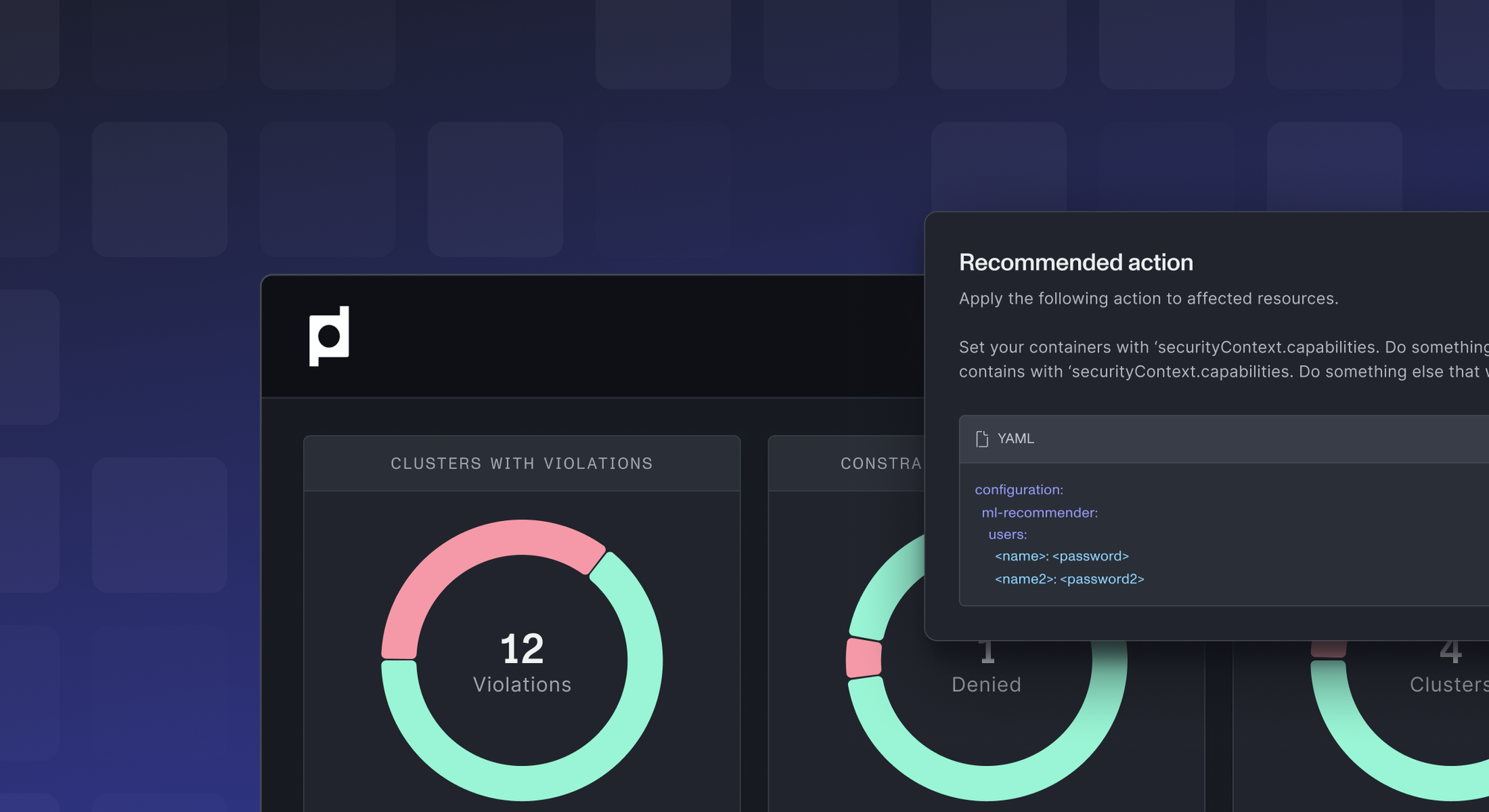

Simplify Multi-Cluster Deployment with Plural

Managing Kubernetes deployments across multiple environments presents unique challenges. Plural simplifies multi-cluster deployments by providing a single control plane to manage all of your clusters, regardless of their location. This allows you to deploy and manage applications consistently across your entire fleet, simplifying operations and reducing the risk of configuration drift.

Plural's agent-based architecture ensures secure communication with your clusters without complex network configurations, making it easy to manage deployments across cloud providers, on-premise environments, and even edge locations. This unified approach streamlines multi-cluster management, enabling you to scale your Kubernetes deployments confidently.

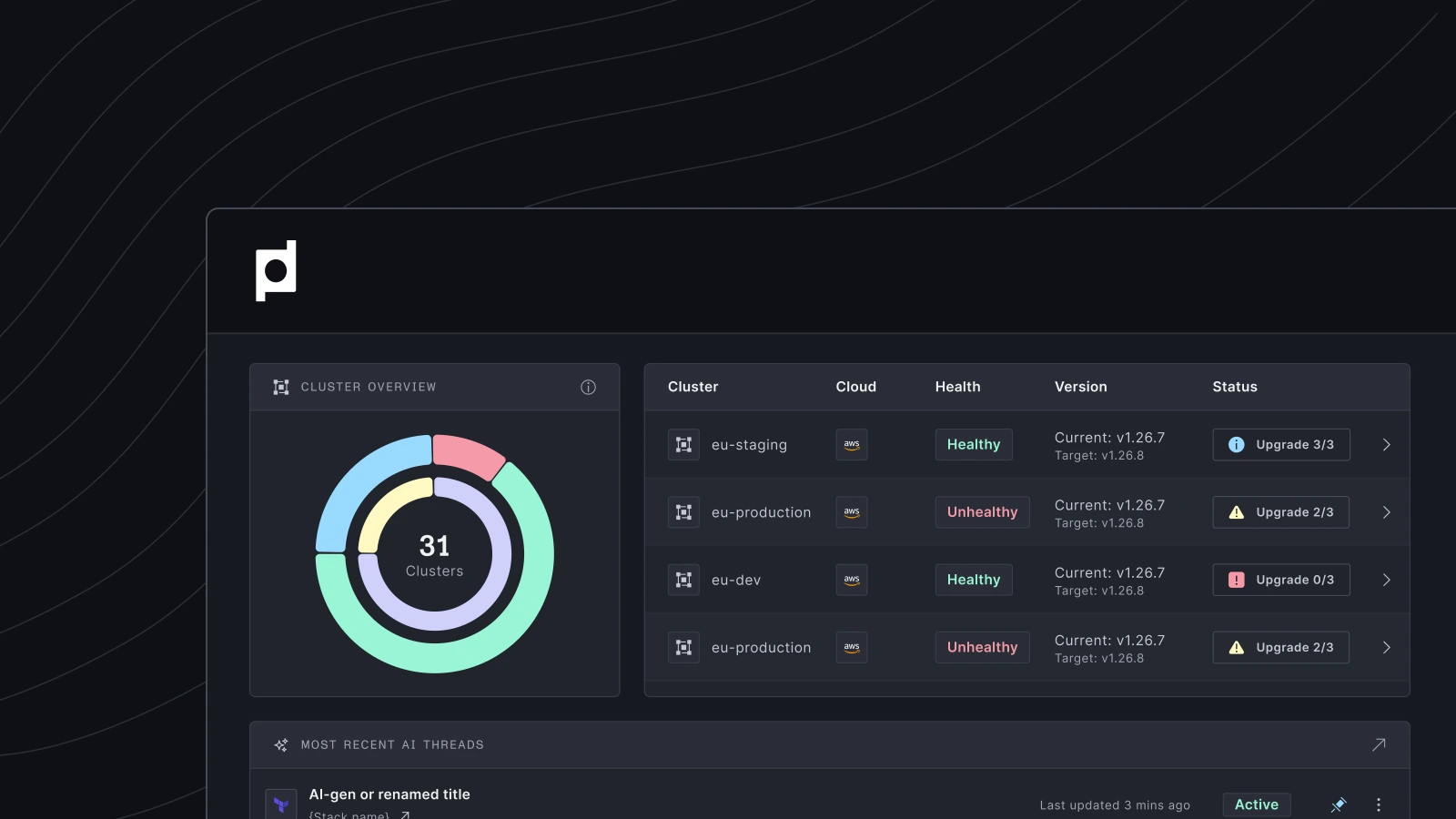

One interface for your entire Kubernetes estate

Juggling the unique UIs of different cloud providers is a challenge. Plural's Operational Console consolidates them into a single pane of glass, giving you complete visibility and control.

- ✅ Simplified provisioning across Amazon, Azure, Google, and more in one interface.

- ✅ Unified monitoring of all clusters (health, status, and resource usage) in one dashboard.

- ✅ Consolidated tools eliminate the need to switch between multiple cloud provider UIs.

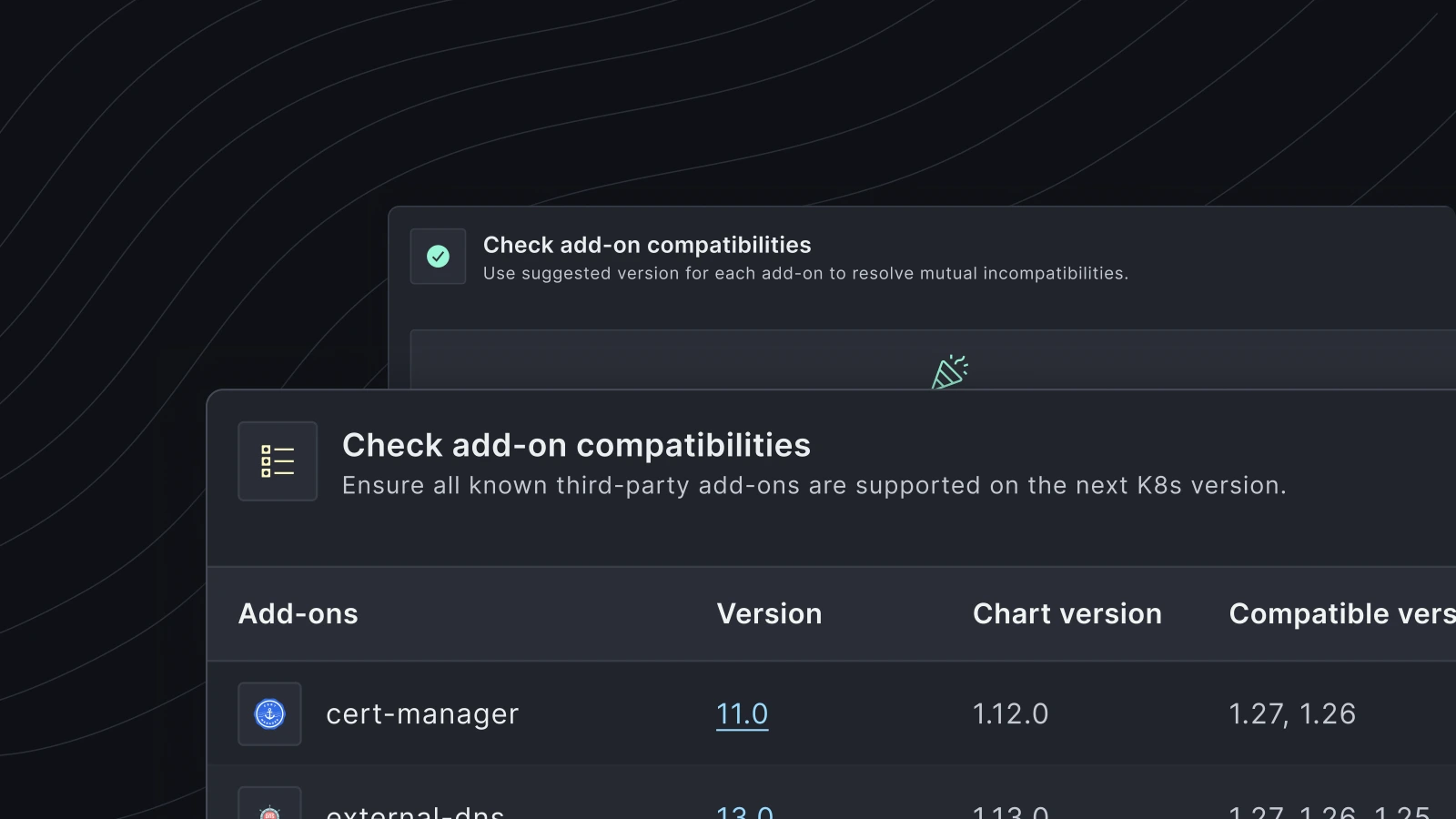

Simplified maintenance and upgrades

Keeping K8s clusters up to date across multiple cloud providers can be tedious and risky. Plural's Upgrade Management makes it easy to perform maintenance and upgrades from a single interface.

- ✅ Automated workflows for safe, consistent K8s upgrades across clouds

- ✅ Compatibility checks for YAML configurations and third-party add-ons

- ✅ Rollback capabilities to quickly recover from unexpected issues during upgrades

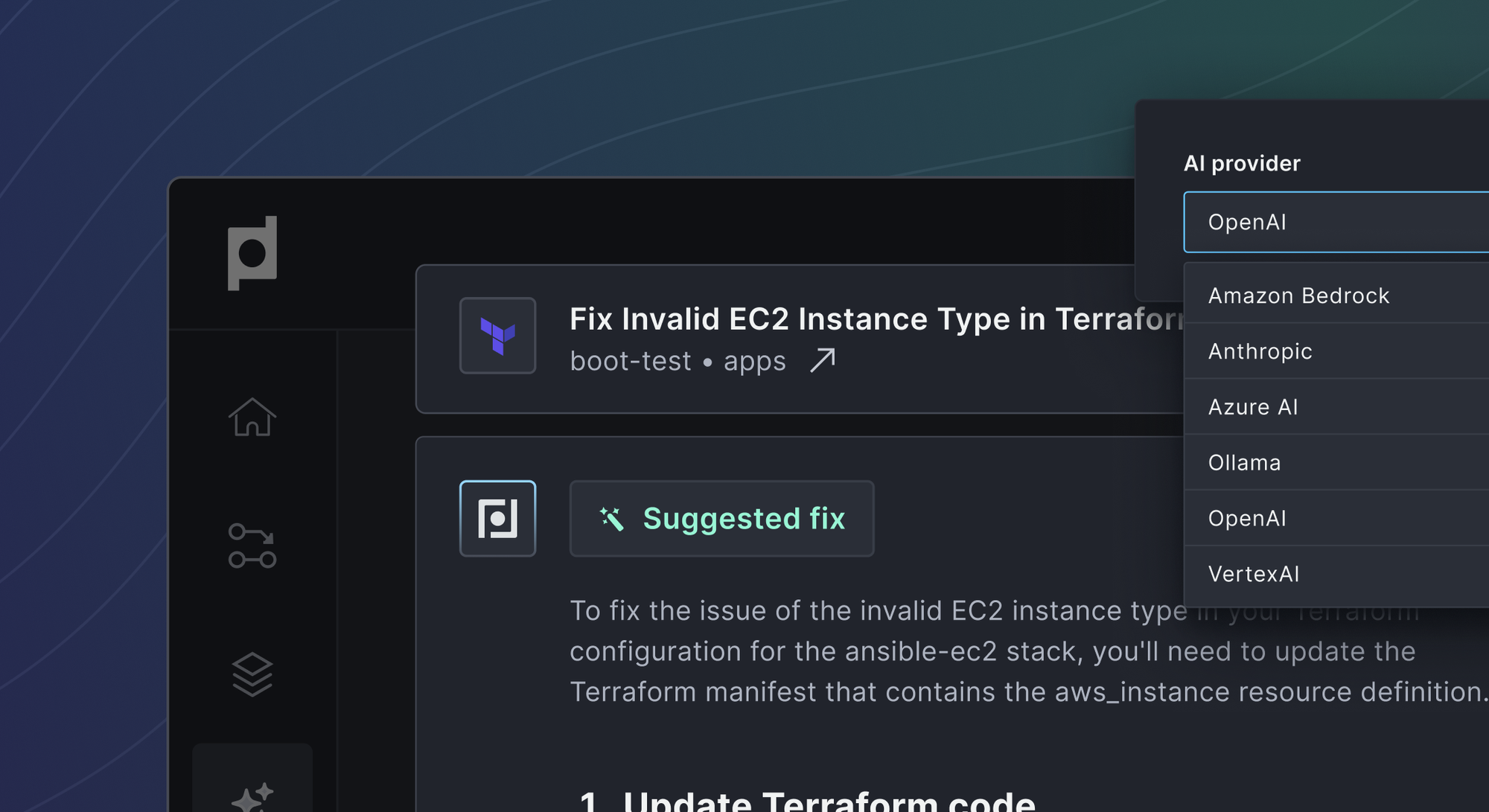

Manage heterogeneous environments with confidence

Each cloud provider has its own operational challenges, but Plural's architecture standardizes processes to simplify management and reduce errors.

- ✅ GitOps-driven workflows ensure consistency across clouds

- ✅ Centralized policy enforcement to maintain compliance across environments

- ✅ Real-time telemetry and AI-driven insights to proactively address issues


Frequently Asked Questions

How do Kubernetes Deployments differ from ReplicaSets?

Deployments manage ReplicaSets, providing a higher-level abstraction for managing application deployments. While a ReplicaSet ensures a specified number of identical Pods run, a Deployment manages updates and rollouts, creating new ReplicaSets for version changes. You define the desired state in the Deployment, and it uses ReplicaSets to achieve and maintain that state. Directly managing ReplicaSets is possible but less common, as Deployments simplify updates and rollbacks.

What are the key benefits of using Deployments for application management?

Deployments automate several crucial aspects of application management. They provide declarative configuration, allowing you to define the desired state of your application rather than specifying individual steps. They support rolling updates and rollbacks, minimizing downtime during updates and providing a quick recovery path if issues arise. Deployments also offer self-healing capabilities, automatically replacing failed Pods to maintain the desired replica count. These features combined make Deployments a powerful tool for ensuring application reliability and simplifying management.

How do I troubleshoot common Deployment issues like CrashLoopBackOff or ImagePullBackOff?

CrashLoopBackOff means a container keeps crashing shortly after it starts, often because of an application error or misconfiguration; the container logs usually reveal the cause. ImagePullBackOff suggests problems with accessing the container image, often due to an incorrect image name or authentication issues. Check your image configuration and credentials. For resource issues, analyze resource usage metrics and adjust resource requests and limits in your Deployment YAML. Tools like Plural's dashboard can help pinpoint these issues by providing a centralized view of resource usage, pod status, and logs.

What are the different deployment strategies available in Kubernetes, and how do I choose the right one?

Deployments support two built-in strategies, Rolling Update and Recreate, and Canary and Blue/Green patterns can be built on top of Kubernetes primitives. Rolling Updates are the default and suitable for most production environments, minimizing downtime. Recreate is simpler but involves downtime, making it more appropriate for development or testing. Canary deployments release a new version to a small subset of users for testing before a full rollout. Blue/Green deployments maintain two separate environments, switching traffic between them for near-zero downtime. The best strategy depends on your specific needs and risk tolerance. Plural simplifies the implementation of these strategies, particularly for multi-cluster environments.

How can Plural enhance the management and deployment of my Kubernetes applications?

Plural provides a unified platform for managing Kubernetes deployments across multiple clusters. It offers a single dashboard for visibility into all deployments, automating deployments through a GitOps-based approach. Plural simplifies multi-cluster management, streamlines complex deployments like Canary and Blue/Green, and integrates with popular monitoring tools for comprehensive observability. This reduces manual effort, minimizes errors, and allows you to scale your Kubernetes deployments more effectively.
